How Analytics Leaders Build Distributed Data Stewardship That Actually Works

Discover how analytics leaders design data stewardship that scales across organizations without sacrificing agility or creating administrative overhead.

Enterprise data has exploded beyond the capacity of traditional stewardship models. While organizations collect more information than ever, the responsibility for managing data quality, lineage, and governance has become fragmented across departments, business units, and cloud platforms.

Analytics leaders find themselves caught between competing demands: business teams need faster access to data, while compliance requirements demand stricter controls. The result is often inconsistent data quality, duplicated effort, and governance gaps that expose organizations to significant risk.

In this article, we examine how forward-thinking analytics leaders are reimagining data stewardship for distributed organizations, developing frameworks that balance accountability with agility.

What is data stewardship?

Data stewardship is the practice of managing data assets throughout their lifecycle to ensure quality, accessibility, and compliance with organizational policies and regulatory requirements. Unlike data governance, which focuses on establishing policies and frameworks, data stewardship involves the day-to-day execution of those policies through hands-on data management activities.

Data stewards serve as the operational layer of data governance, acting as custodians who monitor data quality, resolve issues, maintain metadata, and ensure data remains fit for its intended business purposes.

They bridge the gap between technical data management and business requirements, translating governance policies into practical workflows that treat data as a product, protecting its value while enabling business innovation.

In distributed organizations, data stewardship extends beyond individual databases or applications to encompass data flows across multiple systems, departments, and geographic locations. This complexity requires stewardship models that maintain consistency while adapting to local business needs and technical constraints.

The evolution of data stewardship

Traditional data stewardship emerged when most organizations maintained centralized data warehouses with clearly defined data flows and limited user communities. Stewards could manually monitor data quality, resolve issues through direct database access, and maintain comprehensive documentation about data lineage and business definitions.

This centralized model worked well for structured data environments with predictable usage patterns. Stewards typically came from IT backgrounds and focused primarily on technical data quality issues, such as completeness, accuracy, and consistency.

Business context remained secondary to technical correctness, and stewardship activities often operated independently from business processes.

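That model breaks down in distributed environments, where data flows across many systems, teams, and cloud platforms. Today's stewards need both technical skills and business acumen, working closely with domain experts to understand how data creates value while ensuring it meets enterprise standards for quality and compliance.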

This evolution has shifted stewardship from a purely technical function to a collaborative discipline that requires ongoing coordination between business and technical teams across the entire organization.

Comparing data stewardship vs data governance

Data governance and data stewardship work together but serve distinct purposes. Governance establishes the policies, standards, and frameworks for managing data; stewardship puts those policies into practice through day-to-day, hands-on data management. Understanding this difference helps organizations assign the right responsibilities to the right people and avoid the overlaps that create confusion.

The relationship between governance and stewardship becomes critical in distributed organizations. Governance bodies typically operate at the enterprise level, establishing policies that must cascade down to individual teams and domain-level practitioners across different business units, geographic regions, and technical platforms.

With modern platforms like Prophecy, self-service data access remains governed because governance controls are embedded directly into individual workflows and team processes.

Tools that enable distributed data stewardship

Effective data stewardship at scale requires technology platforms that can coordinate activities across distributed environments while maintaining visibility and control. The right tools enable stewards to monitor data quality, track lineage, and collaborate with business users without becoming bottlenecks in data workflows.

Data catalog platforms for stewardship workflows

Modern data catalog platforms serve as the operational foundation for distributed stewardship by providing centralized visibility into data assets spread across multiple systems.

These platforms automatically discover datasets, extract metadata, and map relationships between different data sources, creating a comprehensive inventory that stewards can use to understand the scope of their responsibilities.

Organizations using Databricks Unity Catalog as their primary data governance layer can leverage tools like Prophecy to extend catalog capabilities with visual stewardship workflows. This helps eliminate the complexity of managing separate catalog platforms while maintaining comprehensive metadata governance.

Features such as workflow management, task assignment, and automated notifications help stewardship teams coordinate their work and ensure that nothing falls through the cracks as data environments grow more complex.

Automated data quality monitoring tools

Manual data quality monitoring becomes impossible at enterprise scale, making automated monitoring tools essential for distributed stewardship. These platforms continuously scan data sources for quality issues, applying predefined rules and machine learning algorithms to identify anomalies, completeness problems, and consistency issues across multiple systems.

Prophecy embeds quality monitoring directly into data development workflows, enabling stewards to define quality rules visually and automatically enforce them as data moves through transformation pipelines.
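To make rule-based quality checks concrete, here is a minimal, tool-agnostic sketch in Python. It is not Prophecy's API or any catalog's API; the `QualityRule` type, the `evaluate` function, and the column names are hypothetical. It shows the basic mechanism: each rule pairs a column with a predicate, and the check counts failing rows per rule so stewards can see which rules fire most often.

```python
from dataclasses import dataclass
from typing import Any, Callable


# Hypothetical rule type for illustration; not a Prophecy or Unity Catalog API.
@dataclass(frozen=True)
class QualityRule:
    name: str
    column: str
    check: Callable[[Any], bool]  # returns True when the value passes


def evaluate(rows: list[dict[str, Any]], rules: list[QualityRule]) -> dict[str, int]:
    """Count failing rows per rule so stewards can see which rules fire most."""
    failures = {rule.name: 0 for rule in rules}
    for row in rows:
        for rule in rules:
            if not rule.check(row.get(rule.column)):
                failures[rule.name] += 1
    return failures


rules = [
    # Completeness: the value must be present.
    QualityRule("customer_id_not_null", "customer_id", lambda v: v is not None),
    # Validity: amounts must be non-negative numbers.
    QualityRule("amount_non_negative", "amount",
                lambda v: isinstance(v, (int, float)) and v >= 0),
]

rows = [
    {"customer_id": "C1", "amount": 120.0},
    {"customer_id": None, "amount": 35.5},
    {"customer_id": "C3", "amount": -10},
]

print(evaluate(rows, rules))
# prints {'customer_id_not_null': 1, 'amount_non_negative': 1}
```

In a pipeline, a step like this could run after each transformation and flag or halt the run when any count is nonzero. Machine-learning anomaly detection could sit alongside deterministic rules like these rather than replace them.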

Modern quality monitoring extends beyond basic validation rules to include business context and user behavior. By tracking how data gets used and monitoring the downstream impact of quality issues, these tools help stewards understand which problems truly affect business outcomes and which can be addressed through longer-term improvement initiatives.

Automated lineage tracking

Data lineage tracking becomes far more complex in distributed environments where data flows through multiple systems, gets transformed by different teams, and serves various business purposes. Advanced lineage platforms automatically map these complex data flows, providing stewards with comprehensive visibility into how data moves and changes throughout the organization.

Modern data integration platforms, such as Prophecy, automatically generate and maintain lineage documentation as data engineers and analysts visually build pipelines. This ensures that stewards always have current visibility into data flows without incurring additional maintenance overhead.

Automated lineage tracking enables impact analysis, helping stewards understand the downstream effects of data changes and quality issues. When problems occur, stewards can quickly identify all affected systems and users, enabling faster resolution and more effective communication about data issues.
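Impact analysis of this kind is, at its core, a graph traversal. The sketch below assumes lineage has already been captured as a simple adjacency map (the table names are invented for illustration) and uses breadth-first search to list every downstream asset affected by a problem in one source.

```python
from collections import deque

# Hypothetical lineage graph: each key feeds the assets listed as its value.
LINEAGE: dict[str, list[str]] = {
    "crm.customers": ["staging.customers_clean"],
    "erp.orders": ["staging.orders_clean"],
    "staging.customers_clean": ["marts.customer_360", "marts.churn_features"],
    "staging.orders_clean": ["marts.customer_360", "marts.revenue_daily"],
    "marts.customer_360": ["dashboards.exec_kpis"],
    "marts.revenue_daily": ["dashboards.exec_kpis", "reports.finance_close"],
}


def downstream_impact(source: str, lineage: dict[str, list[str]]) -> list[str]:
    """Return every asset reachable from `source`, in breadth-first order."""
    seen: set[str] = {source}
    order: list[str] = []
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:  # visit shared descendants only once
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


print(downstream_impact("erp.orders", LINEAGE))
# prints ['staging.orders_clean', 'marts.customer_360', 'marts.revenue_daily',
#         'dashboards.exec_kpis', 'reports.finance_close']
```

Production lineage systems usually track column-level edges and richer metadata, but the reachability question a steward asks during an incident is the same.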

Modern lineage platforms integrate with development and deployment workflows to maintain accurate lineage information as data systems evolve. This integration ensures that lineage tracking doesn't become a separate maintenance burden but instead stays current with the actual data flows that stewards need to monitor and manage.

Integration platforms for governance orchestration

Distributed stewardship requires coordination across multiple tools, systems, and teams, making integration platforms essential for effective governance orchestration. These platforms connect data catalogs, quality monitoring tools, lineage systems, and business applications to create unified workflows that span the entire data lifecycle.

Prophecy exemplifies this approach by providing unified stewardship experiences across hybrid cloud environments, enabling teams to enforce governance policies consistently. Stewards can define policies once and have them applied automatically across multiple systems, reducing manual effort while ensuring consistency.

This automation frees stewards to focus on higher-value activities, such as collaborating with business users and improving data practices, rather than managing repetitive administrative tasks.

Overcoming critical challenges that turn data stewardship into organizational bottlenecks

Modern data stewardship faces unprecedented complexity as organizations struggle to maintain quality and governance across rapidly evolving, distributed data environments. These challenges require innovative approaches that balance automation with human expertise while retaining the business focus that makes stewardship valuable.

Stewardship teams overwhelmed by data volume and velocity

The sheer scale of modern data environments creates an impossible workload for traditional stewardship approaches. Organizations generate terabytes of data daily across hundreds of systems, while business demands for faster insights pressure stewardship teams to accelerate their quality assurance processes.

Manual monitoring and validation simply cannot keep pace with this volume and velocity.

This challenge intensifies when stewardship teams become bottlenecks in data workflows. Business teams wait for stewardship approval while opportunities slip away, creating pressure to bypass governance processes entirely. The result is often a forced choice between speed and quality that undermines both business agility and data integrity.

The solution lies in intelligent automation that amplifies human stewardship capabilities rather than replacing them. Modern platforms use machine learning to identify the most critical quality issues, automatically handle routine validation tasks such as data auditing, and route complex problems to the appropriate human experts.

This approach lets stewardship teams focus their expertise where it creates the most value while still ensuring comprehensive coverage of data quality requirements.

Successful organizations implement tiered stewardship models, where automated systems handle routine monitoring and validation, while human stewards focus on business context, policy interpretation, and resolving complex problems.

This division of labor scales stewardship capabilities without scaling headcount, enabling organizations to maintain quality standards even as data volumes grow exponentially.
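A tiered model boils down to a routing decision. This hypothetical sketch sends routine, small-scale issues to automated handling and escalates everything else to the owning domain's steward queue; the rule categories and the 1,000-row cutoff are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass


# Hypothetical issue record; fields and thresholds are illustrative assumptions.
@dataclass
class QualityIssue:
    dataset: str
    domain: str          # owning business domain, e.g. "finance"
    rule_type: str       # e.g. "null_check", "definition_conflict"
    affected_rows: int


# Rule types assumed safe for automated handling on their own.
ROUTINE_RULES = {"null_check", "format_check", "duplicate_check"}
AUTO_ROW_LIMIT = 1_000  # assumed cutoff above which a human should look


def route(issue: QualityIssue) -> str:
    """Tier 1: automated handling. Tier 2: the domain steward's queue."""
    if issue.rule_type in ROUTINE_RULES and issue.affected_rows <= AUTO_ROW_LIMIT:
        return "auto"
    return f"steward:{issue.domain}"


issues = [
    QualityIssue("sales.orders", "sales", "null_check", 12),
    QualityIssue("finance.ledger", "finance", "null_check", 50_000),
    QualityIssue("marketing.leads", "marketing", "definition_conflict", 3),
]
for issue in issues:
    print(issue.dataset, "->", route(issue))
```

Routing by domain, rather than to a single central queue, is what keeps human review from becoming the bottleneck described above.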

Fractured definitions across business domain silos

Different business units often develop their own data practices, definitions, and quality standards, creating inconsistencies that undermine enterprise-wide analytics and reporting. What marketing considers a "customer" may differ from sales or support definitions, leading to conflicting metrics and fragmented insights across the organization.

This problem becomes particularly acute in large, geographically distributed organizations where local business requirements may conflict with global standards. Regional teams require flexibility to adapt to local market conditions, regulations, and business practices; however, this flexibility can compromise data consistency and complicate enterprise-wide analysis.

The complexity multiplies when mergers and acquisitions introduce entirely different data cultures and technical systems into the organization. Harmonizing stewardship practices across previously independent business units requires a careful balance between standardization and local autonomy.

Effective solutions center on federated stewardship models that establish global standards while allowing local adaptation within defined boundaries. Organizations create enterprise data definitions and quality standards that provide consistency for critical business metrics, while permitting local variation for region-specific requirements that don't affect enterprise reporting.

Technology platforms enable this balance by providing centralized policy management with distributed enforcement. Stewards can define global standards that automatically apply across all business units, while maintaining the flexibility to add local requirements that enhance rather than contradict enterprise policies.

This approach preserves the business context that makes stewardship valuable while ensuring consistency where it matters most.
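Federated stewardship can be read as a merge rule: local policy may extend or tighten enterprise policy but never loosen it. The sketch below encodes that constraint, assuming a simplified policy model in which each rule maps to a minimum pass rate; the `effective_policy` function and all rule names and thresholds are hypothetical.

```python
# Hypothetical policy model: each rule maps to a minimum pass rate (0.0 to 1.0).
GLOBAL_POLICY: dict[str, float] = {
    "customer.id_completeness": 0.99,
    "revenue.currency_valid": 1.00,
}


def effective_policy(global_policy: dict[str, float],
                     local_policy: dict[str, float]) -> dict[str, float]:
    """Merge a regional policy onto the global one.

    Local teams may add new rules or tighten existing thresholds,
    but may not loosen an enterprise-wide rule.
    """
    merged = dict(global_policy)
    for rule, threshold in local_policy.items():
        floor = global_policy.get(rule)
        if floor is not None and threshold < floor:
            raise ValueError(
                f"{rule}: local threshold {threshold} is below global {floor}")
        merged[rule] = threshold
    return merged


# A region adds a consent rule and tightens an existing completeness rule.
emea = {"customer.gdpr_consent_present": 1.00, "customer.id_completeness": 0.995}
print(effective_policy(GLOBAL_POLICY, emea))
# prints {'customer.id_completeness': 0.995, 'revenue.currency_valid': 1.0,
#         'customer.gdpr_consent_present': 1.0}
```

Rejecting a looser local threshold, instead of silently overriding it, is the design choice that keeps enterprise reporting consistent while still letting a region add requirements of its own.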

Technical complexity creating business accessibility barriers

Modern data environments often require deep technical expertise to understand data lineage, transformation logic, and quality requirements. Yet effective stewardship depends on the business context that only domain experts possess. This creates a gap between technical stewards, who understand the systems, and business stewards, who understand the value and meaning of the data.

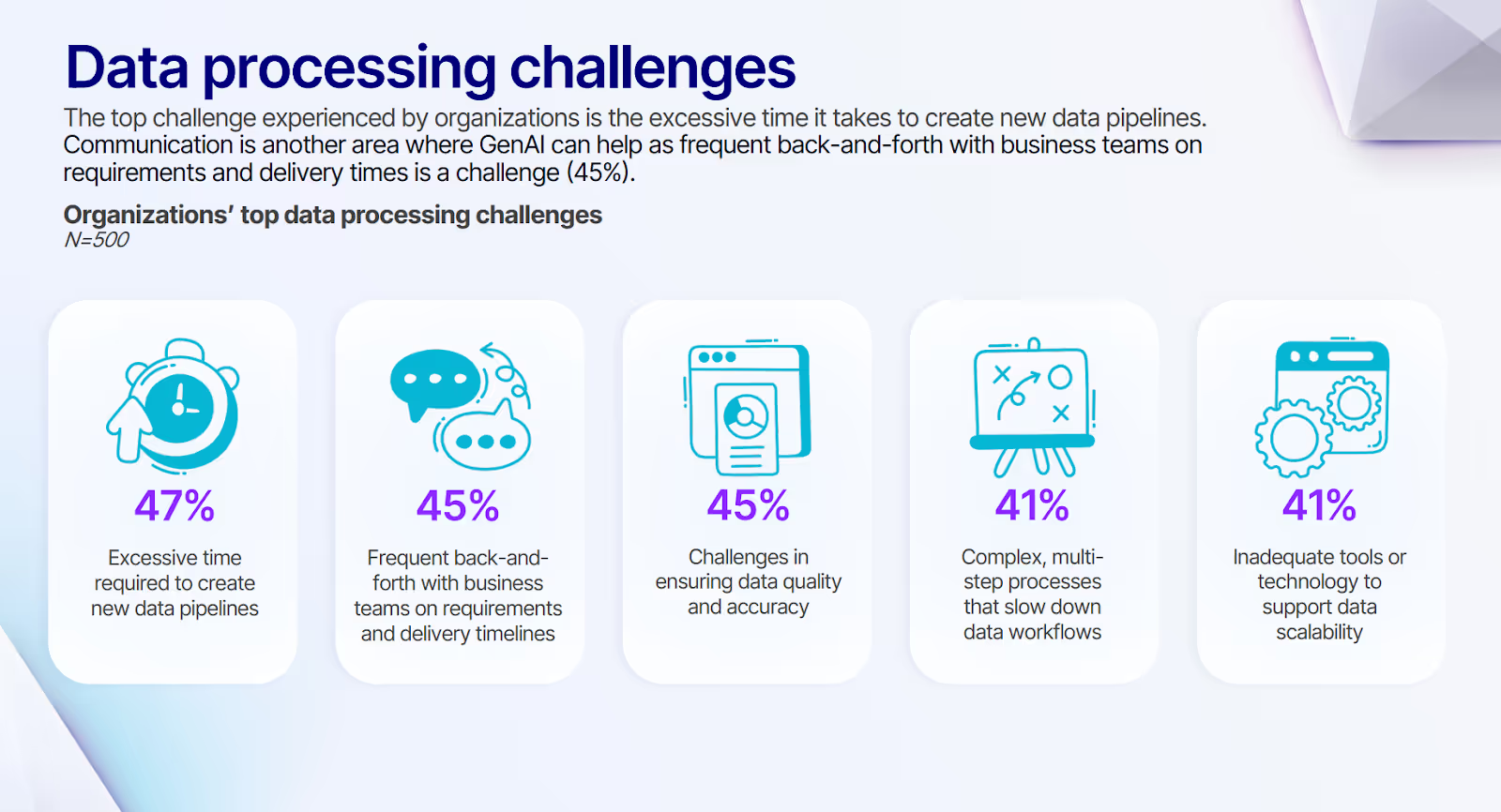

Our survey data reveals the scope of this challenge: organizations report frequent back-and-forth between business and technical teams over requirements and delivery timelines, and 41% cite complex, multi-step processes as a significant barrier that slows down data workflows.

These communication barriers directly impact stewardship effectiveness. Traditional approaches that separate technical and business stewardship create bottlenecks that slow issue resolution and reduce the effectiveness of both groups. Technical stewards struggle to prioritize issues without business context, while business stewards cannot effectively communicate requirements without understanding technical constraints.

The challenge intensifies with self-service analytics platforms that enable business users to create their own data transformations and analyses. While these platforms democratize data access, they also multiply the potential sources of quality issues and governance exceptions that stewards must monitor and manage.

Successful organizations develop collaborative stewardship models that integrate technical and business expertise throughout the data lifecycle. Rather than relying on handoffs between separate teams, these models embed business stewards within technical workflows and provide technical stewards with business context and decision-making authority.

Modern platforms like Prophecy support this collaboration through visual interfaces that make technical data lineage and transformation logic accessible to business users, while providing business context and impact analysis that helps technical stewards prioritize their work.

This unified approach ensures that stewardship decisions consider both technical feasibility and business value, leading to more effective governance outcomes.

Governance processes stifling innovation velocity

Traditional governance processes often hinder business innovation by requiring multiple approvals, extensive documentation, and lengthy review cycles before data can be used for new purposes. In fast-paced business environments, these delays can erode competitive advantages and diminish the value of data-driven insights.

The challenge becomes more complex in distributed organizations where different business units have varying risk tolerances and operational requirements. What works for financial reporting may be too restrictive for marketing experimentation, while what's appropriate for internal analytics may be insufficient for customer-facing applications.

Organizations often fall into a trap in which governance becomes either too rigid, stifling innovation, or too permissive, creating quality and compliance risks. Finding the right balance requires governance frameworks that adapt to different business contexts while maintaining essential standards and controls, along with stronger data literacy among business users.

Effective solutions implement risk-based governance that adjusts controls based on data usage context and business impact. Low-risk internal analyses can proceed with minimal oversight, while high-impact customer-facing applications receive more rigorous review and validation. This differentiated approach ensures that governance efforts focus where they create the most value.

Automation plays a crucial role in scaling governance without sacrificing agility. Automated policy enforcement handles routine compliance checks, while workflow automation streamlines approval processes for cases that require human review. This combination reduces the time and effort needed for governance while maintaining the quality and consistency that make it valuable.
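Risk-based governance can be expressed as a small decision function that maps a data-use request to a control tier. The categories, tier names, and rules below are assumptions chosen for illustration; a real organization would derive them from its own risk policy.

```python
from enum import Enum


class Control(Enum):
    # Hypothetical control tiers, from lightest to heaviest.
    AUTO_APPROVE = "automated policy checks only"
    PEER_REVIEW = "automated checks plus one domain steward sign-off"
    FULL_REVIEW = "automated checks plus steward and compliance review"


AUDIENCES = {"internal", "customer_facing"}  # assumed usage contexts
IMPACTS = {"low", "medium", "high"}          # assumed impact levels


def required_control(audience: str, contains_pii: bool, business_impact: str) -> Control:
    """Return the control tier a data-use request requires."""
    if audience not in AUDIENCES:
        raise ValueError(f"unknown audience: {audience}")
    if business_impact not in IMPACTS:
        raise ValueError(f"unknown impact level: {business_impact}")
    # Customer-facing use, or high-impact use of personal data, gets full review.
    if audience == "customer_facing" or (contains_pii and business_impact == "high"):
        return Control.FULL_REVIEW
    # Any personal data or non-trivial impact needs a steward's sign-off.
    if contains_pii or business_impact != "low":
        return Control.PEER_REVIEW
    # Low-risk internal analysis proceeds with automated checks alone.
    return Control.AUTO_APPROVE


print(required_control("internal", False, "low").name)  # prints AUTO_APPROVE
```

Only requests that land in the two review tiers need a human in the loop, which is where workflow automation for approvals would apply.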

Hybrid cloud environments creating coordination chaos

Modern organizations operate data platforms that span on-premises systems, multiple cloud providers, and various software-as-a-service applications. Each environment has its own security model, data formats, and management interface, making coordinated stewardship extremely challenging.

Data often flows between these environments for different business purposes, creating complex lineage relationships that cross system boundaries. Stewards must understand how data transforms as it moves between environments, ensuring that quality and governance standards are consistently applied, regardless of where the data resides.

The problem intensifies when different environments have different stewardship tools and processes. What works for on-premises databases may not apply to cloud data lakes, while SaaS applications often provide limited stewardship capabilities. This fragmentation forces stewards to work with multiple interfaces and processes, increasing complexity and reducing efficiency.

Cloud migration projects further complicate stewardship by creating temporary hybrid states where data exists in multiple locations simultaneously. During these transitions, stewards must maintain quality and governance standards across both existing and new environments while minimizing disruptions to business operations.

Successful approaches focus on creating unified stewardship experiences that abstract away the complexity of underlying infrastructure. Modern platforms provide a unified interface for managing data quality, lineage, and governance across hybrid environments, enabling stewards to focus on business requirements rather than technical implementation details.

Integration platforms become essential for coordinating stewardship activities across different environments. These platforms connect disparate tools and systems to create consistent workflows that span the entire data landscape, ensuring that stewardship processes work effectively regardless of where data physically resides.
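One common way to build a unified stewardship experience is the adapter pattern: define one interface for the operations stewards need, implement it once per environment, and write checks against that interface. In this sketch, `StewardshipBackend`, `InMemoryBackend`, and `completeness` are hypothetical names, and the in-memory adapter stands in for real connectors to on-premises databases, cloud lakes, or SaaS APIs.

```python
from typing import Protocol


class StewardshipBackend(Protocol):
    """Hypothetical common interface each environment adapter implements."""
    name: str

    def row_count(self, table: str) -> int: ...

    def null_count(self, table: str, column: str) -> int: ...


class InMemoryBackend:
    """Stand-in adapter; a real one would wrap a warehouse, lake, or SaaS API."""

    def __init__(self, name: str, tables: dict[str, list[dict]]):
        self.name = name
        self._tables = tables

    def row_count(self, table: str) -> int:
        return len(self._tables[table])

    def null_count(self, table: str, column: str) -> int:
        return sum(1 for row in self._tables[table] if row.get(column) is None)


def completeness(backend: StewardshipBackend, table: str, column: str) -> float:
    """The same check, written once, regardless of where the table lives."""
    total = backend.row_count(table)
    if total == 0:
        return 1.0  # treat an empty table as vacuously complete
    return 1 - backend.null_count(table, column) / total


on_prem = InMemoryBackend("on_prem_db", {
    "customers": [{"email": "a@example.com"}, {"email": None}],
})
cloud = InMemoryBackend("cloud_lake", {
    "customers": [{"email": "b@example.com"}, {"email": "c@example.com"},
                  {"email": "d@example.com"}, {"email": None}],
})
for backend in (on_prem, cloud):
    print(backend.name, completeness(backend, "customers", "email"))
```

Stewards define the check once; adding a new environment means writing one more adapter rather than rewriting every check.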

Orchestrate enterprise stewardship without the overhead

Enterprise data stewardship doesn't have to choose between comprehensive governance and operational agility. Organizations that successfully scale stewardship combine intelligent automation with collaborative workflows that put the right expertise in the right place at the right time.

Here’s how Prophecy's data integration platform addresses the fundamental challenge of distributed stewardship:

- Visual stewardship workflows that make complex data lineage and transformation logic accessible to business stewards while providing technical teams with the precision they need for quality assurance and governance enforcement

- Automated quality monitoring integrated directly into data development workflows, catching issues before they impact business operations while routing complex problems to the appropriate domain experts for resolution

- Federated governance capabilities that enforce enterprise standards while allowing business units to adapt processes to their specific requirements, ensuring consistency without sacrificing local agility

- Collaborative stewardship environments where business stewards can work directly with data engineers on the same assets, eliminating the translation errors and delays that plague traditional handoff-based approaches

- Unified control plane that provides consistent stewardship experiences across hybrid cloud environments, ensuring governance standards apply regardless of where data resides or how it's processed

- Workflow automation for routine stewardship tasks like quality validation, metadata updates, and issue routing, freeing human experts to focus on business context and complex problem resolution

To move beyond fragmented stewardship processes that struggle to keep pace with business demands, explore "Self-Service Data Preparation Without the Risk" to learn how to build stewardship frameworks that scale with your organization's growth.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.
