AI-native analytics & automation platform

Prepare data and analyses natively on cloud data platforms with governance

Self-Service with Governance

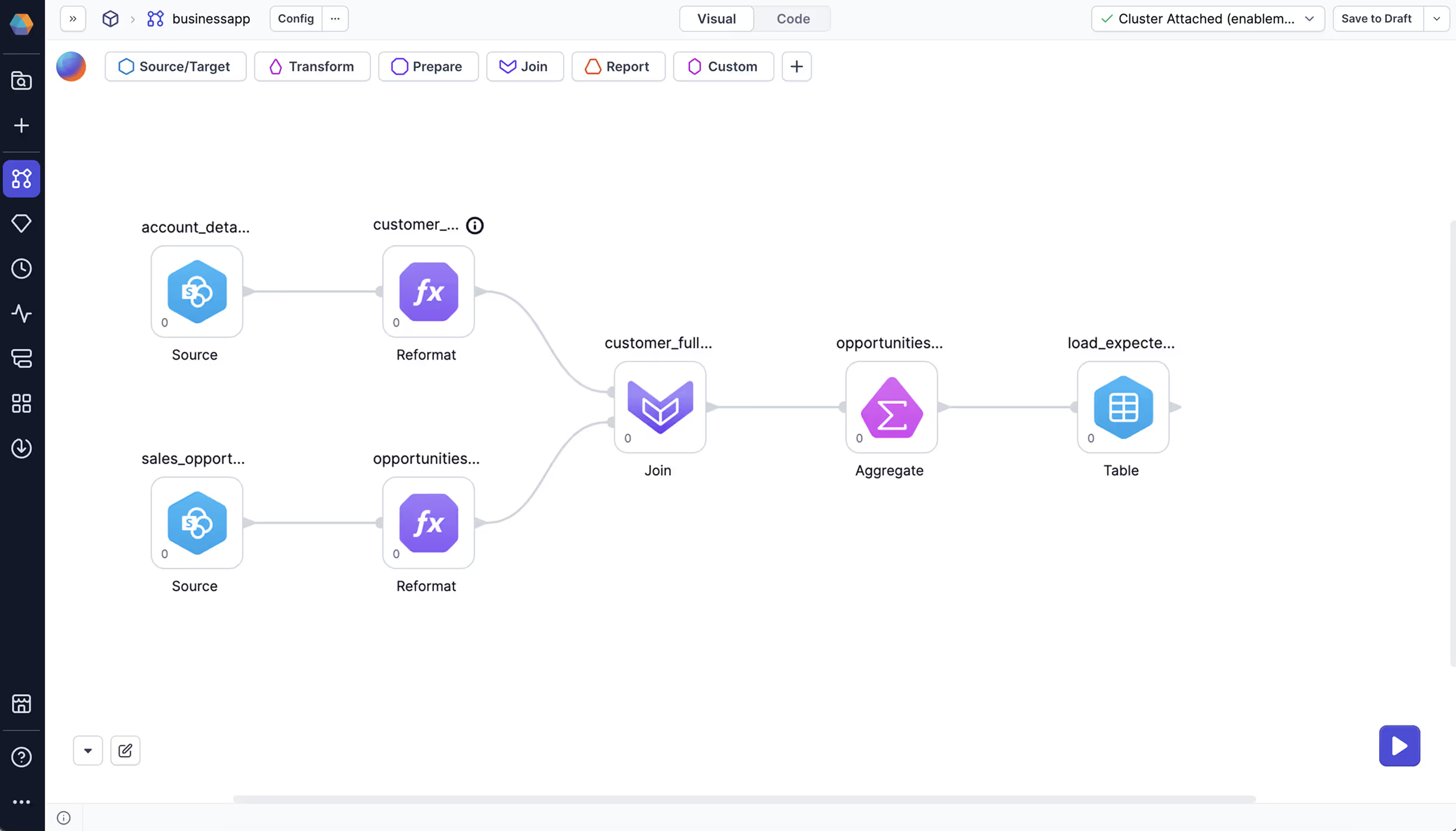

Prophecy gives business data users an AI-powered visual designer for preparing data for analytics and AI. Data analysts and data scientists can build and deploy data pipelines that read, transform, and write data for business intelligence, reporting, and AI, without relying on the data platform team for day-to-day work.

The pipelines are fully aligned with the data platform team’s architecture, running at scale while adhering to enterprise-grade access controls. Under the hood, they follow software engineering best practices, including Git integration, versioning, and automated testing. Built-in controls ensure security, quality, and cost management.

Connect with Prophecy at the Data and AI Summit

San Francisco, Moscone Center | June 26 - 29, 2023

Be the Data Hero

Business data team

Prophecy empowers business data users (data analysts and data scientists) to self-serve and build data pipelines that run at scale on your cloud data platform.

You’ll get faster access to data, run a well-governed system, and cut back-and-forth iteration with the data platform team. We’ll also import your existing footprint from your desktop product.

Data platform team

Give your consumers in business data teams self-serve data preparation on your data platform, with governance and controls built in.

Your team can also use Prophecy to build pipelines visually on Spark, increasing productivity.

You’ll reduce your backlog, control costs, strengthen governance, and have happier internal customers.