How to Stop Playing Data Detective and Build Lineage That Actually Works

Discover why data lineage implementations fail and the solutions that fix them.

Picture this: your critical revenue dashboard is showing numbers that don't add up, and you need to trace back through dozens of data transformations across multiple systems to find the root cause. Sound familiar?

For data engineers and analysts, maintaining accurate, up-to-date, end-to-end lineage information has become one of the most challenging aspects of modern data management. As data ecosystems grow increasingly complex, manual tracking becomes nearly impossible.

When teams can't quickly understand where data came from or how it was transformed, troubleshooting becomes a nightmare, compliance audits turn stressful, and business users lose trust in their data.

The good news? You don't have to accept data lineage as an unsolvable problem.

This guide covers what data lineage is, why it's critical for compliance and quality assurance, practical implementation techniques, common challenges, and how modern solutions can help you establish lineage that actually works.

What is data lineage?

Data lineage is the process of tracking the flow of data over time, providing a clear understanding of where the data originated, how it has been transformed, any dependencies, and where it is stored throughout its lifecycle.

It is a comprehensive map of your data's journey from its initial source through every transformation, calculation, and storage point until it reaches its final destination in reports, dashboards, or applications.

For large enterprises managing thousands of data sources, hundreds of transformation processes, and dozens of different systems, data lineage becomes the foundation that makes everything else possible—from regulatory compliance to root cause analysis to impact assessment for system changes.

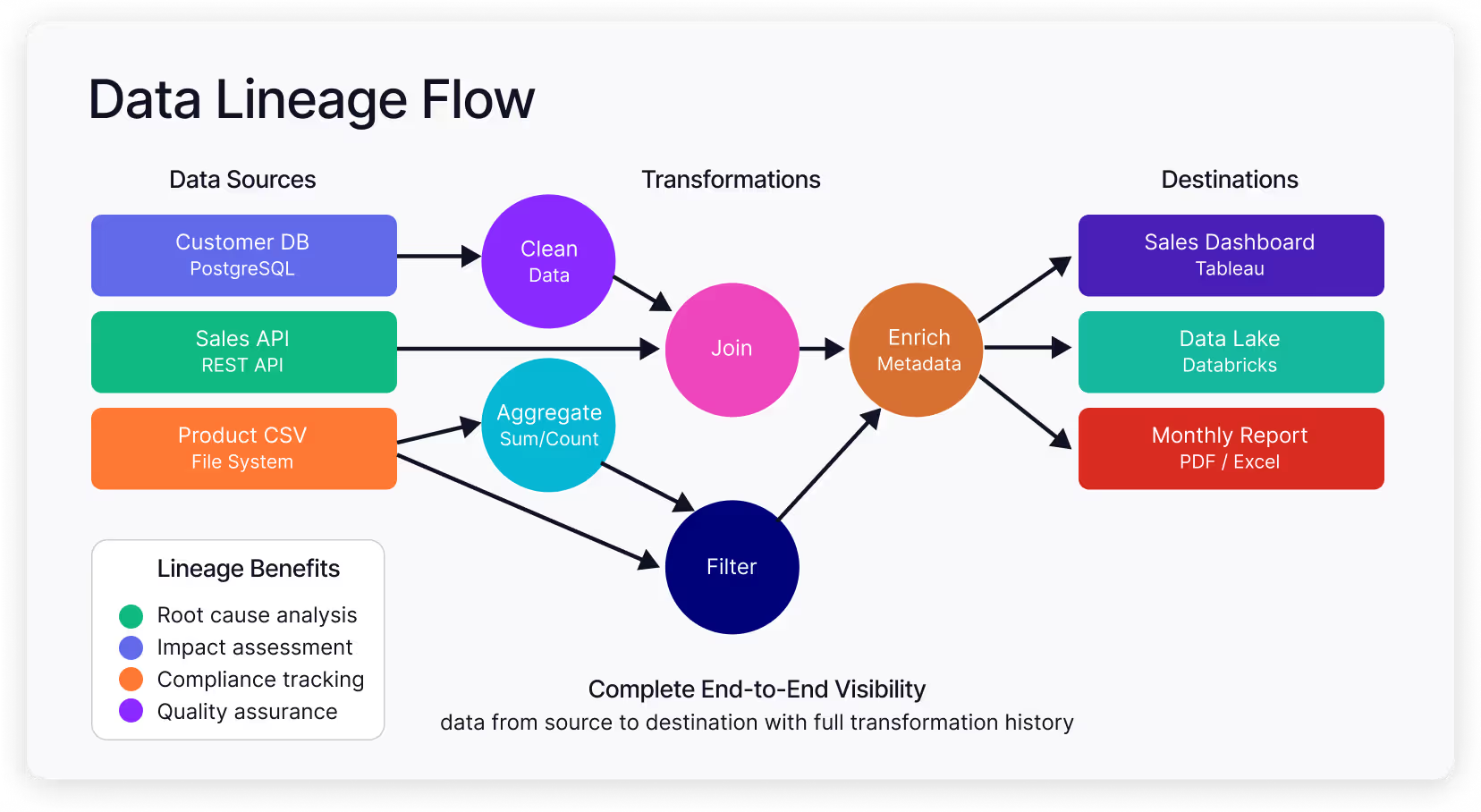

Here´s a data lineage diagram that visually illustrates the process:

Comparing data lineage, data provenance, and data traceability

While these terms are often used interchangeably, they represent distinct but complementary concepts in data management.

- Data lineage focuses on mapping the journey of data through systems, with a primary emphasis on data flow and transformations. It answers questions like "Where did this data come from?" and "What transformations were applied?"

- Data provenance provides a comprehensive historical record with contextual information about data's creation, modifications, and usage. It goes beyond tracking movement to include who created the data, when modifications occurred, what business rules were applied, and the broader context of data usage decisions.

Data traceability focuses on tracking specific data elements across systems and processes, enabling granular tracking of individual data points. It's useful for compliance scenarios where you need to trace specific records or values through complex transformation chains.

Why is data lineage important?

Effective data lineage delivers multiple high-value benefits for organizations seeking to improve governance, quality, and compliance.

Rather than being just another IT requirement, lineage becomes a strategic enabler that impacts everything from daily operations to regulatory readiness.

- Faster root cause analysis and troubleshooting: Clear data lineage enables teams to quickly trace the origins and transformations of data, making it easier to identify, diagnose, and resolve data quality issues or pipeline failures.

- Impact analysis for change management: Transparent data lineage shows which downstream reports, dashboards, or processes will be affected by changes to upstream data sources or transformations.

- Improved data quality and trust: Understanding the full journey of data helps organizations detect errors, inconsistencies, and redundancies, building trust in data products and empowering users to rely on data quality metrics for critical business decisions.

- Regulatory compliance and audit readiness: Data lineage provides auditable records of data flows, supporting compliance with standards like GDPR, HIPAA, and financial reporting requirements.

- Enhanced collaboration and data literacy: A clear visual map of data flows and visual data pipelines makes it easier for technical and non-technical stakeholders to understand and collaborate on data projects.

- Operational efficiency and cost savings: Automated data lineage reduces manual mapping and documentation while helping identify redundant processes and data assets.

- Support for cloud migration and modernization: Lineage helps organizations prioritize essential data assets and understand dependencies during migration or modernization initiatives, supporting effective data migration strategies, data lakehouse creation, and adopting lakehouse architecture.

Data lineage techniques

Organizations employ various techniques to implement data lineage, each with different levels of granularity, automation, and use cases.

The key is understanding which approaches work best for your specific data environment and business needs.

- Table-level lineage: Tracks how entire tables relate and move through the data environment, providing a high-level overview of data flows but lacking granularity about individual data elements.

- Column-level lineage: Offers detailed tracking at the column or field level, showing exactly how each piece of data is transformed from source to destination, which is essential for root cause analysis and impact assessment.

- Query parsing: Analyzes SQL queries and ETL scripts to determine how data is selected, combined, transformed, and stored by examining the actual code that manipulates the data.

- Log-based lineage tracking: Uses database logs (e.g., write-ahead logs, binary logs) to infer how data changes over time, particularly useful when direct query parsing is not feasible.

- Instrumentation in data pipelines: Embeds lineage tracking directly into ETL/ELT pipelines using frameworks like Prophecy, capturing dependencies and execution history as part of the pipeline to provide automated lineage as data moves and transforms.

- Manual lineage mapping: Documents data flows and transformations manually, especially during system migrations or with legacy systems, which can be augmented with APIs and custom scripts for improved accuracy.

- Metadata management and tagging: Collects, manages, and annotates metadata at various stages of the data lifecycle to track origins, transformations, and destinations, with tagging adding descriptive context to data elements.

- Version control and audit trails: Tracks changes to data and schemas over time using version control systems, ensuring historical lineage is preserved and allowing for easy auditing and rollback.

How to overcome critical data lineage challenges

Implementing comprehensive data lineage is not without challenges, particularly in large, complex organizations with diverse data ecosystems.

The goal isn't just to implement data lineage but to create a sustainable, scalable lineage practice that delivers ongoing value to the organization.

Let's examine each major challenge and how it can be overcome with the right approach and technology.

Complexity of data transformations

Data engineers often deal with intricate ETL/ELT workflows, custom scripts, and evolving data models. Tracing how data moves and transforms, especially at the column or field level, can be extremely difficult, particularly when custom code is involved or when pipelines span multiple systems.

The solution is to implement visual, drag-and-drop interfaces for building data pipelines that make complex transformations visible and understandable. Your platform should represent each transformation step clearly, enabling an intuitive understanding of data movement while automatically capturing lineage at both table and column levels.

Look for systems that integrate AI-assisted features to help with recommendations, code generation, and error handling, which further simplify complex transformations.

This approach ensures even sophisticated data processing logic remains traceable and maintainable, turning what was once a black box into a transparent, documented process, facilitating ETL modernization.

Platforms like Prophecy provide these capabilities, combining visual pipeline design with automated lineage capture to solve the complexity challenge. When considering ETL tools selection, look for these features.

Lack of standardization and metadata gaps

Data often comes from disparate sources with inconsistent formats or naming conventions. Incomplete or inaccurate metadata makes it hard to track the true origin and transformation logic behind data, leading to confusion and errors.

The key is to enforce consistent naming conventions and standardized approaches to data transformation through automated systems. Your organization needs tools that automatically generate metadata as pipelines are built, filling gaps that would otherwise exist manually.

Implement solutions that integrate with enterprise data catalogs and connect business glossaries with technical lineage information, creating a single source of truth for data knowledge and improving metadata management.

This approach supports organization-wide naming standards for data assets and ensures consistency across different departments and systems, eliminating the confusion that comes from inconsistent data documentation.

Prophecy's visual interface and automated metadata generation directly address these standardization challenges.

Limited automation and scalability

Manual lineage tracking is slow, error-prone, and doesn't scale as data volumes grow. Many organizations struggle to maintain accurate, up-to-date lineage across large, interconnected systems, especially when relying on outdated or fragmented tools.

The solution requires leveraging cloud-native platforms that can scale infrastructure seamlessly as data volume and complexity grow. You need systems that automate lineage extraction and reporting, eliminating manual tracking efforts.

Look for tools that include automated lineage extractors that can retrieve and export lineage information from projects and pipelines while supporting integration with CI/CD systems. This automation ensures lineage information stays current as systems evolve and captures changes to data pipelines in real-time, making scalability a built-in feature rather than an ongoing challenge.

Prophecy's cloud-native architecture and automated lineage extractor deliver exactly this level of automation and scalability.

Tool integration and collaboration issues

Siloed tools and poor integration across the data stack result in fragmented lineage information, making it difficult for teams to collaborate and maintain a unified view of data flows.

Implement platforms that support simplified version control and are natively integrated with cloud data intelligence platforms, enabling centralized visibility and control through self-service data governance. Use systems that allow analysts to hand off pipelines to engineers for review and optimization, streamlining workflows across departments.

Prioritize tools with Git integration that track all pipeline changes, supporting versioning, collaboration, and rollback capabilities. This approach allows teams to always audit how and when data transformations changed over time, creating a unified view that bridges departmental silos and ensures effective governance.

Prophecy's native integration with platforms like Databricks and built-in collaboration features solve these integration challenges effectively.

Compliance and audit pressures

Regulatory requirements (like GDPR, HIPAA) demand robust lineage for audits and data governance. Without automated, end-to-end tracking, compliance becomes costly and stressful.

Install centralized controls for data access, encryption, and lineage that ensure compliance and auditability across all data flows. Your organization needs a governance-first approach that enforces quality standards and automates critical checks, such as data profiling and lineage validation.

Look for systems that enable automated delivery of lineage and error reports via email or as code commits in version control systems, supporting proactive monitoring and rapid response to compliance issues.

Prophecy's integration with Unity Catalog and governance-first design directly address these compliance requirements, supported by robust data governance models.

Resource constraints and bottlenecks

The shortage of skilled data engineers means that business users often wait for pipeline changes, creating bottlenecks and slowing down data-driven decision-making.

The solution is to democratize data engineering through visual, low-code development that allows a wider range of users—including business analysts—to participate in building and modifying data pipelines. This reduces dependency on scarce data engineering resources while maintaining quality standards.

Implement AI-assisted visual development with enterprise-grade code generation that enables self-service analytics while maintaining governance standards.

Your platform should be intuitive enough for data analysts to use while extending critical capabilities, allowing non-technical users to interact with lineage diagrams to answer questions about data sources, quality, and usage without IT intervention.

Prophecy's visual interface and self-service capabilities directly address these resource constraint challenges.

Gain visibility with the right data lineage tool

As organizations seek to mature their data practices, they need tools that not only solve technical challenges but also democratize access to data and insights across the business.

The solution requires platforms designed specifically to address the dual needs of technical data teams and business stakeholders. Here's how Prophecy addresses these needs:

- Centralized governance and compliance: Integrated with Databricks Unity Catalog, Prophecy provides centralized controls for data access, encryption, and lineage, ensuring compliance and auditability across all data flows

- Automated, end-to-end lineage tracking: Prophecy automatically captures and visualizes data lineage at both table and column levels as users build pipelines, making it easy to trace data origins, transformations, and destinations without manual effort

- Visual, low-code pipeline design: The drag-and-drop interface lets users design, inspect, and modify data pipelines visually, with each transformation step (or "gem") represented, enabling an intuitive understanding of data movement for both technical and non-technical users

- Version control and collaboration: All pipeline changes are tracked in Git, supporting versioning, collaboration, and rollback, so teams can always audit how and when data transformations changed over time

- Real-time observability and error prevention: Prophecy provides at-a-glance data profiles and real-time pipeline observability, allowing users to monitor lineage, catch failures early, and prevent disruptions before they impact downstream analytics or reporting.

Data transformation continues to occupy the majority of enterprise data professionals' time. Explore the future of data transformation, powered by unified visual and code development.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The AI Data Prep & Analysis Opportunity

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar