Building a Self-Service Data Governance Framework in Databricks With Prophecy

Stop choosing between control and access. Learn how to build a robust Databricks governance framework that enables true self-service analytics without compromising security, powered by Prophecy.

Organizations face a frustrating choice: technical teams need to maintain security, compliance, and quality standards, while business users demand faster access to insights without technical barriers.

Traditional approaches to data governance have created a false dichotomy: either lock down data (creating bottlenecks) or allow ungoverned access (creating security, compliance, and data quality risks). Neither option adequately serves modern enterprises that need both agility and control.

As Joe Greenwood, VP of Global Data Strategy at Mastercard, emphasizes, "The challenge isn't just providing access to data—it's providing access within a framework that maintains security, compliance, and quality standards”. Organizations that solve this paradox gain a significant competitive advantage through faster, more confident decision-making.

In this article, we explore how to architect a robust governance framework in Databricks that enables true self-service analytics without compromising data integrity or security, leveraging Prophecy to make governance accessible to both technical and non-technical stakeholders.

What is a self-service data governance framework?

A self-service data governance framework is a structured approach that enables non-technical users to access, prepare, and analyze data independently while automatically enforcing organizational policies, security controls, and quality standards.

Unlike traditional governance models that rely on gatekeepers and manual approvals, modern data governance models embed governance into the data access process itself, making it invisible to end users while still maintaining appropriate controls.

Why traditional governance self-service frameworks fall short

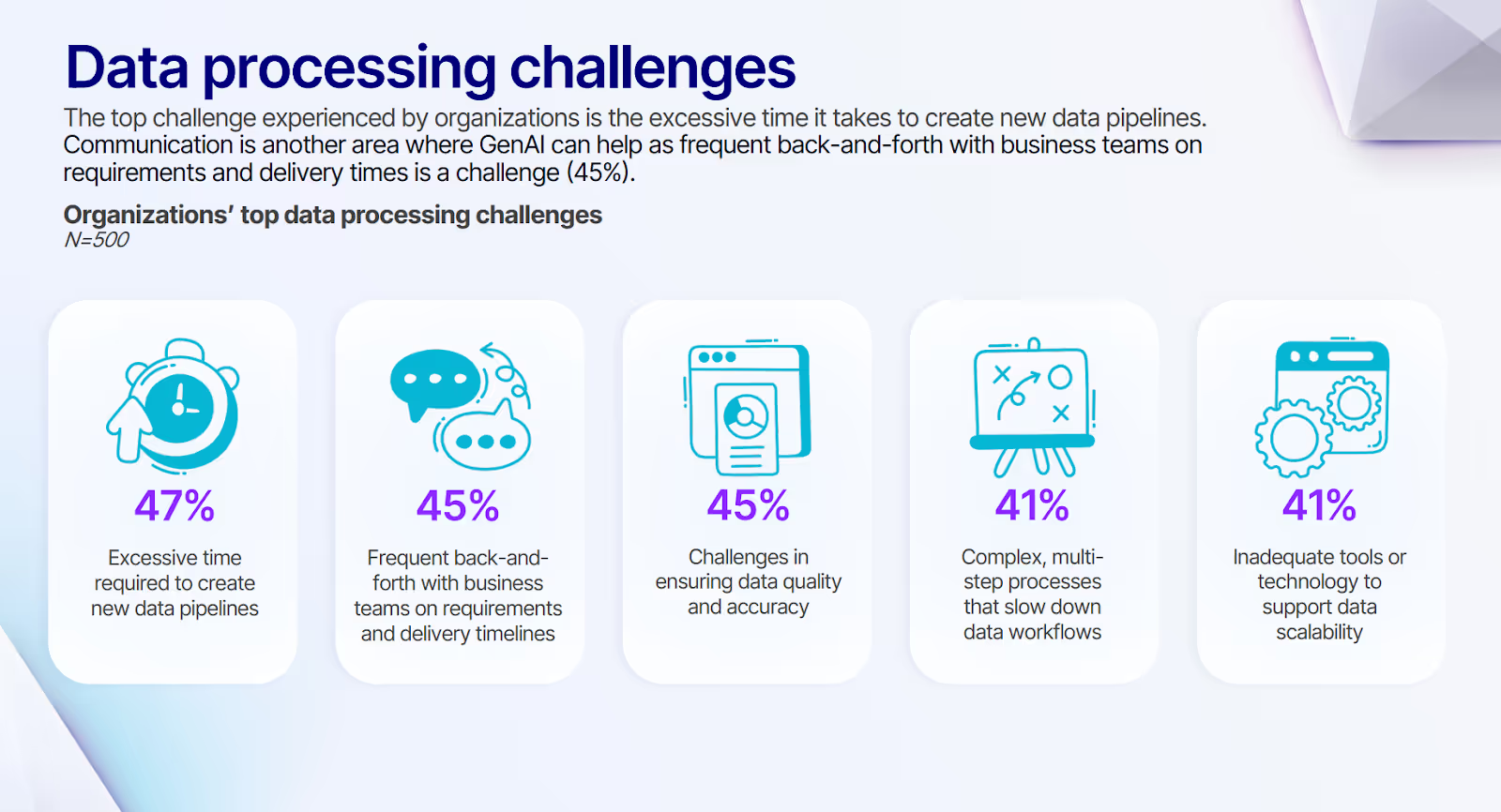

According to our survey of data leaders in 500 organizations, 41% cite complex, multi-step processes that slow down data workflows as their top data processing challenge. Another 45% report frequent back-and-forth with business teams on requirements as a major hurdle to timely data access.

These challenges stem from governance approaches that weren't designed for self-service environments:

- Manual gatekeeping creates bottlenecks as technical teams become overwhelmed with access requests

- Static policies fail to adapt to changing business needs and data usage patterns

- Disconnected tools create governance gaps as data moves between systems

- Technical interfaces make governance inaccessible to the business users who need to understand it

- Reactive monitoring catches governance issues after they occur rather than preventing them

These limitations force organizations into an impossible choice: maintain rigorous governance (and accept the resulting bottlenecks) or prioritize speed (and accept the resulting risks). Organizations must find ways of balancing self-service analytics and compliance to meet these competing demands.

Effective self-service governance requires balancing three key elements:

- Accessibility - Providing intuitive interfaces that non-technical users can navigate independently

- Enforcement - Automatically applying appropriate policies without requiring manual intervention

- Transparency - Maintaining visibility into how data is being accessed and used throughout the organization

When appropriately implemented, these key elements constitute a framework that allows organizations to democratize data access without sacrificing governance, accelerating insights while maintaining security, compliance, and quality standards.

Seven components of an effective self-service data governance framework in Databricks

Building an effective self-service governance framework in Databricks requires careful attention to seven critical components. Each component addresses specific governance challenges while enabling greater self-service capabilities.

1. Implement role-based access controls with Unity Catalog

Effective access control is the foundation of any governance framework, particularly in self-service environments. Role-based access control (RBAC) ensures users can only access the data appropriate for their role and business needs, maintaining security without creating unnecessary barriers.

Databricks Unity Catalog provides a unified governance model for data assets across the Databricks Lakehouse. It offers fine-grained access control at multiple levels—from catalogs down to individual columns—while centralizing identity management through integration with enterprise directories like Okta.

Dynamic access policies can incorporate sophisticated controls such as row-level security and data masking, with inheritance models that simplify permission management across large environments.

Prophecy takes this foundation and extends it seamlessly to the data preparation layer. Users automatically inherit appropriate permissions from Databricks without additional configuration, eliminating the need to maintain separate security models. Visual indicators show analysts which datasets they can access without requiring technical knowledge, and pipeline creation respects underlying Unity Catalog permissions automatically.

This integration creates a consistent access model across the entire analytics lifecycle. Technical teams define permissions once in Unity Catalog, and those permissions are automatically enforced throughout all data preparation activities.

The result is a security framework that eliminates governance gaps without adding friction for business users, allowing them to focus on analysis rather than navigating complex permission systems.

2. Establish metadata management and data discoverability

Without proper metadata management, even perfectly secured data remains inaccessible to the business users who need it. Comprehensive metadata—both technical and business—enables users to find, understand, and trust the data available to them, helping to overcome data silos.

Databricks offers several capabilities to enhance data discoverability. The Unity Catalog metadata layer provides a hierarchical structure of catalogs, schemas, and tables that organizes data assets logically.

Table descriptions and column comments capture technical metadata, while tags and properties enable custom metadata for business context. Search capabilities help users locate relevant data assets within this structure.

Prophecy extends these capabilities by providing a visual data browser that makes Unity Catalog accessible to non-technical users. This interface adds business context through integration with data discovery tools and enables simplified search across data assets without requiring SQL knowledge.

Rich metadata is displayed directly in the pipeline design interface, putting critical context at users' fingertips when they need it most.

Enhanced metadata management dramatically improves the self-service experience by reducing the time users spend searching for data. When business users can quickly find relevant, well-described datasets, they can focus on analysis rather than data discovery.

3. Enforce data quality with automated validation

As Paige Roberts, Senior Product Marketing Manager for Analytics and AI at OpenText, notes, "Automated quality validation isn't just a technical feature—it's the foundation that gives non-technical users the confidence to make high-stakes decisions based on their analyses."

Without built-in quality controls, self-service analytics can quickly lead to inconsistent or unreliable insights, undermining trust in data across the organization. Quality must be embedded throughout the data lifecycle, not treated as an afterthought or a separate process.

Databricks provides several capabilities to support data quality. Delta Lake time travel enables data versioning and rollback when quality issues are discovered. Schema enforcement and evolution maintain structural integrity as data evolves, while constraints and expectations enforce business rules at the data layer.

Open APIs allow integration with specialized data quality tools for more complex scenarios.

Prophecy builds on this foundation with comprehensive quality capabilities accessible to non-technical users. Visual data profiling immediately shows value distributions, null values, and other quality indicators, making potential issues visible without requiring SQL queries or code.

Built-in quality assertions can be added to pipelines with a few clicks, automatically validating data against expectations before it's used for analysis. Automated testing frameworks validate pipeline outputs against expected results, ensuring that transformations maintain data quality throughout the process.

Prebuilt quality components further implement best practices like deduplication, validation, and error handling, making quality accessible to users regardless of their technical background.

These integrated quality controls ensure that everyone, from data engineers to business analysts, works with reliable, consistent data. When business users can verify quality themselves, they gain confidence in their conclusions, and technical teams spend less time on quality verification.

4. Standardize with reusable, governed components

Standardization is critical for maintaining consistency, quality, and efficiency in self-service environments. Without standardization, each user creates their own approach to common data tasks, leading to inconsistent results, duplicated effort, and governance gaps. Well-designed standards encode best practices and governance requirements in reusable assets that anyone can leverage.

Databricks supports standardization through several mechanisms. SQL functions and procedures enable reusable logic that can be shared across queries and notebooks, using Spark SQL for data transformation.

Delta Live Tables also define data transformation pipelines with built-in quality controls and documentation. Parameterized notebooks can be reused for common analytical tasks, while CI/CD integration supports version control and consistent deployment practices.

Prophecy takes standardization to the next level with its innovative Packages feature. These reusable, governed components encapsulate best practices, quality controls, and optimization techniques in visual building blocks that non-technical users can easily incorporate into their workflows.

The visual function builder allows non-technical users to create standardized logic without writing code, widening the pool of contributors to the organization's standards library. The searchable component library also includes governance metadata that helps users understand how and when to use each component.

Standardization through reusable components improves governance while accelerating self-service. Technical teams can encode governance rules, quality standards, and optimization practices into components that business users can simply drag and drop into their workflows..

5. Implement comprehensive audit logging and monitoring

Visibility into data access and usage is essential for both governance and optimization. Without proper audit capabilities, organizations cannot verify compliance, identify potential issues, or understand how data assets are being used across the business.

Effective monitoring is proactive rather than reactive, catching problems early and providing insights for continuous improvement. Databricks provides several audit capabilities to support governance requirements. Account-level audit logs capture user actions, resource management, and access events.

Workspace audit logs track notebook, job, and cluster activities, providing visibility into who is doing what within the environment. Query history preserves SQL operations for review and troubleshooting, while integration with enterprise monitoring tools extends visibility to the broader data ecosystem.

Prophecy expands these capabilities with visual tools that make auditing accessible and actionable. Visual pipeline lineage shows data flow across the organization, making dependencies and impacts immediately clear without complex queries.

Comprehensive auditing, an essential aspect of modern data pipeline best practices, creates transparency without creating friction. Detailed pipeline run history includes execution metrics that help identify performance issues or unexpected behavior. User activity tracking for pipeline creation and modification provides accountability throughout the development lifecycle.

These capabilities are presented through simplified audit reports accessible to non-technical users, democratizing access to governance information. When business users can see how data flows through the organization and understand the controls in place, they become active participants in the governance process rather than passive recipients of restrictions. Enhancing data literacy is key to this empowerment.

6. Control costs with resource management

Self-service environments can quickly lead to runaway costs if not properly managed. As more users create and run data pipelines, compute and storage expenses can increase exponentially without proper guardrails. Effective resource management balances accessibility with fiscal responsibility, ensuring that self-service capabilities remain economically sustainable.

Databricks offers several cost management features that provide a foundation for responsible self-service. Cluster policies define acceptable configurations, preventing users from provisioning unnecessarily powerful (and expensive) resources.

Autoscaling optimizes resource utilization by adjusting compute capacity based on workload, while automated job management with scheduling ensures resources are only active when needed. Usage reporting and cost attribution provide visibility into where resources are being consumed.

Prophecy adds significant cost control capabilities that make resource management accessible to both technical and business users. Pipeline-level cost estimates are provided before execution, giving users immediate feedback on the resource implications of their work.

Optimization recommendations proactively suggest changes that reduce resource consumption without sacrificing performance, helping users develop cost-efficient habits.

Parameterized execution further allows users to balance performance and cost based on the specific needs of each workflow, while simplified scheduling helps optimize resource sharing across the organization. These capabilities put cost visibility directly in the hands of those creating and running pipelines, distributing responsibility rather than centralizing it solely with technical teams.

7. Enable governed access through intuitive interfaces

For governance to be truly effective in self-service environments, it must be accessible to all stakeholders, not just technical teams. Traditional governance approaches often rely on complex technical interfaces that exclude business users from participating in the governance process, creating a disconnect between those setting policies and those affected by them.

Databricks provides governance interfaces through several mechanisms. The Unity Catalog UI offers permission management capabilities, while Data Explorer enables browsing of available assets. SQL interfaces allow querying of metadata for those with appropriate skills, and integration with third-party governance tools extends capabilities for specialized needs.

Prophecy improves governance accessibility by wrapping these technical capabilities in intuitive, low-code visual interfaces, empowering non-technical users. All governance functions are accessible through consistent, business-friendly UIs that don't require technical expertise to navigate.

Natural language search for governance metadata helps users find the information they need without complex query syntax, while simplified version control enables collaboration without requiring Git knowledge.

Governance dashboards provide business stakeholders with at-a-glance visibility into key metrics and controls, making governance transparent and understandable across the organization. This transparency helps build a governance culture where everyone understands and values the controls in place rather than seeing them as obstacles to be worked around.

When governance is accessible to everyone, it becomes a shared responsibility rather than a technical burden. Business users understand and participate in governance processes, while technical teams focus on implementing governance frameworks rather than enforcing rules.

Real-world examples of self-service data governance frameworks

Implementing a comprehensive governance framework for self-service analytics requires balancing theoretical best practices with practical realities. Here are some case studies that examine how organizations successfully navigated this challenge, highlighting their approaches, solutions, and measurable outcomes.

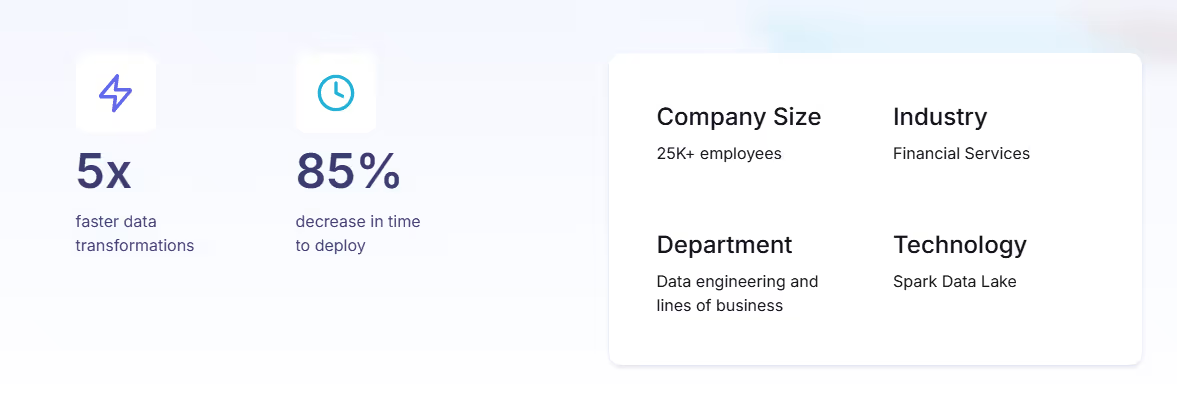

How a financial services firm overcame compliance bottlenecks

A major financial services organization faced the classic governance dilemma: maintaining strict regulatory compliance while meeting business demands for faster analytics. The gap between these priorities created significant tension throughout the organization.

Their compliance-focused approach required a thorough review of all data access and transformation logic, creating backlogs for new data requests. This bottleneck frustrated business teams who needed timely insights to respond to market changes and competitive pressures.

The situation led to shadow IT initiatives as business users created Excel-based analytics with manually extracted data, precisely the compliance risk the organization was trying to prevent. These workarounds not only created governance gaps but also resulted in inconsistent analyses and duplicated effort across teams.

The organization implemented a governance framework in Databricks with several key components:

- A unified permission model through Unity Catalog that enforced appropriate access controls automatically

- Standardized, pre-approved transformation components that encoded compliance requirements

- Automated quality validation throughout the data preparation process

- Comprehensive audit trails for all self-service activities

- Visual interfaces that made these controls accessible to business users

This approach embedded governance into the analytics workflow itself rather than treating it as a separate approval process. By automating policy enforcement, the organization maintained compliance standards without requiring manual reviews for routine operations.

The new framework dramatically transformed their analytics operations. Data preparation time decreased from weeks to hours, and shadow IT activities declined as users found the governed platform more effective.

The key insight from this implementation was that governance works best when it operates invisibly behind intuitive interfaces. By making governance automatic rather than manual, the organization satisfied both business needs for speed and compliance requirements without forcing a trade-off between them.

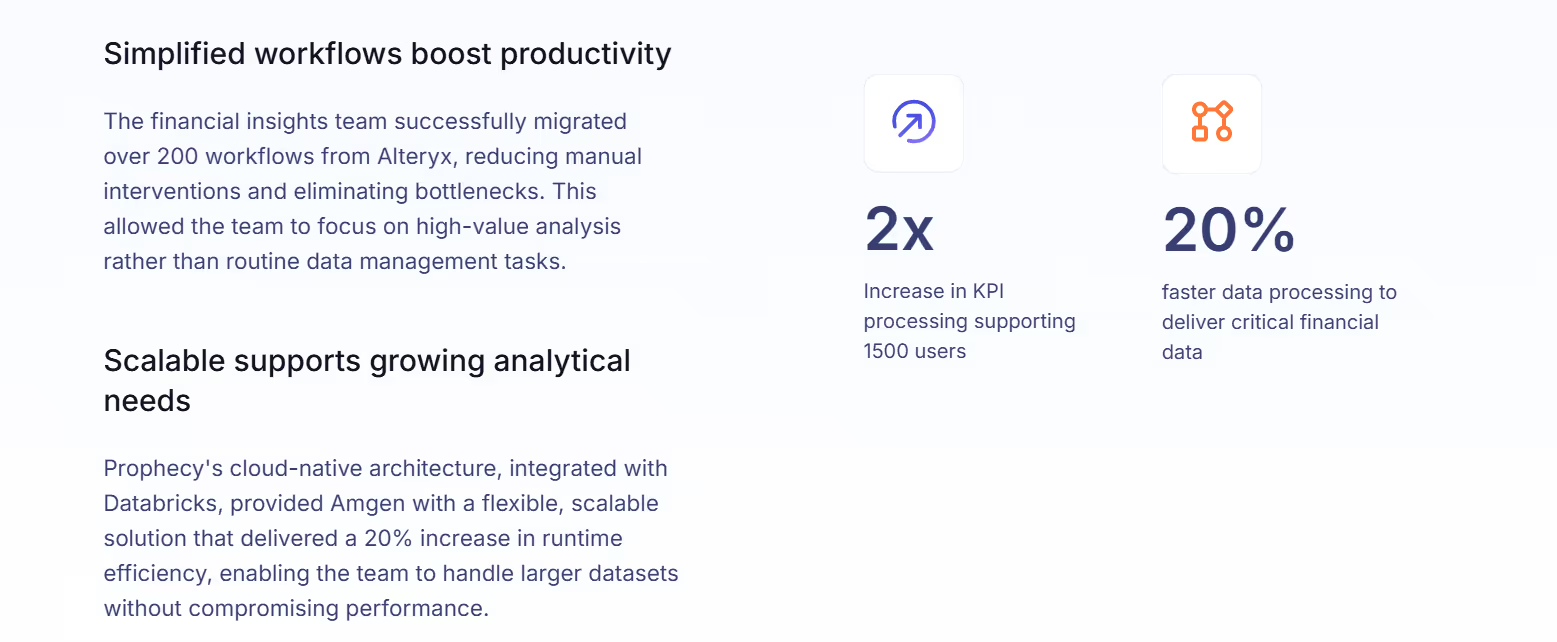

Amgen's approach to standardized data governance

Amgen faced significant governance challenges when attempting to deliver critical financial insights to their healthcare stakeholders. Their traditional approach created complexity, security issues, and inefficiencies that inhibited effective analytics.

According to Drew Davis, Data & Analytics Manager at Amgen, the team was left facing a lot of complexity with disjointed systems when using traditional data preparation tools. The fragmented approach left them with no way to automate user security, and the process required a lot of manual intervention.

This environment created several obstacles:

- Inconsistent governance across different tools and systems

- Manual security management that couldn't scale with demand

- Disconnected workflows that introduced errors and delays

- Limited visibility into data lineage and transformation logic

- High maintenance burden for the technical team

Amgen implemented a comprehensive governance framework focused on standardization and automation:

- Unified their data preparation environment on a single, governed platform

- Implemented automated access controls tied to enterprise identity systems

- Created standardized, reusable components for common financial calculations

- Established consistent quality validation throughout the preparation process

- Deployed comprehensive monitoring and observability tools

This standardized approach ensured consistent governance across all financial analytics while reducing the technical burden on the FIT team. By making governance systematic rather than ad hoc, they created a foundation for efficient, compliant self-service analytics.

The new governance framework eliminated duplicate work across tools and teams, reduced data inaccuracies through standardized transformations, and accelerated time-to-insight for financial analytics.

Amgen's experience demonstrated that effective governance isn't about restricting access—it's about providing the right structure to make self-service both efficient and compliant. By standardizing their approach, they created a foundation that supported both governance requirements and business agility.

Transform your data analytics with governed self-service

Traditional governance approaches force organizations to choose between control and accessibility. Modern frameworks—implemented through intuitive platforms like Prophecy eliminate this false dichotomy, enabling true self-service without compromising governance standards.

Most importantly, these frameworks build a stronger data culture that values both governance and accessibility. When governance is embedded in the tools that everyone uses daily, it becomes an enabler of innovation rather than a barrier. This cultural shift creates a virtuous cycle where self-service and governance reinforce each other rather than competing.

Here’s how Prophecy's visual interface makes this governance framework accessible to all stakeholders:

- Visual Data Lineage: Easily trace data origins and transformations, enabling quick identification of quality issues at their source.

- Automated Quality Checks: Integrate predefined and custom quality rules directly into data pipelines.

- Self-Service Dashboards: Provide business users with intuitive, role-based dashboards that offer real-time visibility into data quality metrics.

- Collaborative Issue Resolution: Enable seamless collaboration between data engineers and business users through integrated workflows.

- Metadata-Driven Governance: Leverage rich metadata to enforce data governance policies consistently across the organization.

To learn more about implementing governed self-service in your organization, explore Self-Service Data Preparation Without the Risk to empower both technical and business teams with self-service analytics strategies.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar

Analytics as a Team Sport: Why Data Is Everyone’s Job Now