Prophecy vs. Databricks Lakeflow Designer

A practical guide for teams evaluating Prophecy and Lakeflow Designer

When a cloud vendor launches new functionality that seems to overlap with other solutions in the ecosystem, it’s natural to ask: “Why not just use what they’ve built?” It’s a fair question, one we want to answer with clarity and transparency, especially in light of Databricks’ recent announcement.

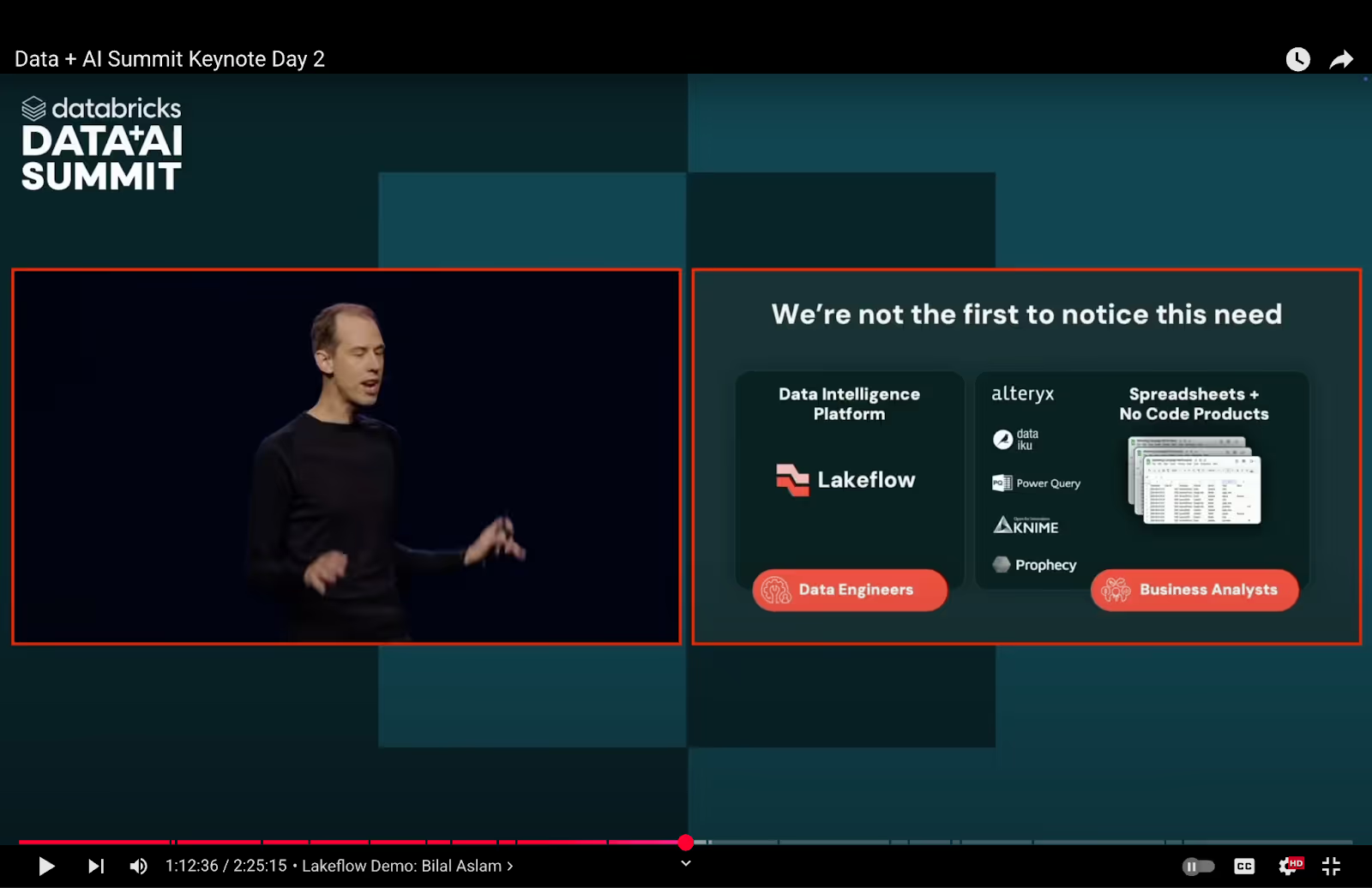

At the Databricks Data + AI Summit earlier this month, Databricks introduced Lakeflow Designer Canvas, a visual interface designed to help business analysts build pipelines. That's the same category Prophecy has been leading for years: governed, self-service data preparation on Databricks that is built for production. As experts in this field, we're being asked by customers to help them cut through the noise, and we hope this guide saves everyone a lot of time.

Here's our perspective: Databricks' announcement is great news. It's a strong signal that Databricks recognizes what we've known all along: there's a massive, untapped opportunity to bring more people into the data platform. Since Databricks Ventures invested in Prophecy, we've worked closely with Databricks to add value and build on their innovations such as Spark, SQL, and Unity Catalog. Together we're focused on providing the best solution to make our customers successful.

There is a long-established, healthy industry dynamic where companies simultaneously partner and compete. In fact, Databricks has such relationships with cloud vendors who ship their own Spark and SQL solutions. So this is par for the course and great for consumers overall.

Consider a Databricks customer today. Every single one uses a hyperscaler platform from Google, Amazon, or Microsoft. Those customers were fully aware of the array of data platforms and tools that come "in the box" from their cloud provider, but they chose Databricks anyway. If data is the new oil, don't I want a world-class refinery? Our joint customers, such as JPMorgan Chase, which was featured in the CEO keynote at DAIS, will continue the success they've had by combining the best of Databricks and Prophecy, backed by our robust partnership.

Let's compare the two products on three dimensions: the platforms each runs on, the users each supports, and the workloads each can handle.

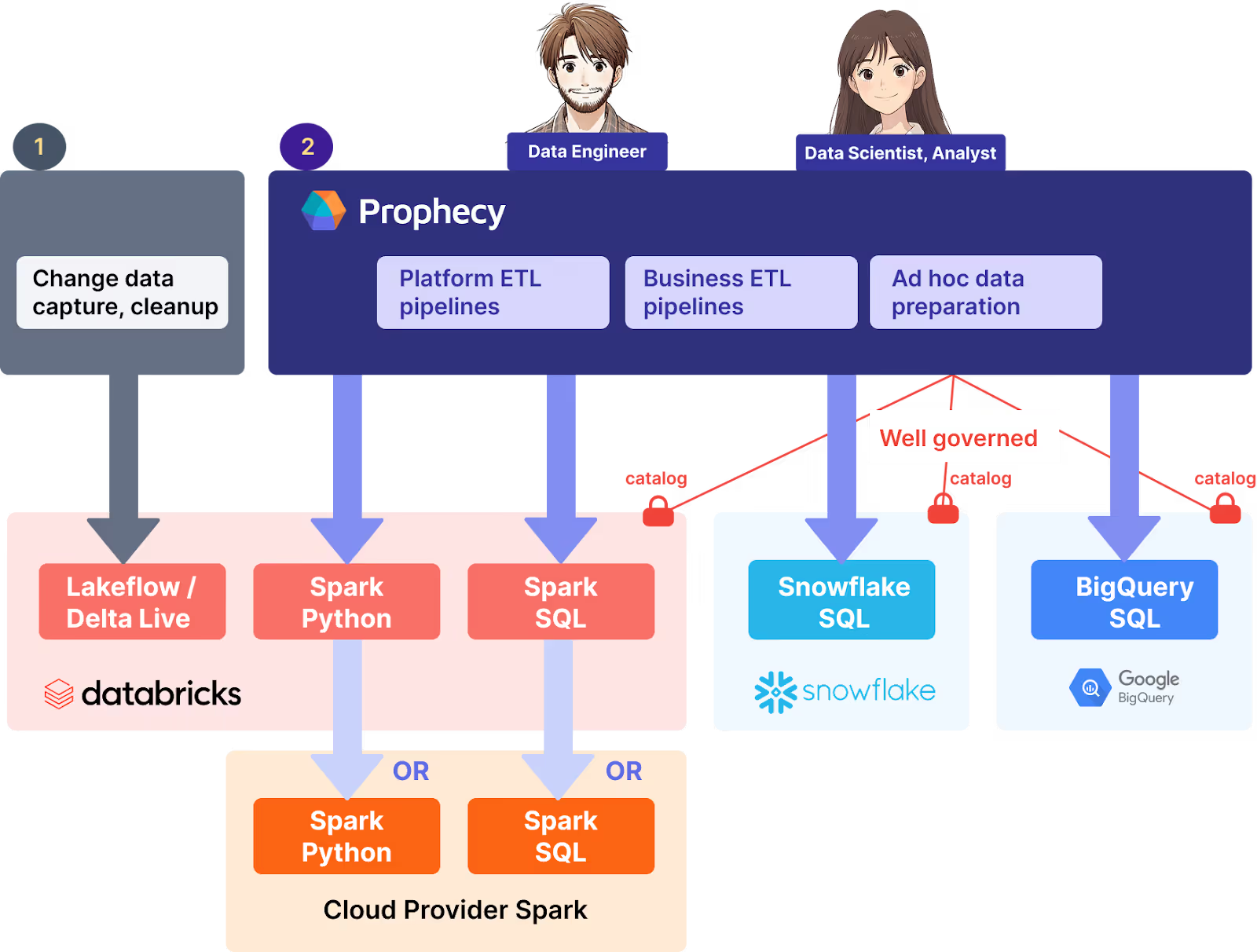

All platforms?

Let’s start with the basics: what platforms are supported?

- Lakeflow Designer is tightly coupled to the Lakeflow (or Delta Live Tables) product within Databricks. It does not support Databricks Spark (Python, Scala) or SQL outside of that context.

- Prophecy, in contrast, supports Spark and SQL pipelines on Databricks, as well as Spark on AWS, GCP, and Azure, plus Snowflake and BigQuery.

This matters because most enterprises today are multi-platform. In fact, nearly 50% of Databricks’ largest customers also use Snowflake. Are enterprise business analysts expected to learn a new designer product for every platform?

And for most enterprises, pipeline tooling is a “considered purchase.” Analysts stick with the tools they know for years, often decades. If you're evaluating self-service data prep for analysts, you want something that’s flexible, future-proof, and production-ready. Prophecy is all three.

Verdict: Prophecy supports the varied platforms that real-world data teams use.

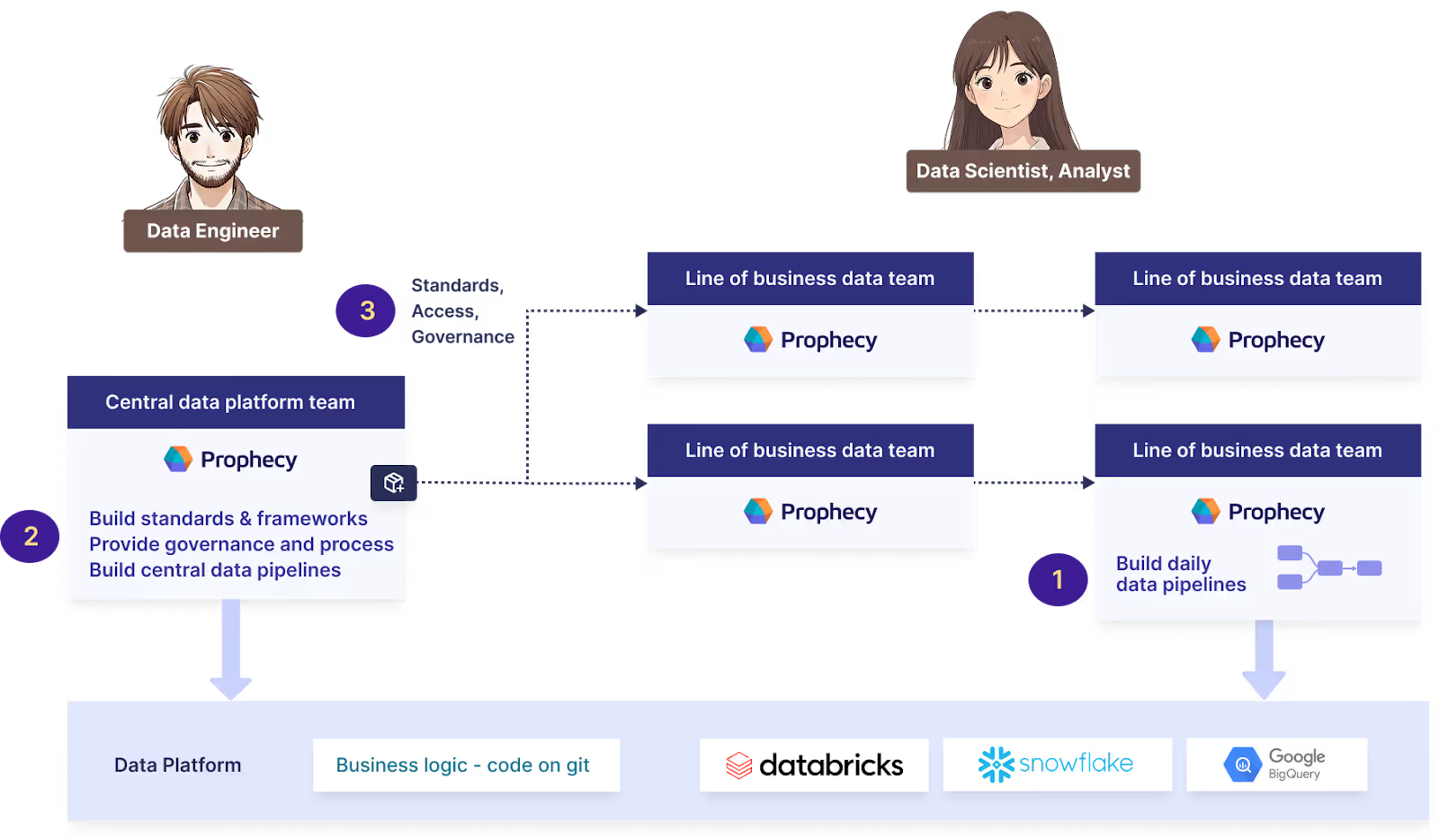

All users?

Supporting modern data teams requires two things:

- Business users must be able to build daily pipelines.
  - Daily (or analysis) pipelines prepare data and produce reports and dashboards. This requires a rich set of visual operators and a responsive interface suited to iterative, ad hoc workflows.
  - Prophecy and Alteryx are excellent at this.
  - Lakeflow Designer has five operators on top of a data engineering stack.

- Data platform teams need to govern, build standards, and enable reuse.
  - Out-of-the-box operators are rarely sufficient for enterprises. In practice, you need high-quality reusable operators for actions such as data quality checks, calls to internal systems, or abstracting repeated business logic.
  - Prophecy enables users to create new visual components from Spark or SQL functions. Users can also build reusable templates, reusable subgraphs that capture larger blocks of shared logic, and reusable business rules that apply consistent definitions of business terms.
  - Lakeflow Designer does not have any of these concepts.

Verdict: Prophecy has the architecture, constructs, and depth to support all users.

All workloads?

ETL workloads need many features developed over decades by Informatica, Ab Initio, and IBM DataStage. Prophecy supports this feature set. It has migrated large enterprises off all of these products and runs large workloads (tens of thousands of pipelines) in production.

Business data prep and analysis need many features developed over decades by Alteryx. Prophecy supports this feature set, along with the simple, fast ad hoc experience that analysts require. Prophecy also has an import button for Alteryx pipelines and has already replaced many Alteryx workloads.

Lakeflow Designer does not have the table-stakes feature set for either of these workloads.

Verdict: Prophecy is proven to support all workloads on Databricks.

AI-Native Development

AI-native and SaaS dynamics are playing out very differently in the market.

- For slow-moving SaaS technologies, large players are able to replicate what others have built. For example, SQL has not changed much since Teradata in 1985, so it can be added with adequate investment. Similarly, Microsoft was able to build Teams and bundle it to compete with Slack.

- For fast-moving AI-native technologies such as programming assistants, new players such as Cursor and Windsurf have blown past incumbent products such as Microsoft's GitHub Copilot.

Prophecy has an AI-native architecture that includes:

- A data discovery agent that helps you find and understand datasets across Databricks and other data platforms, using an integrated chat and visual interface.

- A data transformation agent with a generate-then-refine mechanism. It generates transforms and then helps you refine them to take them from 80% to 100%. This includes:
  - An inspect-and-validate mechanism that walks you through inspecting the generated results to confirm they are what you expect.
  - The ability to restore previous states if you want to backtrack from the path you've gone down.

- An AI autonomy slider. Initially the AI can modify whole pipelines. Over time you narrow its scope to incremental additions to the work already done, all the way down to zero assistance.

We are also working with design partners on additional agents, which were demoed at DAIS 2025:

- A documentation agent helps users in regulated industries such as finance, healthcare, and accounting document their data transformations in the required regulatory formats.

- A requirements agent helps users generate data pipelines from requirements documents written by business users. It keeps those documents up to date as the pipelines are refined.

- A Fix-It agent helps users fix issues while they develop pipelines, and suggests fixes when a pipeline fails in production.

- A migration agent helps users import Alteryx pipelines and then, step by step, match the output data and fix the pipelines.

Lakeflow Designer offers simple one-shot AI rather than planning and agents.

Isn’t Lakeflow Designer free?

Lakeflow Designer may seem free. But if you're moving from a product such as Alteryx, where data processing was nearly free, you'll pay far more to Databricks in compute than you would spend on tooling. Your bill will go up substantially.

In the world of data, people are the largest cost. Skimping on their productivity, while spending more than you save on compute, is not a sound financial decision.

Final Verdict

Lakeflow Designer is an acknowledgement of a need and an expression of a desire to provide a solution. It may be worth exploring if you're building only declarative pipelines on Lakeflow and enabling a small group of analysts with basic pipeline needs.

If you're an enterprise looking to enable governed self-service across business and platform teams, on Databricks and beyond, Prophecy is the proven choice. We support Spark and SQL, integrate deeply with Unity Catalog, and offer critical capabilities like version control, reusable pipeline standards, and cross-platform support.

Prophecy is built for the reality most enterprises face: multiple platforms, multiple users, and the need for production-ready pipelines with governance built in.

Start a free trial of Prophecy today.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

