How Effective Data Orchestration Eliminates Silo-Driven Data Chaos

Learn how effective data orchestration transforms scattered data streams into a cohesive business advantage. Discover how modern data orchestration eliminates silos and accelerate analytics transforming raw data into value.

While most companies today have invested heavily in collecting vast amounts of information, relatively few have mastered the crucial middle ground—the sophisticated coordination of data across systems that turns potential into performance.

This disconnect creates a paradoxical situation where companies are simultaneously data-rich and insight-poor. Business leaders increasingly recognize this problem, with decision-makers left waiting for critical intelligence while valuable data sits untapped in various repositories throughout the organization.

In this article, we'll explore how data orchestration solves this challenge by coordinating the flow of information across your entire data ecosystem. We’ll discover how strategic orchestration breaks down silos, accelerates analytics, and transforms raw data into your most valuable business asset.

What is data orchestration?

Data orchestration is the strategic coordination of data movement, transformation, and access across an organization's entire information ecosystem. Unlike isolated data management approaches, orchestration creates a cohesive framework that ensures data flows seamlessly to where it creates the most value.

Think of data orchestration as the conductor of your data symphony—it doesn't create the music itself but ensures all instruments play in perfect harmony. Without proper orchestration, your data initiatives become a chaotic jumble of disconnected notes rather than a harmonious melody driving business outcomes.

Modern orchestration goes far beyond basic data pipelines, which simply move information from point A to point B. Instead, it provides an intelligent layer that manages the entire data lifecycle—from initial collection through transformation, quality control, governance, and finally to consumption by business users.

The true power of orchestration lies in its ability to break down traditional barriers between technical and business teams. By creating standardized, automated workflows for data movement and preparation, orchestration eliminates bottlenecks that typically slow down data-driven decision making.

For data teams, orchestration means spending less time on repetitive data preparation tasks and more time delivering business value. For business users, it means faster access to trusted data without constant reliance on technical specialists.

Data orchestration vs. data visualization vs. data pipeline

These three concepts are often confused but serve distinctly different purposes in the data ecosystem:

Key components of effective data orchestration

When implementing data orchestration, organizations typically progress through these components in a logical sequence, though the specific approach may vary based on existing infrastructure and business priorities.

Each component addresses a critical aspect of the orchestration process:

- Data source integration: Connect to and extract structured and unstructured data from various sources, including databases, applications, APIs, and file systems, using effective data integration strategies. Modern orchestration platforms support hundreds of connectors to simplify this process without requiring custom coding for each new source.

- Metadata management: Create and maintain a comprehensive catalog of available data assets, their lineage, quality metrics, and business context. This "data about your data" forms the backbone of effective orchestration by making information discoverable and understandable.

- Workflow design and automation: Build reusable data transformation and movement processes using visual interfaces rather than complex code. These workflows define how data moves between systems and how it's processed at each step.

- Quality control and validation: Implement automated checks to ensure data meets quality standards before it reaches downstream systems. This includes validation rules, anomaly detection, and data profiling to identify potential issues early.

- Governance and security: Implement modern data governance models to enforce policies for data access, compliance requirements, and security measures throughout the orchestration process. Modern platforms embed these controls directly into workflows rather than applying them as a separate layer.

- Monitoring and observability: Track the health, performance, and business impact of orchestrated workflows. This visibility enables teams to proactively address issues before they affect business operations and continuously optimize their orchestration processes.

When these six components work in harmony, data teams gain a unified command center that transforms disconnected data streams into a cohesive, business-ready asset.

This complete orchestration foundation eliminates the traditional gaps between raw data and business value, enabling teams to manage the entire data lifecycle with confidence—from initial collection through transformation to final delivery—without the technical debt and fragmentation that typically plague enterprise data initiatives.

The business case for data orchestration and benefits

Effective data orchestration delivers tangible business value that directly impacts bottom-line results:

- Break down entrenched data silos: Connect isolated information pockets without requiring wholesale migration. For example, healthcare organizations leverage orchestration to combine clinical, operational, and financial data for improved patient outcomes and operational efficiency, gaining insights that were impossible when analyzing each domain separately.

- Democratize data access safely: Embed governance directly into workflows instead of treating it as a separate gatekeeping function. Organizations can maintain protection while dramatically simplifying access by tagging sensitive elements and automatically applying appropriate controls based on user roles, enabling innovation without compromising compliance.

- Scale analytics without scaling teams: Automate routine data tasks through visual interfaces and reusable components that enable data analysts and business users to create workflows previously requiring specialized engineering skills. For example, financial institutions consolidate customer data across dozens of systems for unified profiles that drive personalized offerings while strengthening risk management.

- Accelerate time-to-insight: Reduce the lag between business questions and data-driven answers from weeks to hours by eliminating manual handoffs between teams. Organizations gain competitive advantage by responding to market changes faster, optimizing operations in real-time, and identifying opportunities before competitors.

- Improve decision quality: Ensure all stakeholders work from consistent, high-quality information instead of fragmented views. This consistency eliminates contradictory analysis and builds organizational trust in data-driven decision making, creating a virtuous cycle of increased data usage and improved outcomes.

Tools for data orchestration

The data orchestration landscape includes numerous tools with varying capabilities, deployment models, and specializations. Let’s see the most popular ones.

Open-source orchestration frameworks

Open-source frameworks offer powerful orchestration capabilities with maximum flexibility and no licensing costs. These tools have gained widespread adoption due to their extensibility and strong community support, though they typically require more specialized skills to implement and maintain.

Apache Airflow stands out as the most widely adopted open-source orchestration tool. Developed initially at Airbnb, Airflow uses Python to define workflows as directed acyclic graphs (DAGs) and provides extensive monitoring capabilities.

Its strength lies in handling complex dependencies between tasks and integrating with virtually any system through its operator architecture. Organizations with Python expertise often choose Airflow for its flexibility, though it requires significant development expertise.

Apache NiFi takes a different approach with a completely visual interface for designing data flows. Originally developed at the NSA, NiFi excels at moving and transforming data between systems without requiring coding.

Its visual approach makes it accessible to wider audiences, though it may require more server resources than lightweight alternatives. NiFi particularly shines in use cases involving real-time data routing with complex branching logic.

Other notable open-source options include Luigi (developed by Spotify), focusing on batch processing with Python; Prefect, offering modern features like hybrid execution; and Dagster, emphasizing data quality checks within pipelines. Each has distinct advantages for specific use cases, from simple scheduled jobs to complex multi-system orchestration with extensive error handling.

Cloud data platforms

The newest generation of data platforms like Databricks has redefined cloud data engineering by unifying analytics, engineering, and machine learning workloads. These platforms provide the foundational processing layer for modern orchestration, combining massive scalability with sophisticated optimization techniques that dramatically improve performance.

Databricks has emerged as a leading platform, combining the power of Apache Spark, including robust Spark SQL transformation, with an optimized lakehouse architecture that blends data lake flexibility with warehouse performance. Its unified approach eliminates traditional silos between batch processing, streaming analytics, and machine learning workflows.

This integration creates a powerful foundation for orchestration, but implementation still requires specialized expertise in Spark programming and platform management. This expertise gap has driven the development of specialized tools that enhance cloud data platforms with purpose-built interfaces.

Visual data pipeline designers now transform how teams work by making data orchestration accessible to broader audiences beyond specialized engineers. Through drag-and-drop interfaces, data analysts and business users can create sophisticated data workflows without writing complex code.

This balance helps organizations scale their data capabilities while maintaining the control required in regulated environments.

Common challenges with data orchestration

Despite its transformative potential, implementing effective data orchestration comes with significant challenges. Organizations often encounter both technical and organizational obstacles that can derail orchestration initiatives if not properly addressed.

Data quality erosion at enterprise scale

Data quality issues become exponentially more dangerous when orchestration automates data movement across the enterprise. Poor quality data flowing through orchestrated pipelines propagates errors throughout downstream systems, undermining trust in the entire data ecosystem.

This garbage-in, garbage-out reality persists regardless of technological advances. This fundamental challenge intensifies at scale as data volumes grow and sources multiply, requiring organizations to implement automated quality controls that validate information against defined rules before it enters orchestration workflows.

Consistency across distributed datasets presents particular difficulties when multiple teams interact with similar information across different systems. Without coordinated management, orchestration may combine inconsistent versions of ostensibly identical data, leading to conflicting analyses and eroding trust.

Successful organizations implement layered quality management within orchestration frameworks. Source validation identifies issues at entry points, transformation validation ensures processing maintains integrity, and delivery validation confirms information meets consumer requirements. This comprehensive approach catches issues at multiple stages rather than relying on a single quality checkpoint.

Metadata management becomes essential for scaling quality efforts, providing context that enables consistent interpretation across diverse data domains. By maintaining comprehensive information about data lineage, transformation rules, quality metrics, and business definitions, organizations create a foundation for consistency even as orchestration complexity increases.

Performance bottlenecks that stall business decisions

As orchestration scales across more systems and larger data volumes, performance optimization becomes increasingly challenging.

Performance issues quickly escalate into processing backlogs when data pipelines cannot execute at the speed the business requires. When time-sensitive orchestration workflows get stuck in processing queues, business teams make decisions with outdated information or delay actions entirely while waiting for insights.

Resource contention further compounds these performance challenges when multiple orchestration workflows compete for limited processing capacity, storage bandwidth, or network resources. Without appropriate scheduling and prioritization, critical business processes may be delayed by less important but resource-intensive operations. This bottleneck intensifies in shared environments where orchestration competes with other workloads for finite computing resources.

Balancing batch and real-time processing requirements creates additional performance complexity. Many organizations need both approaches—batch for efficiency with large historical datasets and real-time for operational decisions requiring current information. Orchestrating these different processing models within a unified framework requires careful resource allocation to meet varying service-level agreements without creating performance degradation.

Leading organizations also implement dynamic resource management within their orchestration platforms to address these performance bottlenecks. Rather than statically allocating capacity, they use automated scaling that adjusts resources based on workload characteristics and business priorities. This approach optimizes utilization while ensuring critical processes receive necessary resources during peak demand, keeping data flowing at the speed business requires.

Shadow analytics that undermine governance

The enabled with anarchy trap (a term Prophecy CEO and Cofounder Raj Bains uses to explain ungoverned data access) surfaces when organizations provide self-service capabilities without appropriate governance frameworks.

Data platform teams build sophisticated orchestration environments with catalogs and controls, but then data gets copied onto desktops, leading to a situation where business leaders have no idea what represents the source of truth.

This governance gap happens most frequently in regulated industries like healthcare, financial services, and pharmaceuticals. Data privacy regulations, industry-specific compliance frameworks, and corporate governance policies all affect how data can be orchestrated across the enterprise, but become impossible to enforce when data escapes the managed environment.

Access control becomes more complex in orchestrated environments where data flows through multiple systems with different security models. Organizations must maintain appropriate restrictions throughout the entire data lifecycle without creating unnecessary barriers that undermine orchestration benefits.

This balance requires security controls embedded within orchestration workflows rather than implemented as separate processes.

Data residency and sovereignty requirements add another layer of complexity for global organizations. Different jurisdictions impose varying restrictions on where data can be stored and processed, creating orchestration challenges when information must flow across geographic boundaries.

These restrictions may require region-specific orchestration patterns that maintain compliance while still enabling enterprise-wide analytics.

Successful organizations make governance an integral aspect of orchestration design rather than an afterthought. By embedding appropriate controls directly into orchestration workflows, they ensure compliance without creating separate processes that would introduce friction and reduce efficiency.

This "governance by design" approach maintains protection while preserving the performance benefits of effective orchestration.

Fragmented control across disconnected systems

When organizations build their orchestration infrastructure piecemeal over time, they often create what Akram Chetibi, Director of Product Management at Databricks, calls the "seven different systems syndrome". Each tool added to the stack brings its own security profile, network requirements, governance model, and maintenance burden, creating exponential complexity that compounds with every addition.

This fragmentation manifests in practical challenges that directly impact both technical teams and business outcomes. Security practices become inconsistent across systems, metadata standards fail to translate between platforms, and governance policies can't be uniformly applied.

The result is that technical teams spend more time managing connections between systems than extracting value from the data flowing through them.

The problem intensifies as organizations scale, with each new data source or analytical requirement often introducing yet another specialized tool to the mix. Before long, data teams find themselves constantly context-switching between interfaces, juggling multiple authentication systems, and piecing together monitoring across disconnected platforms instead of focusing on business value.

Legacy tools designed for smaller data volumes further exacerbate the problem as they struggle with today's massive and diverse data streams. Many require extensive manual coding that cannot adapt quickly enough to changing business requirements, creating a constant maintenance burden that prevents innovation and limits responsiveness to business needs.

Organizations that successfully avoid this syndrome implement unified control planes that abstract away the complexity of multiple underlying systems. These platforms provide consistent governance, security, and observability across the entire data ecosystem, regardless of where data physically resides.

By focusing on accessibility and context rather than location, they enable a more flexible approach to data management that adapts to business needs rather than technical constraints.

The real cost of outdated data orchestration processes

Organizations often underestimate the true cost of maintaining status quo data workflows. While continuing with existing processes might seem like the safe, cost-neutral option, outdated data approaches impose substantial hidden costs that impact the entire business.

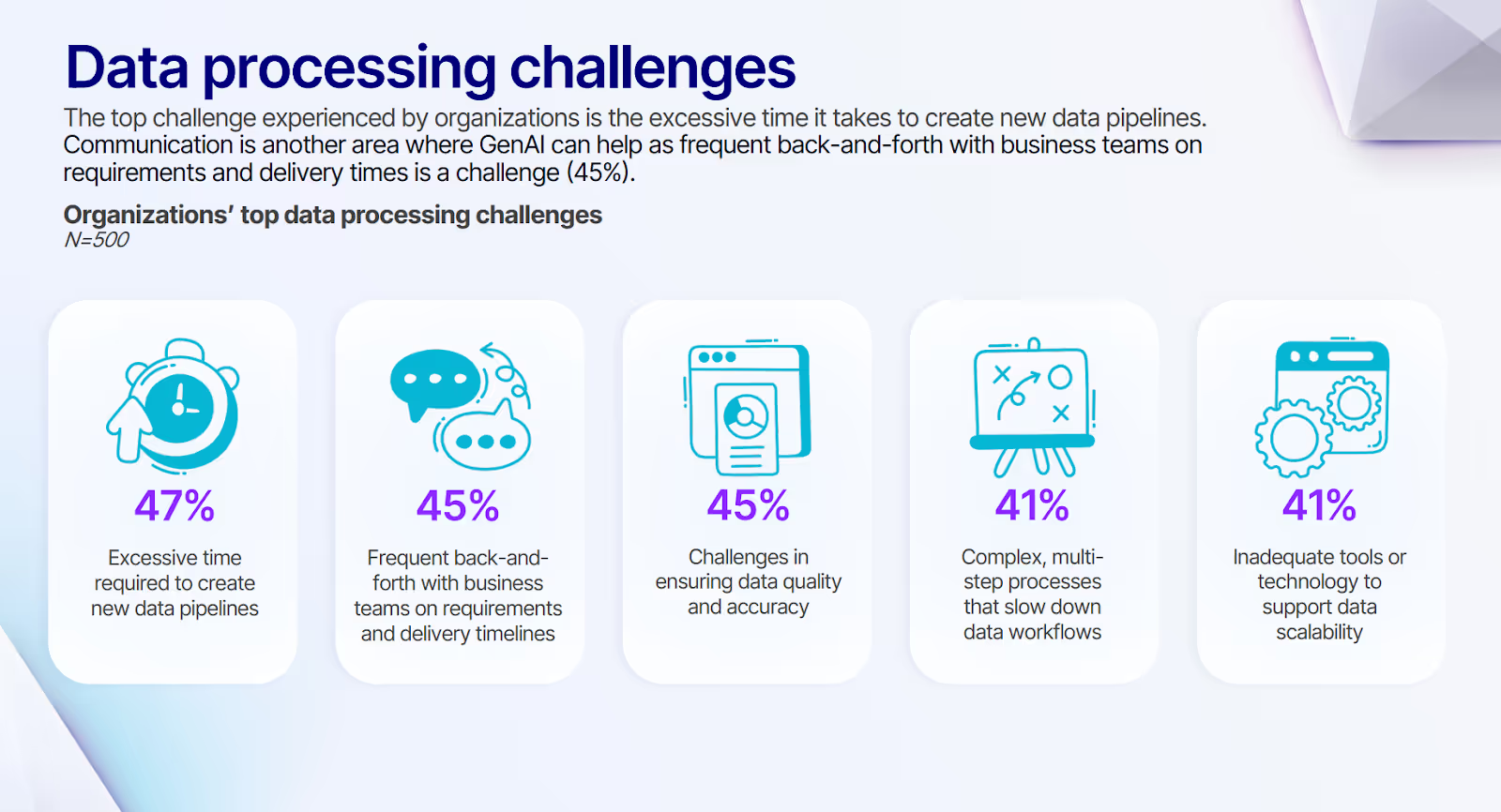

The most immediate impact falls on data teams themselves, who spend up to 47% of their time on data pipeline creation rather than high-value analysis.

This inefficiency isn't just a productivity problem—it's a massive opportunity cost as skilled professionals focus on repetitive tasks instead of insights that drive business value.

Business teams bear even greater costs through delayed decisions and missed opportunities. When market conditions change but data preparation takes weeks, organizations make choices based on outdated information. This delay creates tangible costs—promotions launched too late, inventory decisions based on old trends, or investments that miss emerging patterns.

The quality cost of manual processes may be less visible but equally damaging. When data preparation relies on individual knowledge rather than standardized workflows, inconsistent approaches create varying results. Different teams analyzing the same question may reach contradictory conclusions, leading to fractured decision-making and strategic confusion.

Perhaps most concerning is the innovation cost as organizations struggle to incorporate new data sources or analytical techniques. Without efficient orchestration, each new data initiative requires extensive manual setup that discourages experimentation. Organizations find themselves limited to analyzing the same data in the same ways, while competitors gain an advantage through newer approaches.

Transform your data strategy with end-to-end orchestration

Data orchestration has evolved from a technical capability into a strategic business advantage. Organizations that effectively coordinate their data flows unlock insights that drive competitive differentiation, operational efficiency, and improved customer experiences.

The most successful organizations recognize that modern data orchestration must balance two seemingly contradictory needs: enabling self-service for business users while maintaining production-grade reliability and governance. This balance is what separates strategic orchestration from basic data movement, creating an environment where innovation accelerates without compromising standards.

Here’s how Prophecy provides a complete solution for modern data orchestration built specifically for today's cloud data environments:

- Governed self-service data preparation: Prophecy empowers business analysts to prepare data on their own while operating within guardrails defined by central data teams, eliminating bottlenecks without sacrificing control.

- Visual pipeline building with code quality: Create sophisticated data workflows through intuitive drag-and-drop interfaces that generate high-quality code behind the scenes, making orchestration accessible while maintaining professional standards.

- Integration with cloud data platforms: Leverage the full power of platforms like Databricks while adding the orchestration layer needed for business agility, combining enterprise-scale performance with user-friendly interfaces.

- End-to-end data workflows: Handle the complete orchestration lifecycle from source connection through transformation to destination delivery, eliminating the need to piece together multiple disconnected tools.

- Enterprise-grade governance: Build trust through version control, access management, and lineage tracking that ensure compliance and quality without creating friction or slowing development.

- AI-powered development: Intelligent assistance accelerates pipeline creation while embedding best practices that optimize performance and maintainability.

To transform fragmented data processes that prevent timely insights and strategic decisions, explore How to Assess and Improve Your Data Integration Maturity to unlock competitive advantages through effective end-to-end orchestration.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar

Analytics as a Team Sport: Why Data Is Everyone’s Job Now