A Guide to Strategic Data Integration that Transforms Business Operations

Master modern data integration techniques that break down silos and drive business value. Learn practical approaches to combine disparate data sources for actionable insights.

This article was refreshed on 05/2025.

Businesses today are accumulating vast amounts of data from multiple sources—internal databases, external systems, IoT devices, social media, and more. Yet despite this wealth of information, many struggle to transform this scattered data into actionable insights that drive business value.

Data integration steps in as a process that combines disparate data sources into a unified view, enabling better decision-making and operational efficiency across an organization. Effective data integration requires not only technology but also the right data engineering skills to manage and process data effectively.

Let's explore data integration, its key processes, and modern approaches to help you extract maximum value from your data.

What is data integration?

Data integration is the process of combining data from different sources into a unified, coherent view that delivers significant business value.

Rather than simply moving data from one place to another, effective integration transforms raw information into something accessible and meaningful for everyone in your organization, whether they're analysts diving deep into the numbers or executives needing quick insights for strategic decisions.

What began as simple database connections has transformed into sophisticated processes capable of handling diverse data types—structured data in traditional databases, unstructured data like videos and documents, and semi-structured data from sources like IoT devices and sensors—all at enterprise scale.

Data integration benefits

Effective data integration delivers several key advantages for organizations:

- Comprehensive view: Combines disparate data sources into a unified perspective, enabling more holistic analysis and understanding of business operations

- Improved decision-making: Provides stakeholders with complete, accurate information to drive better strategic and tactical decisions

- Enhanced operational efficiency: Eliminates redundant processes and manual data entry, streamlining workflows across departments

- Data quality improvement: Identifies and resolves inconsistencies between systems, leading to higher overall data quality

- Greater agility: Enables organizations to quickly adapt to changing conditions by providing timely access to critical information

Data integration use cases and examples

Organizations apply data integration across various scenarios to drive business value:

Customer 360° view

Companies integrate customer data from CRM systems, website interactions, purchase history, and support tickets to create comprehensive customer profiles. This integrated view enables personalized marketing, proactive service, and improved customer experiences. Asset management firms leverage this approach to anticipate client needs and optimize relationship management.

Machine learning and AI

Organizations increasingly integrate diverse data sources to build effective machine learning models, including those powered by generative AI platforms. By combining structured transaction data with unstructured customer feedback and market signals, companies develop more accurate predictive models for everything from fraud detection to product recommendations.

Healthcare analytics

Medical organizations integrate patient records, treatment data, and outcomes across departments to improve care quality and operational efficiency. A Fortune 50 medical company demonstrated significant improvements in patient outcomes by integrating previously siloed clinical and administrative data systems.

Supply chain optimization

Manufacturers integrate production data with inventory systems and supplier information to optimize operations and reduce costs. This integration enables automated inventory management, predictive maintenance, and more accurate demand forecasting, resulting in reduced stockouts and lower carrying costs.

Types of data integration

Organizations employ various integration approaches to meet their specific business needs. Each addresses different technical constraints and use cases, making it important to understand their essential characteristics before implementation.

Integration methods and patterns

When selecting an integration approach, you'll encounter several distinct methods, each with its own advantages:

- Batch Data Integration: Data is collected over time and processed together in scheduled jobs. This approach works well when real-time updates aren't critical, such as nightly financial reconciliation or weekly sales reporting. Batch processing efficiently handles large volumes of data with complex transformations.

- Real-time Data Integration: Data is processed immediately as it's created, enabling instant insights and actions. This method powers time-sensitive operations like fraud detection, inventory management, or personalized customer experiences. It's essential when business decisions depend on up-to-the-minute information.

- Virtual Data Integration: Creates a virtual layer that queries source systems directly without physically moving data. This approach provides real-time access while minimizing data duplication, making it suitable for scenarios where storage space is limited or when you need to maintain data at its source.

- Application Integration: Connects applications to share functionality and data between systems through APIs and middleware. Unlike pure data integration, which focuses on data movement, application integration enables process-level coordination between software systems, supporting operational workflows.

Many organizations implement multiple integration approaches simultaneously. You might use batch processing for historical analytics while employing real-time integration for customer-facing applications.

Application integration versus data integration

While related, application integration and data integration techniques serve different purposes in your technology ecosystem:

Application integration connects different software components to work together, whereas data integration focuses on consolidating information from diverse sources. You'll likely need application integration when your focus is on business process automation or when different systems need to work together seamlessly.

How does data integration work?

Data integration follows a systematic process that transforms disconnected data into unified, valuable information:

- Data Collection: The integration process begins by identifying and connecting to relevant data sources across the organization. These may include databases, applications, cloud services, files, and APIs. Modern integration platforms provide pre-built connectors to simplify this stage.

- Data Extraction: Once connections are established, the integration system extracts data from source systems according to defined schedules or triggers. This may occur in batches at scheduled intervals or in real-time as data changes happen.

- Data Transformation: Raw data from different sources typically requires transformation to create a unified view. This includes standardizing formats (e.g., date formats, units of measurement), cleansing data (removing duplicates, correcting errors), enriching data with additional information, and applying business rules.

- Data Loading: The transformed data is loaded into target systems, such as data warehouses, data lakes, or business applications, in formats appropriate for analysis and operational use. This step ensures that integrated data is available to the right people at the right time.

- Orchestration and Monitoring: The entire integration process is orchestrated through workflows that manage dependencies, handle errors, and ensure data flows as expected. Monitoring tools track integration performance, data quality, and system health.

Organizations implement this process using various technical approaches, with ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) being the most common patterns.

Different approaches to data integration in ETL vs. ELT

In the ETL approach, data integration occurs by first extracting data from source systems, transforming it in a staging area to standardize formats and apply business rules, and finally loading the processed data into target systems.

This pattern creates a structured integration flow where data quality, normalization, and business logic are applied before data reaches its destination. ETL has traditionally been the go-to pattern for integrating data into data warehouses.

As data landscapes evolved with greater volume, variety, and velocity, integration patterns shifted toward ELT methodologies. Here, data integration begins by extracting raw data and loading it directly into the target systems, with transformations occurring afterward using the computing power of the destination platform. This integration pattern provides greater flexibility and leverages modern cloud data platforms' processing capabilities.

Both patterns address data integration differently. ETL provides highly controlled integration with predefined transformation rules, while ELT offers more flexible integration where transformations can be adapted without reloading source data.

Creating a data integration strategy and plan

A successful data integration initiative requires more than just technology—it demands thoughtful planning and a well-defined strategy aligned with business objectives. Let's outline a structured approach to developing and implementing an effective data integration strategy that balances immediate tactical needs with long-term strategic goals.

Assess your current state and define objectives

Begin your data integration journey by thoroughly mapping your current data landscape. Document all existing data sources, systems, integration points, and identify prominent pain points where data silos are causing business friction. Addressing these issues is essential for overcoming data silos. This assessment provides the foundation upon which all subsequent integration efforts will build.

Next, define clear integration objectives that align with broader business goals. Conduct stakeholder interviews and organize use case workshops to gather requirements from business units that will use the integrated data. Remember that effective integration strategies start with understanding business needs, not technology selection.

Create a prioritized list of integration requirements based on business impact and feasibility. A retail company might prioritize integrating inventory and sales data to improve forecast accuracy, while a healthcare provider might focus on connecting patient records across departments to enhance care quality.

Design your integration architecture

When designing your integration architecture, decide between centralized approaches (like data warehouses) and decentralized models (such as data federation). This decision should reflect both your current needs and anticipated future growth. Consider whether real-time processing is necessary or if batch processing will suffice for your business requirements.

Evaluate your deployment options carefully. Cloud-based integration platforms offer scalability and reduced infrastructure management, while on-premises solutions may better address specific security or compliance requirements. Many organizations benefit from hybrid approaches that combine elements of both.

Document your architecture thoroughly, including data flows, transformation rules, and governance protocols. Consider selecting ETL tools that align with your design. This documentation ensures consistent understanding across teams and facilitates maintenance as your integration landscape evolves.

Implement, monitor, and evolve

Implement your integration strategy iteratively, delivering value with each cycle rather than waiting for a massive end-state deployment. Start with high-impact, manageable integration projects that demonstrate clear business benefits while building toward your larger architectural vision.

Establish monitoring mechanisms that track both technical performance metrics (like processing times and error rates) and business impact indicators (such as improved decision-making speed or enhanced customer experience). Tools that provide real-time visibility into data flows enable quick identification and resolution of integration issues.

Review and evolve your integration approach regularly as business requirements change and new technologies emerge. What works today may need adjustment tomorrow. As data volumes grow, you might shift from batch processing to real-time streaming, or as cloud adoption increases, you might migrate from on-premises integration tools to cloud-native services.

The challenges of data integration and their solutions

From technical complexities to organizational barriers, challenges can derail integration initiatives if not properly addressed. So, what are the key challenges?

Data complexity and quality issues

Data integration grows increasingly complex as organizations must combine data from diverse sources, each with unique formats, structures, and quality levels. The measurement of data quality revolves around its fitness for purpose, but this becomes complicated as the same data may serve different functions across contexts. Quality dimensions include accuracy, timeliness, completeness, and consistency—all challenging to maintain as data volume increases.

Entry errors, variations in data formats, and outdated or missing information frequently compromise data quality. When data quality is compromised, business decisions may be based on inaccurate, incomplete, or duplicate data, leading to false insights and poor decision-making. This risk increases exponentially when integrating data from multiple sources that follow different rules and formats.

Establishing clear data standards across the organization provides a framework for consistent data definitions and formats. This standardization simplifies integration efforts and reduces the likelihood of inconsistencies. Automated data profiling helps identify quality issues early, allowing teams to address problems before they impact downstream processes.

Most importantly, data quality should be treated as an ongoing process built into integration workflows rather than a separate activity. Implementing proactive validation methods that check for errors immediately after data collection but before integration prevents poor-quality data from entering systems, ensuring reliable and accurate integrated data for business operations.

Technical and process bottlenecks

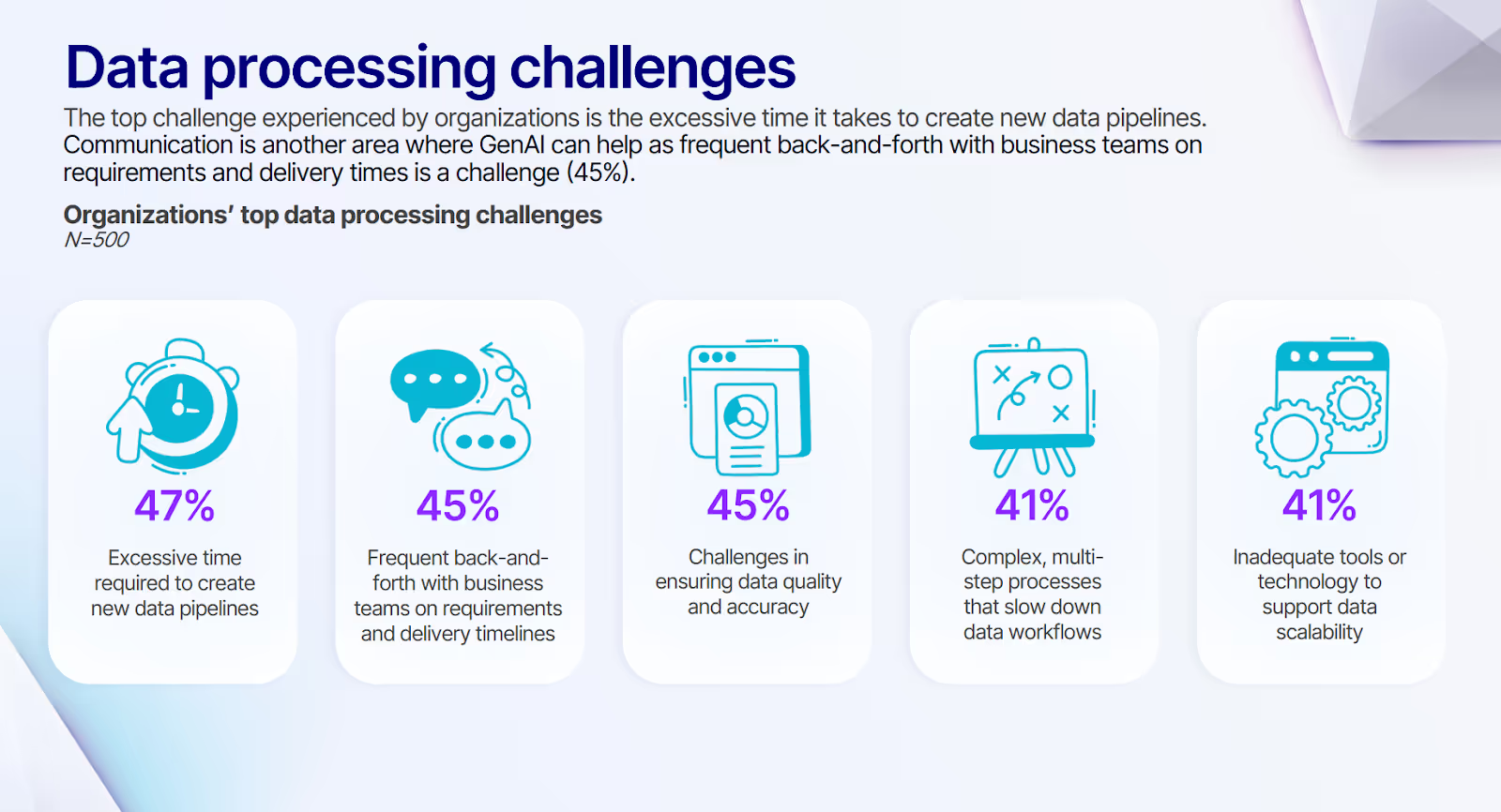

As organizations face increasing data volume and velocity, this significant time investment hinders growth and slows innovation rates. Siloed development approaches create disconnects between technical implementation and business requirements, often resulting in solutions that don't fully address business needs.

Many organizations struggle to clearly define which data needs to be collected and analyzed, its sources, intended systems, analysis types, and update frequencies. This lack of clarity overwhelms IT teams and leads to inefficient integration processes that fail to deliver timely insights to decision-makers.

Modern approaches like self-service integration platforms bridge the gap between technical and business teams, reducing IT strain. These tools, combined with efforts in enhancing data literacy, enable business users to explore, manipulate, and merge new data sources independently without IT assistance, accelerating time-to-insight while freeing technical resources for more complex tasks.

Also, a modular, metadata-driven approach to integration design replaces monolithic processes with reusable components that adapt quickly to changing requirements. Organizations that implement these streamlined processes significantly reduce development cycles, improve responsiveness to business changes, and enhance collaboration between technical and business stakeholders.

Governance and security concerns

Maintaining data lineage—tracking where data originated and how it has been transformed—becomes increasingly difficult when integrating diverse systems. Without proper lineage, organizations struggle to validate data accuracy, understand its context, and demonstrate regulatory compliance.

Traditional approaches often treat governance as an afterthought, implementing controls only after integration is complete. This reactive approach leads to compliance gaps and undermines data trustworthiness, as governance requirements may conflict with existing integration designs. The result is either compromised governance or costly rework.

Security concerns intensify with data integration, as combined datasets often reveal more sensitive information than individual sources.

Inconsistent access controls across source systems may allow unauthorized access to sensitive data, while integration processes themselves can create new security vulnerabilities if not properly secured throughout the data pipeline.

Akram Chetibi, Director of Product Management at Databricks, emphasizes how modern data integration must balance flexibility with governance while supporting increasingly complex data types and sources. As organizations build their data integration strategies, this balance becomes critical for enabling both innovation and compliance across the data lifecycle.

Modern approaches embed governance into the integration process through automated lineage tracking, role-based access controls, and built-in compliance checks. This integration enables organizations to manage data access consistently across sources and transformations, ensuring appropriate controls at every stage of the data lifecycle.

Implementing effective data governance practices not only ensures compliance but also enhances data trustworthiness across the organization.

Effective governance doesn't impede agility—it enables it by providing a framework for responsible data use. Organizations with robust governance frameworks can confidently integrate new data sources, knowing that compliance requirements are systematically addressed.

Scalability and performance issues

As data volumes grow and integration requirements expand, organizations face significant scalability challenges.

Traditional integration approaches often struggle to handle increasing data volumes, leading to extended processing times that delay critical business insights. When integration performance degrades, organizations experience greater time lags, incomplete data synchronization, and potentially compromised data quality.

According to our survey, 41% of organizations cite inadequate tools or technology as their primary barrier to supporting data scalability. This technology gap creates significant bottlenecks as data volumes continue to grow exponentially, preventing businesses from fully leveraging their data assets for competitive advantage.

Several factors impact integration performance, including processing time, system response, database structure, data volume, and data distinctness.

When these elements aren't optimized for scale, organizations face increased expenditures and delays in real-time synchronization that affect applications reliant on timely data. Legacy systems particularly struggle with modern data integration loads, creating bottlenecks that impact the entire integration ecosystem.

Modern approaches address these scalability challenges through distributed processing architectures, cloud-based resources with elastic scaling capabilities, and intelligent data partitioning strategies.

Organizations interested in scaling ETL pipelines can implement efficient data processing algorithms to accelerate extraction, transformation, and loading processes while minimizing resource requirements.

How modern tools approach data integration

The data integration landscape has evolved significantly from traditional SQL-based scripting to comprehensive platforms designed for today's complex data environments, embracing modern data pipeline architecture best practices.

So, what are the characteristics of a modern data integration tool?

- Scalable, Cloud-Native Architecture: Modern integration tools leverage distributed processing and elastic scaling to efficiently handle growing data volumes without performance degradation. Tools that embrace cloud-native data engineering principles enable organizations to build scalable, flexible, and efficient data pipelines that leverage the full power of cloud platforms.

- Scalable, Cloud-Native Architecture: Modern integration tools leverage distributed processing and elastic scaling to efficiently handle growing data volumes without performance degradation. This cloud-native approach allows organizations to pay only for resources they use while automatically adjusting to workload demands, significantly reducing operational costs compared to traditional fixed-capacity systems.

- Support for Diverse Data Types: Effective modern tools handle structured, semi-structured, and unstructured data from various sources without requiring separate processing pipelines. This versatility is crucial as organizations increasingly rely on combining traditional database information with newer sources like IoT devices, social media feeds, and streaming data for comprehensive analytics.

- Real-Time Processing Support: Modern tools provide capabilities for processing data as it's generated rather than in periodic batches. This real-time integration is essential for time-sensitive applications like fraud detection, customer experience personalization, and operational intelligence, where immediate insights directly impact business outcomes.

Databricks' Delta Lake technology exemplifies this modern approach by providing an open-source storage layer that brings reliability to data lakes. This capability is particularly crucial for data integration workflows, as it enables ACID transactions, schema enforcement, and time travel (data versioning) on top of existing data lakes.

For organizations integrating data from multiple sources, these features ensure data consistency and quality throughout the integration process while maintaining the flexibility to handle both batch and streaming data. Databricks' integration with Apache Spark also provides a distributed processing framework ideal for large-scale data transformation and integration workloads.

Enabling governed self-service for integrated data

While modern data platforms like Databricks have made significant strides in addressing core data integration challenges, a critical gap remains between having integrated data and enabling business users to generate insights independently.

Technical complexity often creates bottlenecks that prevent analysts and business teams from accessing and transforming data without specialized expertise or engineering support. Traditional approaches force organizations to choose between restrictive access that creates IT backlogs or ungoverned self-service that introduces security and quality risks.

Prophecy bridges this gap by enabling governed self-service for integrated data, empowering business analysts to prepare and analyze information while maintaining enterprise-grade controls.

Prophecy's governed self-service approach delivers five key capabilities:

- Intuitive visual interface: Business analysts can build and modify data transformation pipelines through an intuitive drag-and-drop experience without writing complex code, making data preparation accessible regardless of technical background.

- Expanded connectivity: Easily bring in data from sources analysts use daily—from Excel and CSV files to SFTP and SharePoint—and seamlessly combine it with enterprise data from Databricks.

- AI-powered assistance: Built-in intelligence helps users understand complex data relationships and suggests optimal transformation approaches, accelerating development while maintaining best practices.

- Enterprise governance: Centralized controls for security, cost management, and quality standards ensure compliance without hampering business agility, maintaining full visibility for data platform teams.

- End-to-end workflows: Business users can create complete pipelines from data loading through transformation to reporting, all within a single unified environment.

To eliminate data access bottlenecks that create IT backlogs and delay business insights, explore How to Assess and Improve Your Data Integration Maturity to empower business users with governed access that maintains enterprise controls.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.