Stop Creating Bottlenecks and Build Data Workflows That Drive Business Growth

Learn how strategic data workflows drive real business growth instead of creating bottlenecks.

Modern businesses generate massive amounts of data, but most struggle to transform this information into a strategic advantage. The disconnect isn't about data volume or storage capacity—it's about workflow design.

Organizations that build strategic data workflows create competitive moats through faster decision-making, operational efficiency, and innovation capabilities. Yet most workflows remain trapped in technical complexity, delivering minimal business impact despite significant investment.

Let’s explore how to design, implement, and optimize data workflows that directly drive business growth and competitive positioning.

What is a data workflow?

A data workflow is a structured sequence of operations that moves, transforms, and processes data to achieve specific business objectives. Unlike simple data pipelines that focus on technical data movement, strategic workflows connect data processing directly to business outcomes like revenue optimization, customer experience improvement, or operational efficiency gains.

Strategic data workflows combine automation, governance, and business logic to create repeatable processes that deliver consistent value. They encompass everything from initial data collection through transformation, analysis, and action, creating an end-to-end system that turns raw information into a competitive advantage.

The most effective workflows bridge the gap between technical capabilities and business needs, enabling domain experts to contribute directly while maintaining enterprise-grade governance and performance. This balance transforms workflows from technical utilities into strategic business assets.

Components in a data workflow process

Effective data workflows are built from several components that work together to ensure smooth data processing, analysis, and insight generation:

- Data sources: The foundation begins with identifying and integrating relevant data sources, including CRM systems, ERP platforms, IoT devices, social media, transaction systems, and external providers. These sources include both structured and unstructured data.

- Data integration: Data integration combines information from different sources into a unified view, which means reconciling different data formats and resolving conflicting information.

- Data transformation: Raw data must be converted into structured formats suitable for analysis through processes like normalization, aggregation, and enrichment. These transformations are the core of the "transform" step in ETL and can be performed with tools like Spark SQL and Databricks.

- Data cleansing: Maintaining data integrity involves identifying and correcting inaccuracies, removing duplicates, filling in missing values, and standardizing formats. Robust data cleansing processes are essential for data-driven decision-making.

- Data orchestration: Orchestration tools coordinate the interplay between workflow components, ensuring each one executes in the correct sequence and at the right time. Some teams use a separate tool for orchestration, while others prefer an integrated platform like Prophecy, which includes orchestration capabilities and so removes the need to manage multiple systems.

- Data analysis: Once data is cleaned, transformed, and integrated, it's ready for analysis: applying statistical methods and machine learning algorithms, creating visualizations, and generating reports. Where analysis takes place depends on the team using the data. Some teams prefer to download a CSV from their data platform or data warehouse and work in familiar tools, while others send data directly to specialized data visualization tools or business intelligence platforms.
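The following minimal sketch in plain Python shows how these components hand off to each other. The sample data, step names, and dependency map are hypothetical, and in this sketch cleansing runs before integration. A production workflow would rely on an orchestrator and a processing engine such as Spark rather than in-memory lists. The sketch uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to run each step only after the steps it depends on have finished.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical sample data standing in for two sources, e.g. a CRM and a billing system.
CRM_ROWS = [
    {"customer_id": "C1", "email": " Alice@Example.com ", "region": "west"},
    {"customer_id": "C2", "email": "bob@example.com", "region": None},
    {"customer_id": "C2", "email": "bob@example.com", "region": None},  # duplicate record
]
BILLING_ROWS = [
    {"customer_id": "C1", "amount": 120.0},
    {"customer_id": "C2", "amount": 80.0},
    {"customer_id": "C1", "amount": 30.0},
]

def cleanse(ctx):
    """Cleansing: remove duplicates, standardize emails, fill missing regions."""
    seen, clean = set(), []
    for row in CRM_ROWS:
        if row["customer_id"] in seen:
            continue  # keep only the first record per customer
        seen.add(row["customer_id"])
        clean.append({
            "customer_id": row["customer_id"],
            "email": row["email"].strip().lower(),
            "region": row["region"] or "unknown",
        })
    ctx["customers"] = clean

def integrate(ctx):
    """Integration: combine CRM and billing data into one view keyed by customer_id."""
    spend = {}
    for row in BILLING_ROWS:
        spend[row["customer_id"]] = spend.get(row["customer_id"], 0.0) + row["amount"]
    ctx["unified"] = [{**c, "total_spend": spend.get(c["customer_id"], 0.0)} for c in ctx["customers"]]

def transform(ctx):
    """Transformation: aggregate total spend per region, ready for analysis."""
    by_region = {}
    for row in ctx["unified"]:
        by_region[row["region"]] = by_region.get(row["region"], 0.0) + row["total_spend"]
    ctx["spend_by_region"] = by_region

STEPS = {"cleanse": cleanse, "integrate": integrate, "transform": transform}
# Each step maps to the set of steps that must finish before it can run.
DEPENDENCIES = {"integrate": {"cleanse"}, "transform": {"integrate"}}

def run_workflow():
    """Minimal orchestration: execute steps in dependency order, sharing a context dict."""
    ctx, order = {}, list(TopologicalSorter(DEPENDENCIES).static_order())
    for name in order:
        STEPS[name](ctx)
    ctx["execution_order"] = order
    return ctx

if __name__ == "__main__":
    result = run_workflow()
    print(result["execution_order"])  # ['cleanse', 'integrate', 'transform']
    print(result["spend_by_region"])  # {'west': 150.0, 'unknown': 80.0}
```

Declaring dependencies rather than hard-coding the call order is what lets an orchestrator add, reorder, or parallelize steps without rewriting the steps themselves.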

Benefits of strategic data workflows

Strategic data workflows deliver tangible business advantages that extend far beyond operational efficiency:

- Accelerated decision-making speed: Workflows automate the data preparation and analysis processes that traditionally create bottlenecks, enabling real-time insights that keep pace with business needs.

- Operational cost optimization: By automating repetitive data tasks through ETL modernization and eliminating manual handoffs, workflows reduce the resource burden on technical teams while improving data quality and consistency. This efficiency creates capacity for higher-value strategic initiatives.

- Competitive intelligence acceleration: Strategic workflows enable continuous monitoring of market conditions, competitor activities, and customer behavior patterns, creating early-warning systems that inform proactive business strategy rather than reactive responses.

- Revenue growth enablement: Workflows that connect customer data, sales performance, and market insights create feedback loops that optimize pricing, inventory, and marketing investments, directly contributing to revenue growth and customer lifetime value improvement.

Four types of data workflows

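Data workflows generally follow one of four processing patterns, each suited to different latency, volume, and cost requirements. Whichever pattern you choose, an effective workflow balances technical accuracy with business comprehensibility so that both technical implementers and business stakeholders can understand its structure and dependencies.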

Real-time streaming workflows

Real-time workflows process data continuously as it arrives, enabling immediate responses to changing business conditions. These workflows excel in scenarios requiring instant decision-making, such as fraud detection, dynamic pricing, or operational monitoring.

The technical complexity of streaming workflows often limits their adoption, but modern platforms have simplified implementation through visual interfaces and pre-built components. Organizations typically start with high-impact use cases like customer experience personalization or security monitoring before expanding to broader operational applications.

Success with streaming workflows requires careful consideration of data volume, processing complexity, and infrastructure costs. The business value must justify the additional technical overhead compared to batch processing alternatives.
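A streaming workflow handles each event as it arrives and keeps only a small amount of state between events. The sketch below illustrates this with a toy fraud-detection rule. The function name, the "3x the running average" rule, and the `min_history` threshold are hypothetical. A Python list stands in for a real event stream such as a message-queue consumer.

```python
from collections import defaultdict
from typing import Iterable, Iterator

def flag_suspicious(events: Iterable[dict], multiplier: float = 3.0, min_history: int = 3) -> Iterator[dict]:
    """Yield an alert when a transaction far exceeds the account's running average.

    The rule and thresholds are illustrative assumptions, not a recommended fraud model.
    """
    count = defaultdict(int)    # events seen per account
    total = defaultdict(float)  # running sum of amounts per account
    for event in events:        # in production this would be a stream consumer, not a list
        acct, amount = event["account"], event["amount"]
        if count[acct] >= min_history:
            avg = total[acct] / count[acct]
            if amount > multiplier * avg:
                yield {"account": acct, "amount": amount, "running_avg": round(avg, 2)}
        # Update state after the check so the current event doesn't skew its own baseline.
        count[acct] += 1
        total[acct] += amount

if __name__ == "__main__":
    stream = [
        {"account": "A", "amount": 20.0},
        {"account": "A", "amount": 25.0},
        {"account": "A", "amount": 30.0},
        {"account": "A", "amount": 400.0},  # far above A's running average of 25.0
        {"account": "B", "amount": 50.0},
    ]
    for alert in flag_suspicious(stream):
        print(alert)  # {'account': 'A', 'amount': 400.0, 'running_avg': 25.0}
```

The function is a generator, so it emits each alert as soon as the triggering event is processed rather than after the whole dataset has been read. That property is what separates streaming from batch processing.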

Batch processing workflows

Batch workflows process data at scheduled intervals, making them ideal for comprehensive analysis, reporting, and data integration tasks. They offer superior cost-efficiency for large data volumes and complex transformations that don't require immediate results.

Most organizations rely heavily on batch workflows for essential business processes like financial reporting, customer segmentation, and performance analytics. The predictable resource requirements and simplified error handling make batch processing the foundation of enterprise data operations.

Modern batch workflows benefit from cloud elasticity, automatically scaling processing resources based on data volume and complexity. This scalability enables organizations to handle peak loads without over-provisioning infrastructure for average conditions.
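A batch job typically runs on a schedule, processes everything that accumulated for one period, and then exits. The minimal sketch below shows that shape. `run_daily_batch`, the order schema, and the chunk size are hypothetical. In practice a scheduler, such as cron or the orchestrator in your data platform, would invoke the job once per period, and an engine like Spark would handle parallelism and scaling.

```python
from collections import defaultdict
from datetime import date
from itertools import islice
from typing import Iterable, Iterator, List

def chunked(rows: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Yield fixed-size chunks so a large input never has to fit in memory at once."""
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk

def run_daily_batch(rows: Iterable[dict], run_date: date, chunk_size: int = 1000) -> dict:
    """Hypothetical reporting job: aggregate one day's orders into revenue per region."""
    revenue = defaultdict(float)
    for chunk in chunked(rows, chunk_size):
        for row in chunk:
            if row["order_date"] == run_date:  # only the partition this run is responsible for
                revenue[row["region"]] += row["amount"]
    return dict(revenue)

if __name__ == "__main__":
    orders = [
        {"order_date": date(2024, 3, 1), "region": "east", "amount": 100.0},
        {"order_date": date(2024, 3, 1), "region": "west", "amount": 40.0},
        {"order_date": date(2024, 3, 1), "region": "east", "amount": 60.0},
        {"order_date": date(2024, 3, 2), "region": "east", "amount": 999.0},  # next day's run
    ]
    print(run_daily_batch(orders, date(2024, 3, 1), chunk_size=2))  # {'east': 160.0, 'west': 40.0}
```

Each run touches only one date's partition and has no side effects, so a failed run can simply be re-executed for that date. This is one reason error handling is simpler in batch workflows.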

Event-driven workflows

Event-driven workflows trigger automatically based on specific business events or data conditions, creating responsive systems that adapt to changing circumstances. They bridge real-time and batch processing: they respond immediately when an important event occurs, then run a complete processing job in response rather than handling every record continuously.

Common implementations include customer lifecycle management, inventory replenishment, and compliance monitoring. Event-driven workflows excel at orchestrating complex business processes that span multiple systems and require coordinated responses.

The key to successful event-driven workflows lies in defining meaningful business events and ensuring reliable event detection and processing. Organizations must balance responsiveness with processing efficiency to avoid overwhelming downstream systems.
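At its core, an event-driven workflow maps well-defined business events to the actions they trigger. The minimal in-process sketch below uses inventory replenishment as the example. The event name, handler functions, and thresholds are hypothetical. A real system would typically publish events to a message broker instead of calling handlers directly.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], str]
HANDLERS: Dict[str, List[Handler]] = {}  # hypothetical in-memory registry: event type -> handlers

def on(event_type: str):
    """Decorator that registers a handler function for a named business event."""
    def register(fn: Handler) -> Handler:
        HANDLERS.setdefault(event_type, []).append(fn)
        return fn
    return register

def emit(event_type: str, payload: dict) -> List[str]:
    """Dispatch an event to every handler registered for it; unknown events do nothing."""
    return [handler(payload) for handler in HANDLERS.get(event_type, [])]

@on("inventory.below_reorder_point")
def create_purchase_order(payload: dict) -> str:
    return f"PO for {payload['reorder_qty']} x {payload['sku']}"

@on("inventory.below_reorder_point")
def notify_planner(payload: dict) -> str:
    return f"notified planner about {payload['sku']}"

def check_stock(sku: str, on_hand: int, reorder_point: int, reorder_qty: int) -> List[str]:
    """Event detection: emit only when stock falls below the reorder point."""
    if on_hand < reorder_point:
        return emit("inventory.below_reorder_point", {"sku": sku, "reorder_qty": reorder_qty})
    return []

if __name__ == "__main__":
    print(check_stock("SKU-42", on_hand=5, reorder_point=10, reorder_qty=50))
    # ['PO for 50 x SKU-42', 'notified planner about SKU-42']
    print(check_stock("SKU-7", on_hand=30, reorder_point=10, reorder_qty=50))
    # []
```

Event detection (`check_stock`) is kept separate from the handlers. You can add a new response to an existing event without touching the detection logic, and no work runs at all when no event fires.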

Hybrid workflow architectures

Hybrid workflows combine multiple processing patterns to optimize for different business requirements within a single system. They might use streaming for real-time monitoring, batch for comprehensive analysis, and event-driven triggers for specific business actions.

This architectural flexibility enables organizations to optimize each workflow component for its specific requirements while maintaining overall system coherence. Hybrid approaches reduce complexity compared to managing separate workflow systems for different processing patterns.

Designing hybrid workflows often requires careful consideration of data storage, and comparing the available storage options can help organizations select infrastructure that supports their data strategy.

The challenge with hybrid architectures lies in maintaining consistency and governance across different processing models. Success requires unified monitoring, security, and data quality management across all workflow components.
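One practical way to keep data quality consistent across processing models is to define each rule once and call it from every path. The sketch below is a minimal, hypothetical illustration. The `validate` rule, the field names, and the shared quarantine list are assumptions and do not represent any specific platform's API.

```python
from typing import Iterable, List, Tuple

# Hypothetical shared definition of a "valid order", used by every processing path.
REQUIRED_FIELDS = ("order_id", "amount")

def validate(record: dict) -> Tuple[bool, str]:
    """Single source of truth for the data quality rule."""
    for field in REQUIRED_FIELDS:
        if record.get(field) is None:
            return False, f"missing {field}"
    if record["amount"] < 0:
        return False, "negative amount"
    return True, ""

def check_and_quarantine(record: dict, quarantine: List[dict]) -> bool:
    """Apply the shared rule; failing records go to one shared quarantine for review."""
    ok, reason = validate(record)
    if not ok:
        quarantine.append({**record, "reason": reason})
    return ok

def on_stream_event(record: dict, quarantine: List[dict]) -> bool:
    """Streaming path: validate each record the moment it arrives."""
    return check_and_quarantine(record, quarantine)

def run_batch(records: Iterable[dict], quarantine: List[dict]) -> List[dict]:
    """Batch path: validate a full period's records with the identical rule."""
    return [r for r in records if check_and_quarantine(r, quarantine)]

if __name__ == "__main__":
    quarantine: List[dict] = []
    print(on_stream_event({"order_id": 1, "amount": 10.0}, quarantine))  # True
    print(on_stream_event({"order_id": 2, "amount": -5.0}, quarantine))  # False
    batch = [{"order_id": 3, "amount": 7.5}, {"order_id": None, "amount": 1.0}]
    print(run_batch(batch, quarantine))  # [{'order_id': 3, 'amount': 7.5}]
    print([q["reason"] for q in quarantine])  # ['negative amount', 'missing order_id']
```

Both paths share one rule and one quarantine, so a change to the definition of "valid" takes effect in streaming and batch processing at the same time.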

How data workflow challenges prevent business value delivery

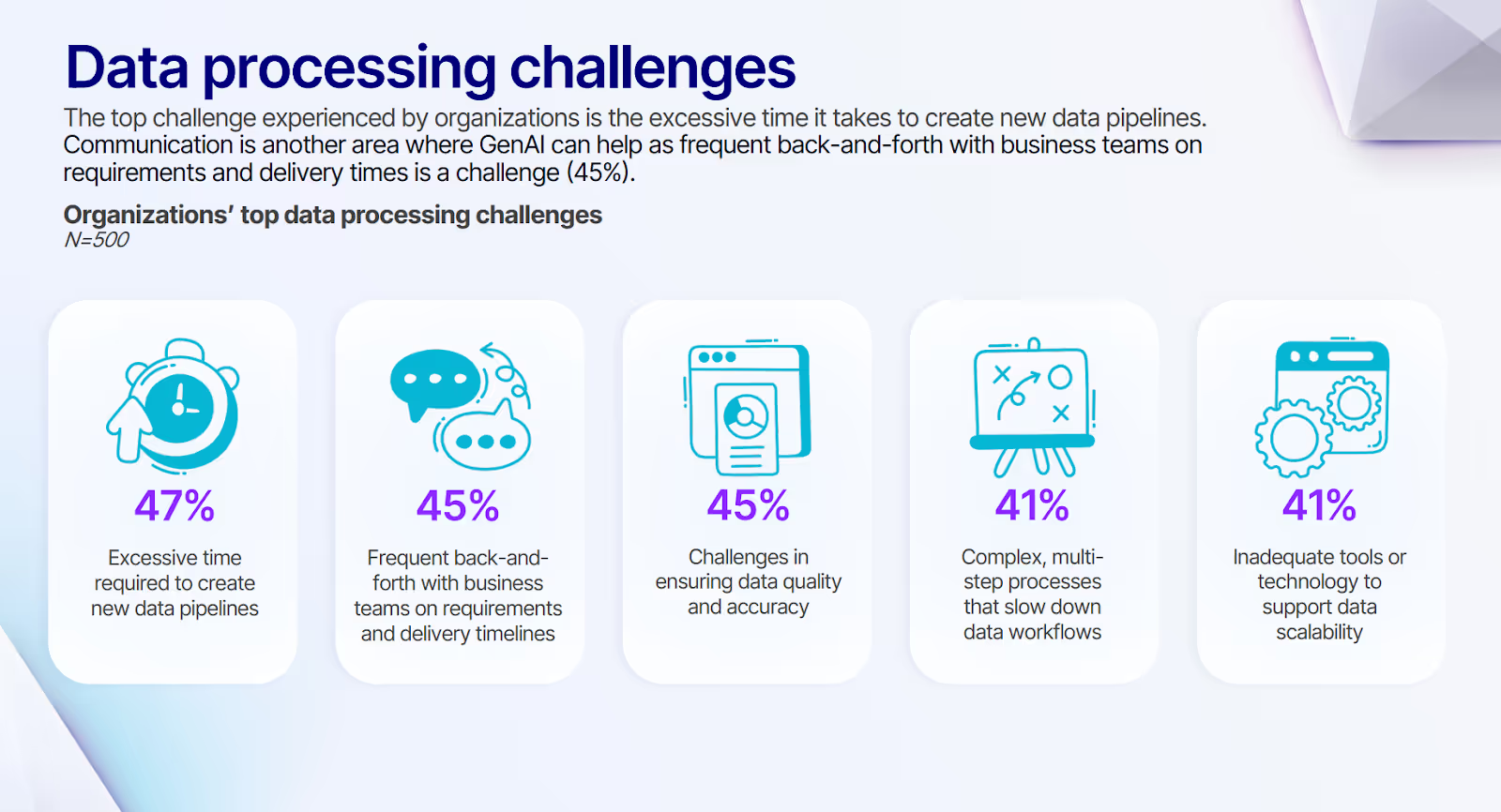

The majority of workflow failures stem from unnecessary complexity that turns workflows from business enablers into operational bottlenecks. As our survey found, organizations often build workflows that require extensive technical expertise to operate and maintain, which limits their adoption and business impact.

This complexity manifests in several ways: workflows that require manual intervention at multiple steps, processes that break frequently due to fragile dependencies, and systems that demand specialized knowledge to modify or extend.

When this complexity overwhelms technical teams, work becomes blocked and backlogged: request queues grow, business users become frustrated, and decision-making slows.

Business teams submit workflow requests to overloaded engineering teams, then wait weeks or months for implementation while opportunities disappear.

Technical-business disconnect undermines strategic value

Many workflows fail because they optimize for technical efficiency rather than business outcomes, creating sophisticated data processing systems that don't address real business needs. This disconnect occurs when technical teams build workflows in isolation from business stakeholders who understand the intended use cases.

The communication gap between business requirements and technical implementation leads to workflows that process data correctly but don't support actual decision-making processes. Business users receive technically accurate results that don't match their workflow needs or decision-making timelines.

This problem intensifies when organizations maintain separate tools and processes for business and technical teams, preventing the collaboration necessary for strategic workflow development. Business users resort to manual workarounds while technical teams build sophisticated solutions that go unused.

Scattered tools, incoherent results

Organizations often build workflow ecosystems piecemeal, adding specialized tools for different workflow components without considering overall system coherence. This fragmentation creates compounding complexity, as each tool introduces its own interfaces, security models, and maintenance requirements.

The "seven different systems" syndrome emerges when organizations struggle to manage multiple disconnected workflow platforms, each optimized for specific use cases but none forming a cohesive business solution. Teams spend more time managing tool integration than creating business value.

This fragmentation becomes particularly problematic as workflows scale across multiple business units and use cases. Different teams adopt different tools for similar workflow requirements, creating duplicated effort and inconsistent results that undermine organizational decision-making.

Governance gaps create compliance and quality risks

Many workflow implementations treat governance as an afterthought, applying security and compliance controls after workflows are built rather than embedding them into the development process. This retrofitting approach creates vulnerabilities and often requires extensive rework when governance requirements change.

Without embedded governance, workflows can become sources of compliance risk rather than business enablers. Data quality issues propagate through ungoverned workflows, creating downstream problems that undermine trust in workflow outputs and limit business adoption.

When organizations open workflow development to business users without embedded governance, those users gain the ability to create workflows but lack the guardrails needed to ensure consistent quality, security, and compliance.

Four key principles for building governed and strategic data workflows

Creating workflows that deliver sustained business value requires balancing accessibility with governance, enabling innovation while maintaining enterprise standards. These principles guide organizations toward workflow implementations that scale effectively across business units and use cases.

While some of these approaches might seem unattainable, modern platforms like Prophecy are specifically built with these principles in mind, making strategic workflow development achievable for organizations of any size.

Build reusable workflow components

Reusable components accelerate workflow development while ensuring consistency and quality across different business units and use cases. These standardized building blocks capture organizational knowledge and best practices in forms that can be easily shared and maintained.

Develop component libraries that address common workflow patterns like data validation, transformation, and notification, enabling teams to focus on business-specific logic rather than rebuilding fundamental capabilities. This approach reduces development time while improving workflow quality and maintainability.

Establish governance processes for component development and maintenance that ensure reusable elements meet enterprise standards for security, performance, and documentation. This investment in component quality pays dividends across all workflows that leverage shared capabilities.

Create mechanisms for sharing components across business units and teams, enabling organizational learning and preventing duplicated effort. Successful component strategies include searchable catalogs, usage examples, and feedback mechanisms that guide ongoing improvement and development.

Modern data integration platforms like Prophecy make component reusability practical through features like Package Builder and Package Hub, which enable organizations to create, share, and maintain standardized workflow components across teams.

This approach transforms workflow development from custom coding exercises into the assembly of proven, enterprise-grade components that maintain consistency while accelerating delivery.
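In code, a reusable component is often a parameterized function that many workflows can configure and combine. The sketch below shows the idea with two generic validation components. `not_null`, `in_range`, `validate_rows`, and the team-specific check lists are hypothetical examples. They do not represent Prophecy's Package Builder format.

```python
from typing import Callable, Dict, List

Check = Callable[[dict], List[str]]  # returns a list of problems; empty if the row passes

def not_null(*columns: str) -> Check:
    """Reusable component: flag any of the given columns that are missing or None."""
    def check(row: dict) -> List[str]:
        return [f"{c} is null" for c in columns if row.get(c) is None]
    return check

def in_range(column: str, low: float, high: float) -> Check:
    """Reusable component: flag values outside the inclusive range [low, high]."""
    def check(row: dict) -> List[str]:
        value = row.get(column)
        if value is not None and not (low <= value <= high):
            return [f"{column}={value} outside [{low}, {high}]"]
        return []
    return check

def validate_rows(rows: List[dict], checks: List[Check]) -> Dict[int, List[str]]:
    """Run every check on every row; return problems keyed by row index."""
    problems = {}
    for i, row in enumerate(rows):
        issues = [msg for check in checks for msg in check(row)]
        if issues:
            problems[i] = issues
    return problems

# Two hypothetical teams assemble their own validation from the same shared components.
FINANCE_CHECKS = [not_null("invoice_id", "amount"), in_range("amount", 0, 1_000_000)]
MARKETING_CHECKS = [not_null("campaign_id"), in_range("discount_pct", 0, 100)]

if __name__ == "__main__":
    invoices = [{"invoice_id": "I1", "amount": 250.0}, {"invoice_id": None, "amount": -3.0}]
    print(validate_rows(invoices, FINANCE_CHECKS))
    # {1: ['invoice_id is null', 'amount=-3.0 outside [0, 1000000]']}
```

Each team configures only parameters. A fix or improvement to a shared component such as `in_range` therefore reaches every workflow that uses it, which delivers the consistency benefit described above.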

Design for business outcomes, not just data movement

Strategic workflows begin with clear business objectives rather than available data or technical capabilities. This outcome-focused approach ensures workflows solve real business problems and deliver measurable value that justifies ongoing investment and resources.

Start each workflow project by defining the specific business decisions or processes it will support, then work backward to identify required data sources and transformations. This approach prevents the common scenario where technically successful workflows fail to impact business performance.

Involve business stakeholders throughout workflow development to ensure the final solution matches actual decision-making needs and organizational processes. This collaboration creates workflows that integrate naturally into existing business processes rather than requiring users to adapt their workflows to match technical implementations.

Measure workflow success through business metrics like revenue impact, cost reduction, or customer satisfaction improvement rather than just technical performance indicators. This focus on business outcomes guides optimization efforts and demonstrates ongoing value to organizational leadership.

Platforms designed for governed self-service data preparation support this business-outcome focus by providing intuitive interfaces that let domain experts participate directly in workflow creation while maintaining enterprise governance standards.

Build bridges between visual and code environments

The traditional divide between visual workflow tools for business users and code-based approaches for technical teams creates artificial barriers that limit collaboration and workflow sophistication. Modern strategic workflows require environments where both audiences can contribute effectively.

Implement intuitive platforms that provide visual interfaces for business users while generating high-quality code that technical teams can review, modify, and extend. This bidirectional approach enables rapid prototyping by business users and professional-grade optimization by technical teams within the same workflow system.

Create shared development environments where business domain experts and technical specialists can collaborate on the same workflow assets, eliminating the translation errors and delays that occur when these teams work separately. This collaboration improves both business relevance and technical quality.

Establish governance frameworks that maintain code quality and security standards regardless of whether workflows are created visually or through direct coding. This consistency enables broader participation in workflow development without compromising enterprise requirements.
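This bridge works when the visual layer and the code are two views of the same definition. The sketch below shows the principle in miniature. A declarative spec, of the kind a drag-and-drop editor might store, is compiled into readable SQL that an engineer can review. The spec format and the `to_sql` function are hypothetical and do not describe how Prophecy or any particular tool represents pipelines.

```python
def to_sql(spec: dict) -> str:
    """Compile a hypothetical declarative pipeline spec into a SQL query.

    The spec holds a source table, an optional filter, optional grouping columns,
    and {column: aggregate_function} pairs. A real tool would also validate
    identifiers and filter expressions rather than trusting raw strings.
    """
    allowed = {"sum", "avg", "min", "max", "count"}
    group_by = spec.get("group_by", [])
    select = list(group_by)
    for column, func in spec["aggregates"].items():
        if func not in allowed:
            raise ValueError(f"unsupported aggregate: {func}")
        select.append(f"{func.upper()}({column}) AS {column}_{func}")
    sql = f"SELECT {', '.join(select)} FROM {spec['source']}"
    if spec.get("filter"):
        sql += f" WHERE {spec['filter']}"
    if group_by:
        sql += f" GROUP BY {', '.join(group_by)}"
    return sql

if __name__ == "__main__":
    spec = {
        "source": "orders",
        "filter": "amount > 0",
        "group_by": ["region"],
        "aggregates": {"amount": "sum"},
    }
    print(to_sql(spec))
    # SELECT region, SUM(amount) AS amount_sum FROM orders WHERE amount > 0 GROUP BY region
```

The generated SQL is plain text, so it can pass through the same code review, version control, and governance checks as hand-written code, whichever way the workflow was authored.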

Create unified control planes for governance

Effective workflow governance requires unified visibility and control across all workflow activities rather than managing governance through separate systems for different workflow types or business units. This unified approach reduces complexity while strengthening compliance and security.

Implement comprehensive monitoring that tracks both technical performance and business impact metrics, providing early warning of issues that could affect workflow reliability or business value. This dual perspective enables proactive optimization and demonstrates ongoing ROI to business stakeholders.

Establish automated quality gates that validate data quality, security compliance, and performance standards throughout workflow execution rather than applying checks only at specific points. This continuous validation approach prevents issues from propagating through workflow systems and affecting business outcomes.

Create centralized governance policies that apply consistently across all workflow development and execution, while allowing flexibility for different business requirements. This balance maintains enterprise standards while enabling innovation and experimentation by business teams.
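A quality gate is a check that runs between stages and stops execution when data falls outside agreed thresholds. The minimal sketch below applies a gate after every stage. The `quality_gate` function, the stage functions, the thresholds, and the `customer_id` column are hypothetical choices for illustration.

```python
from typing import Callable, List

class QualityGateError(Exception):
    """Raised to stop the workflow before bad data reaches the next stage."""

def quality_gate(rows: List[dict], *, min_rows: int, max_null_rate: float, column: str) -> List[dict]:
    """Hypothetical gate: enforce a minimum row count and a maximum null rate for one column."""
    if len(rows) < min_rows:
        raise QualityGateError(f"expected at least {min_rows} rows, got {len(rows)}")
    nulls = sum(1 for r in rows if r.get(column) is None)
    null_rate = nulls / len(rows) if rows else 0.0
    if null_rate > max_null_rate:
        raise QualityGateError(f"{column} null rate {null_rate:.0%} exceeds {max_null_rate:.0%}")
    return rows

def run_pipeline(rows: List[dict], stages: List[Callable[[List[dict]], List[dict]]]) -> List[dict]:
    """Run each stage, then gate its output before the next stage can see it."""
    for stage in stages:
        rows = stage(rows)
        quality_gate(rows, min_rows=1, max_null_rate=0.1, column="customer_id")  # illustrative thresholds
    return rows

def drop_refunds(rows: List[dict]) -> List[dict]:
    return [r for r in rows if r["amount"] >= 0]

def add_tier(rows: List[dict]) -> List[dict]:
    return [{**r, "tier": "gold" if r["amount"] >= 100 else "standard"} for r in rows]

if __name__ == "__main__":
    good = [{"customer_id": "C1", "amount": 150.0}, {"customer_id": "C2", "amount": 20.0}]
    print(run_pipeline(good, [drop_refunds, add_tier]))
    bad = [{"customer_id": None, "amount": 50.0}, {"customer_id": "C3", "amount": 10.0}]
    try:
        run_pipeline(bad, [drop_refunds, add_tier])
    except QualityGateError as err:
        print("halted:", err)  # halted: customer_id null rate 50% exceeds 10%
```

The gate runs after each stage, so the second run stops before `add_tier` ever sees the bad data. This is the "prevent issues from propagating" behavior described above.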

Enable self-service in workflows without sacrificing standards

Organizations need environments where domain experts can contribute directly to workflow development while maintaining the quality, security, and performance standards that enterprise operations require.

Here's how Prophecy enables this transformation through its comprehensive data integration platform:

- Visual workflow development with enterprise-grade outputs: Enable business users to create sophisticated workflows through intuitive drag-and-drop interfaces while automatically generating high-quality code that meets enterprise standards for performance, security, and maintainability.

- Unified business-technical collaboration: Bridge the divide between business domain expertise and technical implementation through shared development environments where all stakeholders can contribute effectively to workflow design and optimization.

- Governed self-service capabilities: Provide business teams with direct access to workflow development tools while embedding governance controls that ensure compliance, quality, and security without creating barriers to innovation and experimentation.

- Adaptive workflow intelligence: Leverage AI-assisted development that learns from organizational patterns and best practices to suggest optimizations, identify potential issues, and accelerate workflow creation while maintaining consistency with enterprise standards.

- End-to-end business impact tracking: Monitor workflow contribution to business outcomes through comprehensive analytics that connect technical performance to revenue impact, cost reduction, and competitive advantage creation.

- Scalable enterprise integration: Connect workflows seamlessly with existing business systems and data platforms, enabling workflow insights to surface directly within operational processes where business decisions are made.

To avoid costly architectural missteps that delay data initiatives and overwhelm engineering teams, explore four common data engineering pitfalls and how to avoid them, and develop a scalable data strategy that bridges the gap between business needs and technical implementation.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.
