The Analytics Leader's Playbook for Building Data Observability That Scales

Discover proven data observability strategies that analytics leaders use to build organizational reliability and prevent costly data failures.

Data reliability has become the invisible foundation of every strategic business decision. When analytics leaders discover their quarterly forecasts are wrong because of a silent pipeline failure, or when customer insights become unreliable due to schema drift, the cost extends far beyond technical fixes.

These failures erode trust in data-driven decision making and force organizations back into gut-based strategies just when competitors are accelerating with AI and advanced analytics.

Even companies with sophisticated data platforms struggle with what feels like an endless cycle of reactive firefighting. Teams spend more time investigating data issues than delivering insights, while business stakeholders lose confidence in the very data assets that should be driving competitive advantage.

In this article, we explore how analytics leaders can build proactive data observability strategies that transform reactive monitoring into organizational reliability, creating the foundation for confident decision-making at scale.

What is data observability?

Data observability is the ability to understand, monitor, and manage the health of data throughout its entire lifecycle by providing comprehensive visibility into data quality, lineage, and system performance.

Unlike traditional monitoring that focuses on infrastructure health, data observability examines the data itself—tracking freshness, accuracy, completeness, and consistency as information flows through complex data pipelines and transformations.

This comprehensive approach enables organizations to detect, diagnose, and resolve data issues before they impact business decisions or downstream applications. Data observability platforms collect metadata, monitor data flows, and analyze patterns to provide insights into both current data health and potential future problems.

The evolution of data observability emerged as organizations recognized that traditional infrastructure monitoring was insufficient for modern data environments.

Early approaches focused on simple uptime monitoring, but the explosion of cloud data platforms, real-time analytics, and AI applications created new challenges that traditional monitoring couldn't address. Modern data observability represents a fundamental shift from reactive problem-solving to proactive quality management.

Data observability vs. data monitoring vs. data governance

Understanding the distinction between these three approaches is crucial for building effective data reliability strategies:

- Data observability: Provides comprehensive visibility into data health, quality, and lineage across the entire data ecosystem. Modern observability platforms combine automated monitoring with intelligent analysis to detect anomalies, track data lineage, and provide actionable insights for both technical and business teams.

- Data monitoring: Focuses on tracking specific metrics and alerting when predefined thresholds are breached. Traditional monitoring typically examines infrastructure performance, system uptime, and basic data volume metrics, but lacks the context and intelligence needed to understand data quality or business impact.

- Data governance: Establishes policies, procedures, and standards for data management across the organization. Modern data governance frameworks, such as a governance framework in Databricks, define roles, responsibilities, and compliance requirements but rely on observability and monitoring tools to ensure policies are actually being followed and to detect violations.

While these three approaches serve different purposes, they work together to create comprehensive data reliability. Governance establishes the framework for what good data looks like, monitoring provides the basic alerts when things go wrong, and observability delivers the intelligence needed to understand why problems occur and how to prevent them.

Benefits of effective data observability

Data observability delivers measurable value across technical, operational, and strategic dimensions of data management:

- Proactive issue detection: Advanced observability platforms identify data quality problems before they impact business operations or decision-making. By analyzing patterns in data freshness, volume, and distribution, these systems can detect anomalies that would otherwise go unnoticed until they cause downstream failures.

- Accelerated problem resolution: Comprehensive lineage tracking and impact analysis enable data teams to quickly identify the root cause of issues and understand their potential business impact. This dramatically reduces the time spent investigating problems and allows teams to focus on prevention rather than reactive firefighting.

- Improved data team productivity: Automated monitoring and intelligent alerting reduce the manual effort required to maintain data quality and reliability. Teams spend less time on routine quality checks and more time on strategic initiatives that drive business value, leveraging automation in data operations.

- Enhanced business confidence: Transparent visibility into data quality metrics and reliability trends, achieved through effective data quality monitoring, builds trust between data teams and business stakeholders. When business users understand the quality and freshness of their data, they can make more confident decisions and better understand the limitations of their analyses.

- Reduced operational costs: Preventing data quality issues is significantly less expensive than fixing them after they've impacted business operations. Early detection and resolution minimize the downstream costs of poor data quality, including incorrect business decisions, customer service issues, and regulatory compliance problems.

Organizations with mature observability practices can move faster on new initiatives because they have confidence in their data foundation, while those without observability capabilities remain trapped in reactive cycles that limit their ability to capitalize on data-driven opportunities.

The five pillars that make data observability work

Comprehensive data observability rests on five fundamental pillars that work together to provide complete visibility into data health and reliability:

- Freshness: Monitors how recently data has been updated and whether it arrives within expected timeframes. Freshness tracking is essential for time-sensitive business decisions and helps identify broken pipelines or delayed data sources before they impact downstream applications.

- Quality: Evaluates data accuracy, completeness, and consistency against defined business rules and expectations. Quality monitoring, often involving data auditing, goes beyond simple null checks to examine data distributions, detect outliers, and verify that data conforms to expected patterns and business logic.

- Volume: Tracks the amount of data flowing through pipelines and compares it to historical patterns and expectations. Volume monitoring helps detect missing data, duplicate records, and upstream system failures that might not be immediately obvious through other monitoring approaches.

- Schema: Monitors data structure changes, including new columns, modified data types, and altered table structures. Schema monitoring is crucial for preventing downstream application failures and ensuring that data transformations continue to work correctly as source systems evolve.

- Lineage: Tracks data movement and transformations across the entire data ecosystem, providing visibility into how data flows from source to destination. Lineage information is essential for impact analysis, debugging data quality issues, and understanding the business context of data assets, aligning with data mesh principles.

Modern observability platforms integrate these pillars into unified dashboards and alerting systems that provide actionable insights rather than overwhelming users with raw metrics.

Four reasons analytics leaders fail at implementing data observability

Even organizations with sophisticated observability platforms often struggle to achieve reliable data operations. These failures typically stem from organizational and strategic issues rather than technical limitations.

Tools deployed, processes unchanged

Many implementations fail because they focus exclusively on deploying monitoring technology without addressing the organizational changes needed to act on observability insights. Teams install comprehensive platforms but continue operating in reactive modes because they haven't established processes for proactive quality management.

This technical-only approach creates a scenario where teams have access to monitoring data but lack the governance framework to use it effectively. Different teams interpret alerts differently, leading to inconsistent responses and conflicting priorities when issues arise.

Successful implementations require clear escalation procedures, defined roles and responsibilities, and service-level agreements that connect observability metrics to business outcomes. Without these organizational elements, even the most sophisticated monitoring becomes just another dashboard that teams check after problems have already occurred.

The most effective approach treats observability as a capability that spans people, processes, and technology rather than a technical solution that can be deployed independently. This requires training programs, process documentation, and cultural changes that emphasize proactive quality management over reactive firefighting.

Organizations must also address the skills gap between traditional infrastructure monitoring and data-specific observability. Teams need to understand how to interpret data quality metrics, assess business impact, and coordinate responses across technical and business stakeholders.

Finally, choose a tool that integrates natively with your existing data stack rather than requiring extensive custom development. The most effective platforms connect directly to your data catalogs, inherit permissions from your data platform, and operate through metadata analysis rather than data sampling.

This approach minimizes operational overhead while ensuring observability insights reflect actual data flows in your environment.

Ownership gaps leave issues orphaned

Data observability reveals quality issues, but without clear ownership models, these insights don't translate into accountability or action. Many implementations fail because no one has clear responsibility for addressing the issues that monitoring identifies.

This ownership gap becomes particularly problematic in complex data environments where information flows through multiple teams and systems. When schema changes break downstream pipelines, who is responsible for the fix? When data quality in shared datasets degrades, which team has the authority to implement corrections?

When observability identifies issues but the responsible teams lack the capacity or authority to address them quickly, data quality problems accumulate in engineering backlogs. At the same time, business stakeholders lose confidence in the reliability of data.

Successful implementations establish domain-oriented ownership models where specific teams have clear responsibility for data quality within their areas of expertise. This approach leverages the knowledge of teams closest to the data while ensuring accountability for quality issues.

These ownership models must also include escalation paths for issues that span multiple domains or require coordination across teams. Clear protocols for cross-domain collaboration prevent quality issues from becoming organizational bottlenecks that delay resolution and impact business operations.

Overwhelming teams with alerts instead of actionable insights

Poorly configured observability platforms generate alert fatigue that undermines their effectiveness and trains teams to ignore monitoring notifications. Many implementations generate numerous alerts without providing the necessary context to prioritize responses or understand the business impact.

This alert overload often results from treating observability platforms like traditional infrastructure monitoring, where any deviation from normal triggers an alert. Data environments naturally exhibit more variability than infrastructure systems, necessitating more sophisticated alerting approaches that take into account business context and data patterns.

Teams become overwhelmed when alerts fail to distinguish between critical issues that require immediate attention and minor anomalies that can be addressed during regular maintenance windows. Without proper prioritization, teams either ignore most alerts or spend excessive time investigating low-impact issues.

Successful implementations focus on actionable insights rather than comprehensive alerting. This means configuring alerts based on business impact rather than technical metrics, providing context about affected downstream systems, and including recommended response actions.

Organizations must also establish alert triage processes that help teams quickly assess and prioritize responses. This includes standardized severity classifications, impact assessment templates, and escalation procedures that ensure critical issues receive appropriate attention.

Enterprise complexity crushing simple solutions

Many observability implementations fail because they underestimate the complexity of enterprise data environments and the effort required to achieve comprehensive monitoring. Organizations often start with simple use cases but struggle to scale observability across diverse data sources, transformation tools, and analytical applications.

This complexity manifests in multiple dimensions: technical integration challenges across different data platforms, varying data quality requirements across business domains, and the need for organizational coordination to implement consistent monitoring approaches across multiple teams.

The "seven different systems syndrome" that plagues many data environments creates particular challenges for observability implementation. Each platform may have different monitoring capabilities, metadata formats, and integration approaches, making unified observability significantly more complex than anticipated.

Successful implementations acknowledge this complexity and adopt phased approaches that gradually expand observability coverage rather than attempting comprehensive monitoring from the start. This allows organizations to learn from early implementations and refine their approaches before scaling across the entire data environment.

Organizations must also plan for the ongoing maintenance overhead required to keep observability systems up to date as data environments evolve. This includes updating monitoring configurations, maintaining lineage information, and adapting alerting rules as business requirements change.

How AI is transforming the future of data observability

Artificial intelligence is fundamentally reshaping data observability from reactive monitoring to predictive quality management. Modern AI-powered platforms can now detect subtle patterns in data behavior that would be impossible for human operators to identify, while automatically adapting to changing data patterns without requiring constant reconfiguration.

The integration of AI enables observability platforms to understand context in ways that traditional rule-based systems cannot. These systems learn normal data patterns across different business cycles, detect anomalies that consider seasonality and business context, and provide predictive insights that help teams address potential issues before they impact operations.

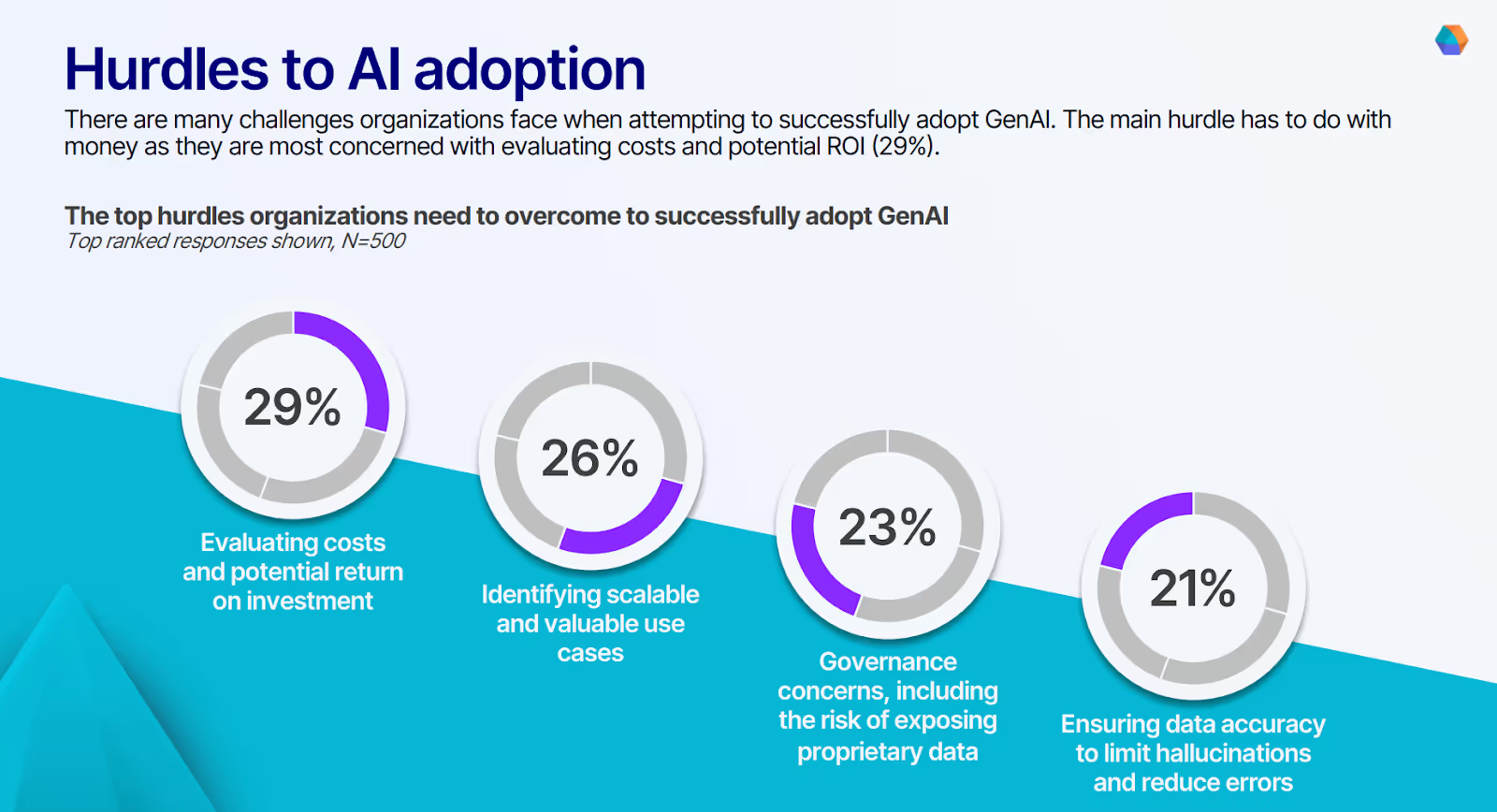

However, AI-powered observability also introduces new governance challenges that organizations must address carefully. Our survey reveals how organizations identify governance concerns, including the risk of exposing proprietary data, as a primary hurdle to GenAI adoption.

These concerns become particularly acute in observability platforms that require access to sensitive data to provide comprehensive monitoring.

The solution lies in implementing AI-powered observability within governed frameworks that maintain data privacy while delivering intelligent insights. Modern platforms achieve this through techniques like differential privacy, federated learning, and metadata-based analysis that provide comprehensive observability without exposing sensitive data elements.

As AI capabilities continue to advance, the future of data observability will likely include autonomous quality management systems that can not only detect and diagnose issues but also implement corrective actions automatically. This evolution will enable organizations to achieve levels of data reliability that would be impossible through manual processes alone.

Transform data operations with AI-powered, integrated observability

The difference between data-driven organizations that thrive and those that struggle often comes down to reliability. While reactive teams spend their time investigating yesterday's problems, proactive organizations build observability capabilities that prevent issues and accelerate innovation.

Here’s how Prophecy's data integration platform addresses data reliability challenges through integrated observability that works with your existing data stack:

- Native observability integration: Built-in monitoring and quality checks that work automatically as you build data pipelines, eliminating the need for separate observability tools that create additional complexity and maintenance overhead.

- Visual pipeline development with quality assurance: Create data workflows through intuitive interfaces while automatically embedding quality checks, lineage tracking, and monitoring capabilities that ensure reliability from development through production.

- Proactive anomaly detection: AI-powered monitoring that understands your data patterns and business context, providing intelligent alerts that focus on business impact rather than overwhelming teams with technical noise.

- Cross-team collaboration platform: Enable both technical and business teams to participate in data quality management through shared visibility into data health, lineage, and business impact.

- Governed self-service capabilities: Democratize data access while maintaining quality standards through embedded governance controls that prevent the "enabled with anarchy" trap.

To transform your data operations from reactive firefighting to proactive quality management, explore Self-Service Data Preparation Without the Risk and discover how integrated observability accelerates both reliability and innovation.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar

Analytics as a Team Sport: Why Data Is Everyone’s Job Now