How to Build a Data Team Structure That Scales Impact Instead of Headcount

Discover data team structure strategies that eliminate bottlenecks and scale capabilities without endless dependencies.

Most organizations approach data team structure backward—they focus on hiring specialists and building technical capabilities while inadvertently creating dependency bottlenecks that slow business decisions.

The result is a familiar pattern: business teams submit requests and wait weeks for responses, while data engineers become overwhelmed gatekeepers rather than enablers of insights. This structural dysfunction can’t be solved by adding more people or more tools—the way forward requires rethinking how data responsibilities are distributed across your organization.

In this article, we explore how to build data team structures that scale capabilities without creating endless dependencies.

What is a data team structure?

Data team structure is the organizational framework that defines how data responsibilities, decision-making authority, and technical capabilities are distributed across your organization. It encompasses who owns what aspects of data operations, how teams collaborate on data initiatives, and the governance mechanisms that ensure quality and compliance without creating bottlenecks.

There is not one single approach that is the right fit for every organization. But what is always true is that an effective data team structure goes beyond job titles and reporting relationships to address fundamental questions:

- Who can access and modify data?

- How are conflicting priorities resolved?

- What decisions can teams make independently versus requiring coordination?

The answers to these questions determine whether your data capabilities accelerate business outcomes or become sources of frustration and delay.

Modern data team structures must balance autonomy with accountability, enabling domain experts to work with data directly while maintaining the standards and governance that enterprise operations require.

Essential roles in a modern data team

While specific titles and responsibilities vary across organizations, successful data teams typically include these core capabilities and skills, which often fall into these job titles:

- Data engineers: Build and maintain the infrastructure, pipelines, and systems that move and transform data at scale. They often work extensively with ETL and data integration processes and are involved in ETL modernization efforts to improve data processing efficiency.

- Analytics engineers: Bridge the gap between data engineering and business analysis by transforming raw data into analysis-ready datasets, often specializing in tools like dbt for data modeling and transformation.

- Data scientists: Apply statistical and machine learning techniques to extract insights and build predictive models, requiring expertise in both analytical methods and business domain knowledge.

- Data analysts: Focus on interpreting data to answer business questions and support decision-making, typically working closest to business stakeholders and translating technical outputs into actionable recommendations. They may sit on a dedicated data team or on a line of business unit. Depending on where they sit, they also might have a title like “business analyst” or simply analyst. And on some teams, someone with a completely different title ends up becoming responsible for analyst work.

- Data product managers: Own the strategy and roadmap for data products and platforms, balancing technical feasibility with business value and user experience considerations.

- Data platform engineers: Specialize in building and maintaining the underlying infrastructure, cloud platforms, and tools that enable other team members to work effectively with data.

- Data governance specialists: Ensure compliance, quality, and security standards across data operations while enabling appropriate access and usage for business teams. These responsibilities are often owned by someone with a broader title on a data platform team, but some organizations find value in hiring someone for this specific role.

Centralized vs decentralized vs federated data team structure

Organizations typically choose between three primary structural approaches, each with distinct advantages and limitations that affect how quickly teams can access data and implement solutions:

- Centralized structure: All data professionals report through a single organization with standardized processes, tools, and governance, creating consistency but potentially slower response to diverse business needs.

- Decentralized structure: Data professionals are embedded within business units, enabling faster response and domain expertise but potentially creating inconsistencies and duplicated efforts across teams.

- Federated structure: Combines central platform capabilities with distributed domain ownership, allowing business units to operate independently while leveraging shared infrastructure and standards. Federated structures align with data mesh principles, combining central platform capabilities with distributed domain ownership.

The choice between these structures often reflects organizational maturity and scale. Early-stage companies often begin with decentralized approaches that facilitate rapid experimentation, whereas large enterprises tend to adopt centralized models that ensure consistency.

However, both extremes create problems at scale—centralization becomes a bottleneck, while decentralization leads to fragmentation and duplicated effort.

Six steps to build a data team structure that eliminates bottlenecks

Creating an effective data team structure requires moving beyond traditional organizational models and addressing common data team dysfunctions toward approaches that enable business teams while maintaining appropriate governance.

Each structural decision should reduce dependencies rather than create new ones.

Break the endless request-response cycle that stifles agility

Business executives consistently report the same frustration: their teams submit data requests and wait weeks for responses while competitors make faster decisions with similar information. This request-response dynamic creates the "blocked and backlogged" scenario, where data teams become unwilling bottlenecks rather than business enablers.

The core problem is structural dependency. When every analytical question requires a formal request to a separate team, you've designed a delay into your decision-making process. Smart executives recognize that this structure fails because it treats data work as a specialized service rather than treating it as Data as a Product and a core business capability.

Transform this dynamic by eliminating the request queue entirely. Instead of building teams that fulfill requests, create environments where business teams can answer their own questions using governed data and intuitive self-service analytics tools.

This means giving marketing analysts direct access to customer data, enabling finance teams to build their own forecasting models, and empowering operations managers to create real-time performance dashboards.

Start by identifying your most common request types and building self-service alternatives for each. Replace custom report requests with configurable dashboard templates. Substitute ad hoc analysis requests with guided exploration tools.

When business teams can get answers immediately instead of submitting tickets, your data specialists can focus on building capabilities rather than fulfilling repetitive requests.

Embed governance into workflows, not as gates

Most data leaders unknowingly create the exact problem they're trying to solve: ungoverned data usage. Traditional governance approaches position data teams as gatekeepers who approve access and validate usage, creating delays that business teams circumvent through spreadsheets and personal databases.

This gatekeeping model inevitably leads to a scenario where business leaders lament how everyone has different data and nobody knows the source of truth.

The fundamental flaw in gatekeeping governance is that it separates control from usage. When governance happens outside the tools that business teams use daily, compliance becomes an obstacle to productivity rather than a foundation for reliable insights. Smart organizations recognize that effective governance must be invisible to users while being comprehensive in coverage.

Redesign your governance approach by embedding controls directly into business workflows. When data catalogs automatically enforce access policies, transformation tools include built-in quality validation, and reporting platforms maintain audit trails, governance becomes helpful infrastructure rather than bureaucratic overhead.

This approach enables self-service analytics compliance, making governance a helpful infrastructure rather than a bureaucratic overhead.

Implementing data quality metrics within self-service tools helps ensure governance is embedded into workflows. Visual tools that provide data lineage solutions can enhance governance by offering transparency into data flows.

Focus your governance specialists on building systems rather than processes. Instead of reviewing individual requests for data access, create automated systems that grant appropriate access based on roles and responsibilities.

Rather than manually validating data quality, implement automated checks that prevent poor data from entering business workflows. This systematic approach scales governance capabilities without scaling gatekeeping delays.

Dismantle the skills hoarding bottleneck that limits organizational learning

Many data teams inadvertently hoard expertise by concentrating specialized skills in small groups while leaving business teams dependent on their availability. This concentration creates single points of failure where business initiatives stall waiting for specific individuals, and knowledge doesn't transfer effectively across the organization.

The traditional approach of hiring specialized experts and protecting their time through access controls sounds logical, but it creates structural dependencies that limit organizational agility.

When only three people understand your customer data model, those three people become bottlenecks for any customer-related analysis. When data scientists jealously guard their model development processes, business teams can't adapt quickly to changing requirements.

Address this challenge by designing knowledge transfer into your team structure rather than treating it as an optional activity. Create hybrid roles that bridge business and technical domains, like analytics engineers who can translate between business requirements and technical implementation.

Establish mentoring relationships where specialists teach business team members to handle routine tasks independently and enhance data literacy.

Build documentation and training programs that democratize technical knowledge without requiring deep specialization. Business analysts should understand enough about data quality to spot problems early.

Marketing teams should know enough about statistical significance to interpret A/B testing results correctly. This distributed expertise reduces dependencies while improving decision quality across the organization.

Eliminate the "seven different systems" complexity trap

Organizations often create what industry experts call the "seven different systems" syndrome—where teams manage multiple disconnected tools, each with its own interfaces, security models, and maintenance requirements.

This fragmentation forces data teams to spend more time managing system integrations than delivering business value, while business teams struggle to understand which tool answers which questions.

The complexity multiplies exponentially as organizations add specialized tools for different use cases. Marketing teams use one analytics platform, finance teams use another, and operations teams use a third. Each system requires separate training, maintenance, and integration work, creating coordination overhead that slows every initiative.

Consolidate your data ecosystem around unified platforms that provide consistent experiences across different use cases rather than specialized tools for each department. When marketing, finance, and operations teams use the same underlying data platform with customized interfaces, you eliminate integration overhead while maintaining functional specialization.

Design your technology architecture to minimize context switching and maximize skill transferability. Teams should learn one set of core capabilities that apply across multiple use cases rather than maintaining expertise in dozens of disconnected tools.

This consolidation reduces training overhead, improves collaboration, and enables teams to adapt quickly when business priorities shift.

Build decision-making velocity into your organizational design

Most data team structures optimize for technical efficiency rather than decision-making speed, creating delays that compound throughout business processes. When market conditions change rapidly, the organizations that respond fastest gain competitive advantages that slower competitors struggle to recover.

Traditional structures require multiple approvals, handoffs, and reviews that add days or weeks to analytical workflows. By the time insights reach decision-makers, market conditions may have changed, making the analysis less relevant. This structural lag creates a permanent disadvantage in dynamic markets where first-mover advantages determine success.

Restructure decision rights to minimize approval layers and handoff points in your analytical workflows. Empower domain teams to make routine decisions independently while escalating only exceptional cases that require specialized expertise or cross-functional coordination.

This means trusting marketing teams to interpret customer behavior data, enabling sales teams to adjust pricing strategies based on pipeline analysis, and allowing operations teams to modify processes based on performance metrics.

Create clear escalation paths and decision criteria that help teams identify when independent action is appropriate versus when coordination is required. This framework provides guidance without creating bureaucratic delays, enabling teams to move quickly while maintaining appropriate oversight for significant decisions.

Design multiplication effects, not just addition

The most successful data team structures create multiplication effects where each new capability enables multiple business outcomes rather than just solving individual problems. This approach allows organizations to scale data impact without proportional increases in specialized headcount, creating sustainable competitive advantages.

Traditional scaling approaches add specialized resources for each new requirement: hire data scientists for machine learning projects, add data engineers for integration work, and recruit analysts for business intelligence needs. This additive approach quickly becomes expensive and creates coordination overhead that limits agility.

Instead, design your structure around capabilities that multiply across use cases. Self-service analytics platforms enable multiple business teams to create their own insights. Automated data pipelines support numerous analytical workflows without requiring custom development.

By building data products, reusable analytical components accelerate project delivery across different domains while maintaining consistency and quality.

Focus your specialized resources on building these multiplicative capabilities rather than delivering individual solutions. Data engineers should create platforms that enable others rather than building custom integrations for each request.

Data scientists should develop frameworks and tools that business analysts can apply rather than building models for specific use cases. This shift from solution delivery to capability building creates exponential returns on your data investments.

How GenAI is transforming data team economics

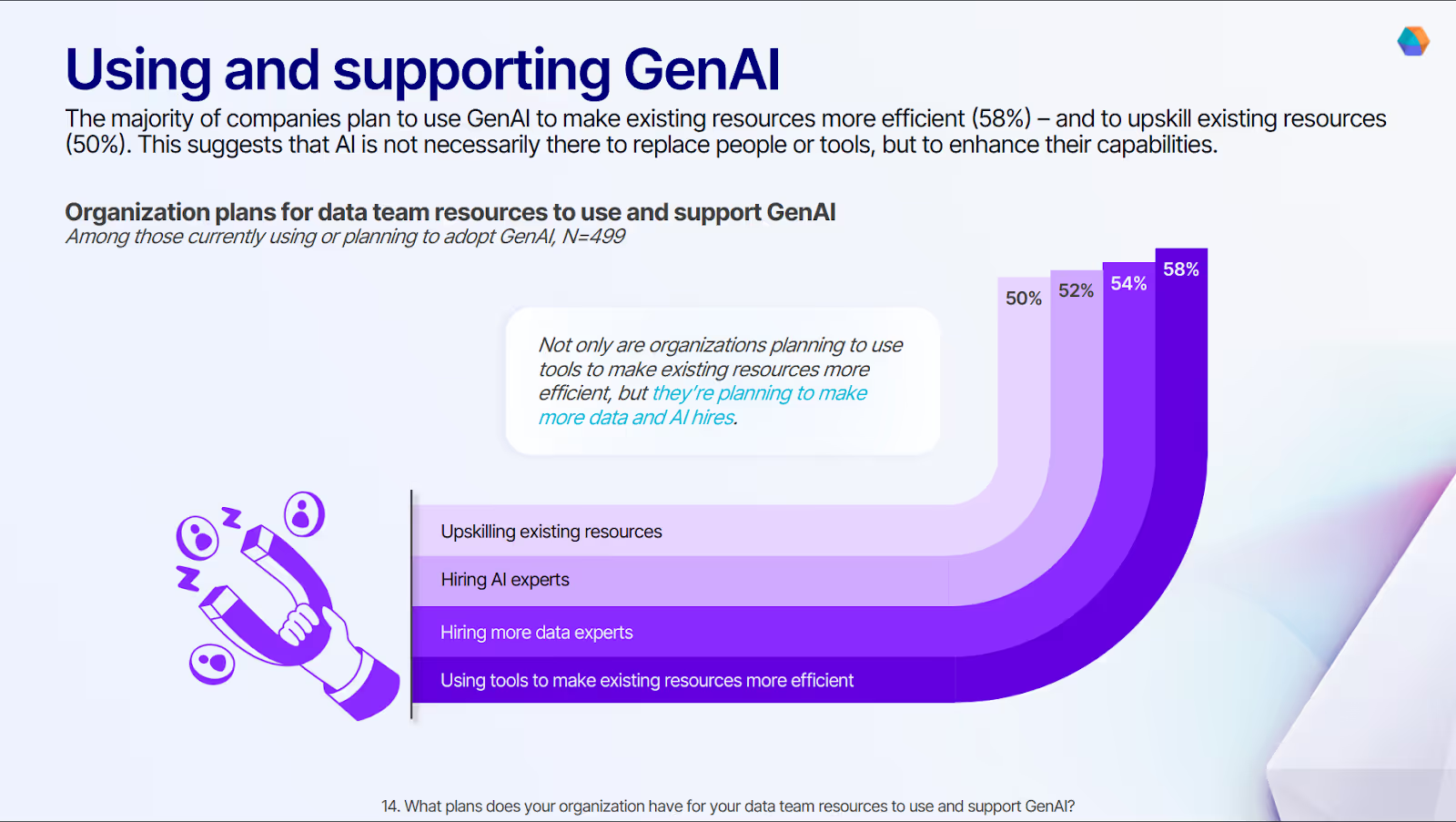

The emergence of GenAI is reshaping how organizations think about data team capabilities and resource allocation. As GenAI reshapes data teams, organizations plan to use these GenAI tools to enhance the efficiency of existing resources, while also considering the need to hire additional data experts.

This dual approach—enhancing current capabilities while expanding teams—reflects a fundamental recognition that AI amplifies human potential rather than replacing it entirely.

However, organizations that focus solely on AI adoption without addressing structural bottlenecks miss the bigger opportunity. AI can accelerate data processing and automate routine tasks, but it can't fix organizational designs that create dependencies and delays.

When business teams still need to submit requests and wait for responses, AI simply makes those responses faster—it doesn't eliminate the underlying friction that slows decision-making.

The most effective approach combines AI capabilities with structural reforms that enable direct collaboration between business and technical teams. AI-powered self-service tools can democratize analytical capabilities, but only when embedded within organizational structures that support autonomous decision-making.

Similarly, AI can automate governance and quality controls, but only when those controls are designed into workflows rather than imposed as separate approval processes.

Smart data leaders recognize that AI's greatest impact comes not from making existing processes faster, but from enabling entirely new ways of organizing work that weren't previously feasible. This requires rethinking team structures alongside technology adoption.

Relieve the dependency burden strangling your data team initiatives

Creating an effective data team structure involves designing workflows that eliminate dependencies while maintaining the quality and governance required by enterprise operations. The most successful organizations recognize that structure serves strategy, not the reverse, and continuously adapt their approaches as business needs and technical capabilities evolve.

Here's how Prophecy transforms traditional data team bottlenecks into accelerators for business outcomes:

- Visual collaboration environments that enable business analysts and data engineers to work together on the same pipelines, eliminating the translation errors and delays that plague traditional handoff processes.

- Governed self-service capabilities that provide business teams with direct access to data transformation tools while automatically enforcing quality standards, security policies, and compliance requirements without creating approval bottlenecks.

- Reusable component libraries that turn common data tasks into drag-and-drop operations, enabling domain teams to build sophisticated analytics without deep technical expertise while maintaining consistency across the organization.

- Automated quality controls that catch issues before they affect downstream users, reducing the coordination overhead typically required to maintain data reliability across distributed teams.

- Native cloud platform integration that leverages existing infrastructure investments while adding the collaboration layer needed to support distributed accountability models.

- End-to-end lineage tracking that provides transparency into how data flows through your organization, enabling both governance and troubleshooting without requiring central coordination for routine operations.

To transform your data team structure from a bottleneck into a competitive advantage, explore Self-Service Data Preparation Without the Risk and discover how governed self-service enables distributed accountability without sacrificing enterprise standards.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar

Analytics as a Team Sport: Why Data Is Everyone’s Job Now