Why Data Products Fail and How to Deliver Business Value Beyond Dashboards

Discover how effective data products make complex information accessible to business users while maintaining governance and quality, bridging the gap between raw data and actionable insights.

Despite several investments in data infrastructure, enterprises are drowning in information while starving for insights. This paradox leaves business leaders frustrated as they watch competitors leverage the same technologies to drive innovation while their own teams struggle with dashboards that answer yesterday's questions and reports that require technical translation.

Enter data products—the bridge connecting technical data capabilities with practical business solutions. These purpose-built information packages leverage data catalogs, AI, and modern integration tools to deliver specific value to business users without requiring technical expertise to interpret or use them.

What are data products?

Data products are packaged, purpose-built solutions that transform raw data into consumable, valuable assets that solve specific business problems. Unlike traditional reports or dashboards, data products combine data, analysis logic, and interactive interfaces to deliver actionable insights directly to business users.

Zhamak Dehghani, who pioneered the data mesh concept, established key principles that define successful data products. These principles have become foundational for organizations building strategic data assets.

According to Dehghani, effective data products must be:

- Discoverable through clear metadata about ownership and quality metrics

- Addressable via unique identifiers

- Understandable with a well-documented structure and semantics

- Trustworthy with verified accuracy and quality

- Accessible through appropriately governed interfaces

These principles, along with practices like data auditing, transform raw data from a technical asset into a product that delivers specific business value.

The concept represents an evolution in thinking, shifting from treating data as a technical byproduct to designing it as a product with defined users, use cases, and value propositions. This product-centric approach emphasizes usability, quality, and business impact rather than just data availability or completeness.

At their core, data products embody the democratization of data. They make complex information accessible and useful to non-technical stakeholders who need insights to make decisions but lack the technical skills to extract them from raw data sources by leveraging self-service analytics tools.

The data product lifecycle encompasses everything from initial discovery of business needs through development, deployment, and ongoing evolution. Each stage focuses on maximizing business value while ensuring technical quality and governance compliance.

Data product vs data-as-a-product

Though often confused, "data products" and "data-as-a-product" represent distinct but complementary approaches. Data products are solutions built with data to solve specific business problems—they combine data with analysis logic and interfaces to deliver complete answers to defined questions.

Data-as-a-product, meanwhile, treats datasets themselves as products with defined interfaces, quality standards, and ownership. This approach focuses on making consistent, reliable data available for consumption by various downstream applications and users. The emphasis lies on the quality, documentation, and accessibility of the data itself rather than a specific business solution.

The distinction matters because effective organizations typically need both approaches. Data-as-a-product creates a foundation of trusted data assets with clear ownership and quality standards, while data products build upon this foundation to deliver specific business value through purpose-built solutions.

Characteristics and architecture of data products

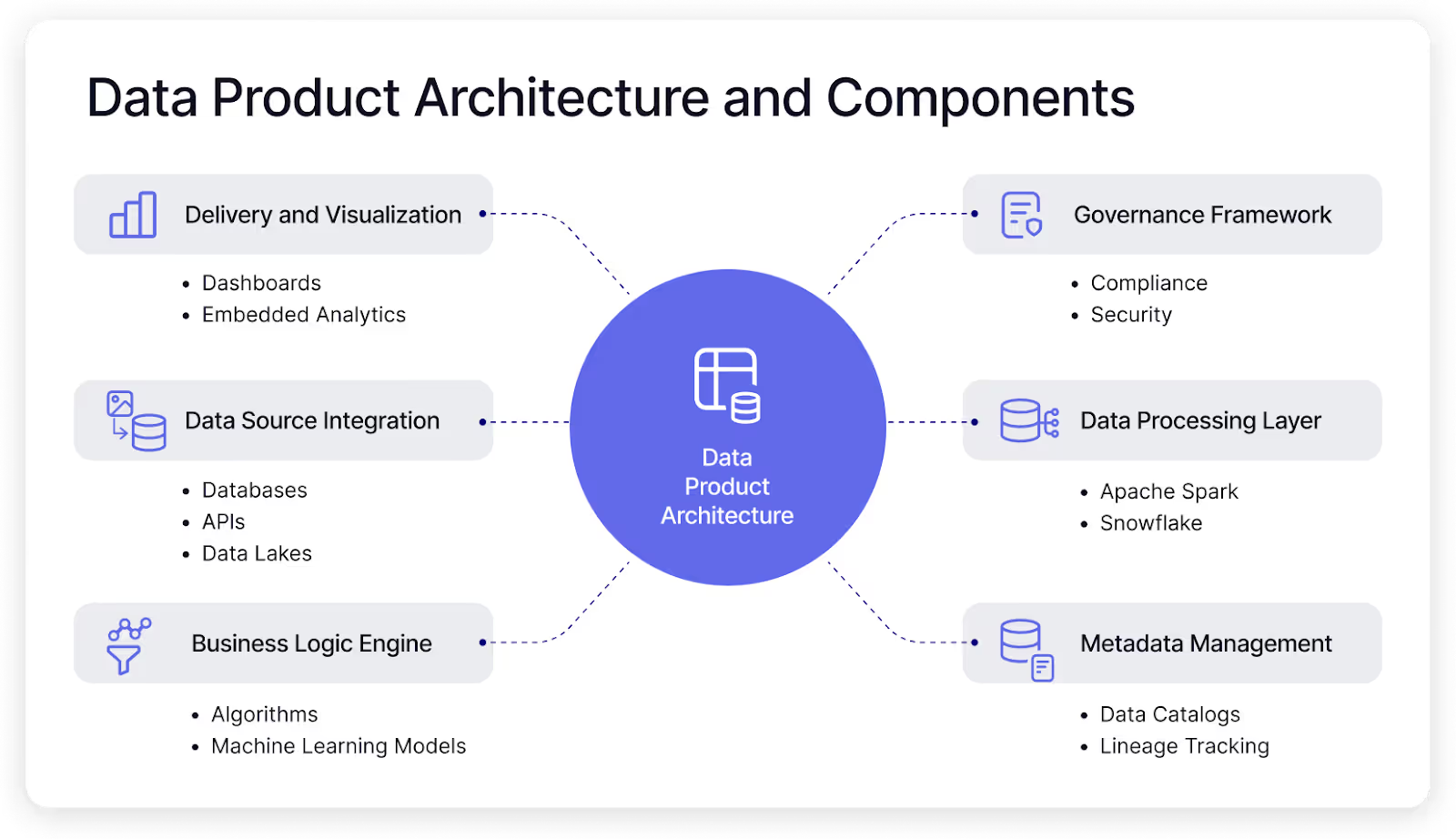

Effective data products combine multiple architectural components working seamlessly together to deliver business value:

To build robust data products that truly serve business needs, organizations must master these interconnected elements. Each component addresses a specific challenge in transforming raw data into accessible intelligence, and a weakness in any area can compromise the entire solution:

- Data source integration: Connectors that pull information from various origins, including databases, applications, APIs, and unstructured sources, often stored in data lakes or data warehouses. Modern architectures support both batch and real-time data acquisition, often utilizing a data integration platform to streamline this process.

- Data processing layer: Components that transform, combine, and prepare raw data for analysis. Organizations often debate between technologies like Apache Spark vs Snowflake when designing the data processing layer—components that handle cleaning, normalization, enrichment, and business rule application without exposing complexity to end users.

- Business logic engine: The intelligence that turns processed data into actionable information. This may include algorithms, machine learning models, rules engines, and calculation frameworks aligned with business objectives.

- Metadata management: Systems that maintain comprehensive information about data definitions, lineage, quality metrics, and usage patterns. Data catalogs serve as the central repository for this critical context.

- Delivery and visualization: Interfaces through which users consume insights, including dashboards, APIs, embedded analytics, and automated notifications. These components emphasize intuitive access over technical complexity.

- Governance framework: Controls that ensure compliance, security, and quality throughout the product lifecycle. This component balances accessibility with appropriate protections for sensitive information.

Examples and business benefits of data products

Successful data products appear across virtually every industry, transforming how organizations leverage information to create business value. These examples illustrate how data products solve real problems by making the right information available to the right people at the right time:

- Customer analytics unification: Transform fragmented customer information into actionable profiles that drive marketing and sales success. For example, healthcare providers use data products to optimize patient care through predictive analytics, while retailers create unified views of customer behavior across all touchpoints.

- Real-time operational intelligence: Deliver immediate visibility into business processes to identify inefficiencies before they impact the bottom line. Supply chain data products track materials, forecast demand, and identify potential disruptions, resulting in cost reduction and improved resource utilization.

- Precision in financial forecasting: Financial institutions deploy data products to analyze transactions and detect fraud in real-time. Advanced financial data products incorporate predictive models that forecast revenues and expenses based on historical patterns, enabling truly data-driven management across organizations.

- Context-aware recommendation systems: Create AI-powered recommendation engines that analyze user behavior to suggest relevant products or actions. Modern recommendation data products combine multiple data sources to deliver personalized suggestions that feel remarkably relevant to individual users without requiring technical expertise.

Four reasons why data products fail

The data landscape has fundamentally transformed, while many organizations' approaches to data products remain stuck in the past. Today's businesses demand insights at unprecedented speed, yet their data transformation processes often crawl along at yesterday's pace.

Technical bottlenecks create insurmountable request backlogs

Data engineers find themselves increasingly overloaded with requests they can't possibly fulfill in time. This blocked and backlogged scenario creates a fundamental disconnect where business teams submit requirements to data platform teams with year-long queues, creating frustration on both sides.

When every data preparation task requires specialized coding skills and deep technical knowledge, inevitable bottlenecks emerge. Business analysts wait weeks for critical information while opportunities slip away, and by the time solutions arrive, business needs have often evolved, creating a perpetual cycle of playing catch-up.

Consequently, this backlog problem also compounds over time as data volumes grow exponentially while data engineering teams rarely scale at the same rate. Organizations caught in this cycle find themselves unable to capitalize on emerging opportunities because their data infrastructure can't keep pace with business demands.

The growing disconnect between what the business needs and what technical teams can deliver creates friction that prevents innovation. Business teams feel unsupported while technical teams feel overwhelmed, creating a negative dynamic that further entrenches organizational silos.

Siloed expertise prevents effective collaboration

Traditional development approaches create artificial boundaries between those who understand the data technically and those who understand its business context. This disconnect leads to an "enabled with anarchy" scenario, where business users have access to data but lack proper governance.

In this scenario, data engineers set up sophisticated environments with governance and catalogs, but then data gets copied over to everyone's desktop. Line-of-business managers often find themselves unable to determine the source of truth as team members create their own versions of critical datasets.

This communication gap further leads to extensive back-and-forth iterations that delay development and introduce errors through misinterpretation. The problem intensifies when organizations maintain separate tools and workflows for business and technical teams, preventing true collaboration.

Business users resort to creating ungoverned data workflows using tools like spreadsheets, while technical teams build sophisticated solutions that don't fully address business needs. This divide prevents organizations from achieving the critical combination of business relevance and technical excellence required for truly impactful data products.

Governance becomes a barrier instead of an enabler

In traditional approaches, governance controls often appear as roadblocks that slow down development rather than enablers of sustainable value. Security, compliance, and quality measures are applied after solutions are built, frequently requiring substantial rework and creating tension between governance and development teams.

Organizations struggle with fragmented security, reliability issues, and governance when managing multiple disjointed systems. The inability to implement a unified approach forces teams to juggle seven different systems instead of focusing on delivering business value.

This retrofitting approach inevitably leads to compromises that either weaken governance protections or diminish solution effectiveness. Organizations struggle to maintain consistent data definitions and lineage across different projects, creating confusion and undermining trust in the resulting insights.

As regulatory requirements intensify globally, this governance gap poses increasing business risks beyond just analytical shortcomings. Without governance embedded directly into development workflows, organizations face impossible choices between speed and compliance, preventing them from achieving either objective effectively.

Legacy tools transform from assets to anchors

The tools and methodologies that served organizations in the past simply cannot handle today's data challenges. Organizations continue to struggle with fragmented data environments that create inconsistent results. The disconnect between data silos means valuable insights remain trapped, preventing teams from delivering the high-quality data needed for effective AI and analytics.

Legacy systems designed for smaller data volumes struggle with today's massive and diverse data streams, creating performance bottlenecks and reliability issues. Tools that require extensive manual coding cannot adapt quickly enough to changing business requirements, creating a constant maintenance burden that prevents innovation.

The way organizations think about data access needs a fundamental transformation. Moving away from rigid data warehousing toward flexible data playlisting enables organizations to create data access on demand by focusing on accessibility and context rather than location.

As AI capabilities raise expectations for what's possible, the gap between legacy capabilities and modern requirements continues to widen, making fundamental transformation increasingly necessary. Organizations that cling to outdated approaches find themselves falling further behind as competitors adopt more flexible, responsive data strategies.

How to build powerful and fully governed data products

Creating successful data products requires more than just the right technology—it demands a fundamental rethinking of how teams collaborate and how data flows through your organization.

Bridge the business-technical divide

The most common reason data products fail is the disconnect between those who understand the business context and those who implement the technical solution.

Start with business challenges rather than available data. Ask yourself: What decisions are being made? What information would improve those decisions? How would better insights change actions and outcomes? This approach ensures you're solving real problems instead of just using data because it exists.

To implement this approach effectively, bring domain experts directly into the development process. When business users work alongside technical teams, you eliminate translation errors that create endless back-and-forth iterations. This direct connection dramatically reduces misinterpretation and rework while building mutual understanding between teams.

As part of this collaborative process, conduct user research by observing how potential users currently make decisions. This approach uncovers needs that stakeholders often can't articulate directly, leading to products that solve real problems rather than hypothetical ones. These insights then form the foundation for your product requirements.

When defining those requirements, focus on outcomes rather than features. Define the questions your product must answer and the actions it should enable, not specific visualizations or calculations. This outcome-based approach gives technical teams flexibility while keeping business value in focus, creating a clear path from problem to solution.

Create flexible data access, not rigid warehouses

While bridging the business-technical divide addresses how teams work together, you also need to rethink your approach to data itself. Traditional data management focuses on centralization—pulling everything into monolithic warehouses or lakes. Modern data products take a different approach that's faster and more adaptable.

Begin by implementing modern catalog capabilities that document your available datasets, their quality, lineage, and usage patterns. This systematic approach accelerates development compared to relying on tribal knowledge about what data exists where. It also creates a foundation for self-service discovery that empowers business users.

With your data properly cataloged, the next step is transforming raw data into analytics-ready assets through cleaning, standardization, and enrichment. The most effective approaches leverage cloud-native ELT patterns that provide flexibility to transform structured and unstructured data in multiple ways without duplicating extraction efforts. This flexibility is essential for supporting diverse business needs from a common data foundation.

To make these capabilities accessible to business teams, deploy visual interfaces that allow analysts to create sophisticated data transformations without writing code. Combined with AI assistants that suggest transformations and identify quality issues, you dramatically reduce bottlenecks while maintaining quality standards. This democratization puts data product creation and the ability to build data pipelines in the hands of those who understand the business context best.

Throughout this process, remember that the goal isn't to move all data to one location but to create a flexible access layer that connects to information where it lives. This approach delivers faster time-to-insight without requiring massive migration projects, allowing you to adapt quickly as business needs evolve.

Unify visual and code environments

With business teams and technical experts collaborating, and flexible data access in place, you next need to address the tools they use. The traditional divide between visual tools for business users and code-based approaches for engineers creates artificial barriers to collaboration. Your data products need environments where both can work together.

Start by implementing bidirectional synchronization between visual interfaces and code. This ensures that visual changes automatically update the underlying code, while code modifications appear instantly in the visual interface. This two-way connection eliminates the silos that typically prevent effective collaboration, creating a unified canvas where all team members can contribute.

Using this unified environment, build data products through iterative development rather than extensive upfront design. Create minimum viable versions quickly, gather input from actual users, and refine based on real usage patterns. This cycle reduces risk and ensures your final product truly meets user needs, while allowing both technical and business teams to contribute throughout the process.

As your product takes shape, extend your testing beyond basic functionality to include data quality validation, performance under various loads, security controls, and usability by the intended audience. These comprehensive checks prevent issues that would undermine user trust and adoption, addressing the most common reasons data products fail after deployment.

Finally, bridge your development and production environments through automated processes that ensure consistency and reliability. Modern CI/CD pipelines reduce errors and accelerate updates, ensuring self-service developments can be productionized without sacrificing quality. This automation creates a smooth path from initial concept to deployed product, maintaining governance throughout.

Build a single control plane for governance, not several

With your unified development environment established, the final key step is ensuring long-term success through proper governance and management. Data products require ongoing monitoring, maintenance, and evolution to continue delivering value. This requires a unified approach rather than disconnected monitoring systems.

Begin by implementing usage monitoring that provides feedback about how your product actually supports decision-making. Track which features users access most frequently and whether usage patterns align with intended use cases to guide future enhancements. This user-centric approach ensures your product continues to solve real business problems over time.

As usage grows, maintain continuous attention on data quality as source systems evolve and business definitions change. Deploy automated monitoring to detect anomalies, completeness issues, and potential quality degradation before they impact user trust. This proactive quality management prevents the erosion of confidence that often occurs after initial adoption.

To ensure consistent performance as your product scales, establish baseline metrics for response times, resource utilization, and throughput. Constantly monitor for deviations that could indicate emerging problems, allowing you to address issues before they affect business operations. This performance management maintains user satisfaction even as demands increase.

Make continuous improvement easier by embedding feedback mechanisms directly in your product, creating easy channels for users to report issues, suggest improvements, or request new capabilities. This direct connection provides invaluable insights about evolving needs and opportunities to increase business value.

Bring all these governance capabilities together in a single unified control plane that provides consistent security and observability across your entire data ecosystem. This approach eliminates the need to jump between systems and allows your team to focus on creating value rather than managing infrastructure, ensuring your data products remain relevant and trusted as business needs evolve.

The future of data products in the AI era

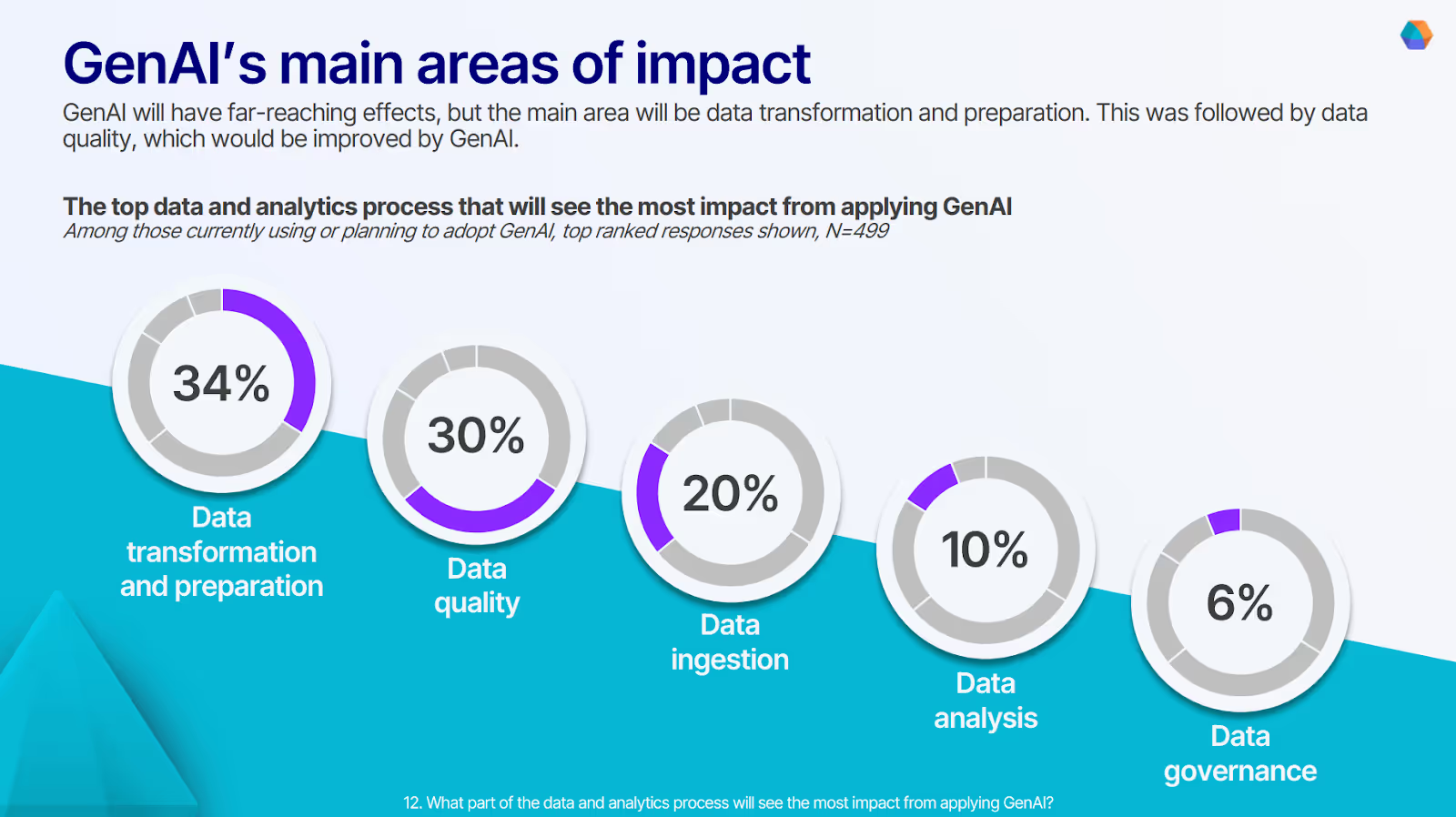

The rapid evolution of artificial intelligence is transforming how organizations create and leverage data products. This technology shift promises to dramatically enhance both the development process and the capabilities of the resulting solutions.

Generative AI represents perhaps the most significant advancement, enabling non-technical users to create data products through natural language interactions.

With GenAI, rather than learning complex tools, business users can simply describe the insights they need, and AI assistants translate these requests into functional data products. This capability dramatically expands who can participate in the data product creation process.

AI-powered data preparation automatically handles traditionally time-consuming tasks like cleaning, standardizing, and enriching raw data. These capabilities detect patterns, suggest transformations, and identify quality issues far faster than human analysis alone, accelerating the development cycle while improving data reliability.

Embedded intelligence within data products transforms passive information delivery into proactive insight generation. Modern solutions don't just present data—they automatically identify significant patterns, anomalies, and trends, then surface these insights to users without requiring them to hunt for meaningful signals amid the noise.

Organizations that embrace these AI capabilities gain substantial competitive advantages through faster development cycles, more sophisticated analytics, and wider access to insights. As these technologies mature, the gap between AI-powered and traditional approaches will only widen, making AI adoption increasingly critical for data-driven organizations.

Accelerate your data products creation with Prophecy

Creating successful data products requires escaping the false choice between the "blocked and backlogged" scenario, where analysts wait for overloaded data platform teams, and the "enabled with anarchy" scenario, where self-service happens without governance.

When you think about why there's a lack of enterprise readiness, it comes back to strategy. The organizations that succeed embed governance into the self-service experience rather than treating it as an afterthought or a barrier.

Here's how Prophecy addresses this paradox through its governed self-service data preparation platform:

- Visual development environment that empowers business analysts to prepare data without coding while automatically generating high-quality, standardized code behind the scenes

- AI-assisted data preparation that accelerates development through intelligent recommendations while ensuring transformations follow best practices and organizational standards

- Built-in governance controls that enforce access policies, data quality rules, and compliance requirements without creating friction for business users

- Version control integration that maintains the complete lineage of all changes while enabling collaboration between business and technical teams

- Reusable components that standardize common transformations and enforce best practices while accelerating development

- Native cloud platform integration that leverages the scalability and performance of modern data platforms while maintaining consistent security and access controls

- Comprehensive observability that monitors data quality, usage patterns, and performance to ensure ongoing trust and reliability

To learn more about implementing governed self-service in your organization, explore Self-Service Data Preparation Without the Risk to empower both technical and business teams with self-service analytics strategies.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.