A Guide to Effective Data Quality Checks and Testing for Rapidly Scaling Businesses

Discover essential data quality checks and testing methods that scale with your business. Learn how automated checks catch costly errors before they impact critical decisions.

Data quality issues cost organizations millions annually, not just in direct expenses but in flawed strategic decisions that ripple through operations. A Gartner study found that poor data quality costs businesses an average of $15 million yearly, yet many continue relying on compromised data for critical decisions. As data volumes grow exponentially, the challenge of maintaining quality becomes increasingly complex.

While most organizations acknowledge data quality's importance, many postpone implementing systematic quality checks until problems become obvious—when reports don't match, analytics produce contradictory insights, or AI initiatives fail to deliver value.

Data quality testing plays a pivotal role in validating and verifying data to meet standards of accuracy, completeness, consistency, and reliability. Without robust data quality checks, businesses risk making decisions based on flawed or incomplete information, leading to significant negative consequences.

This article explores data quality testing and essential check methods that safeguard decision-making without sacrificing the agility modern businesses require.

What is data quality testing?

Data quality testing is the systematic process of verifying whether your data meets the standards required for business operations and decision-making. At its core, it's about answering a fundamental question: "Can we trust this data to make critical business decisions?"

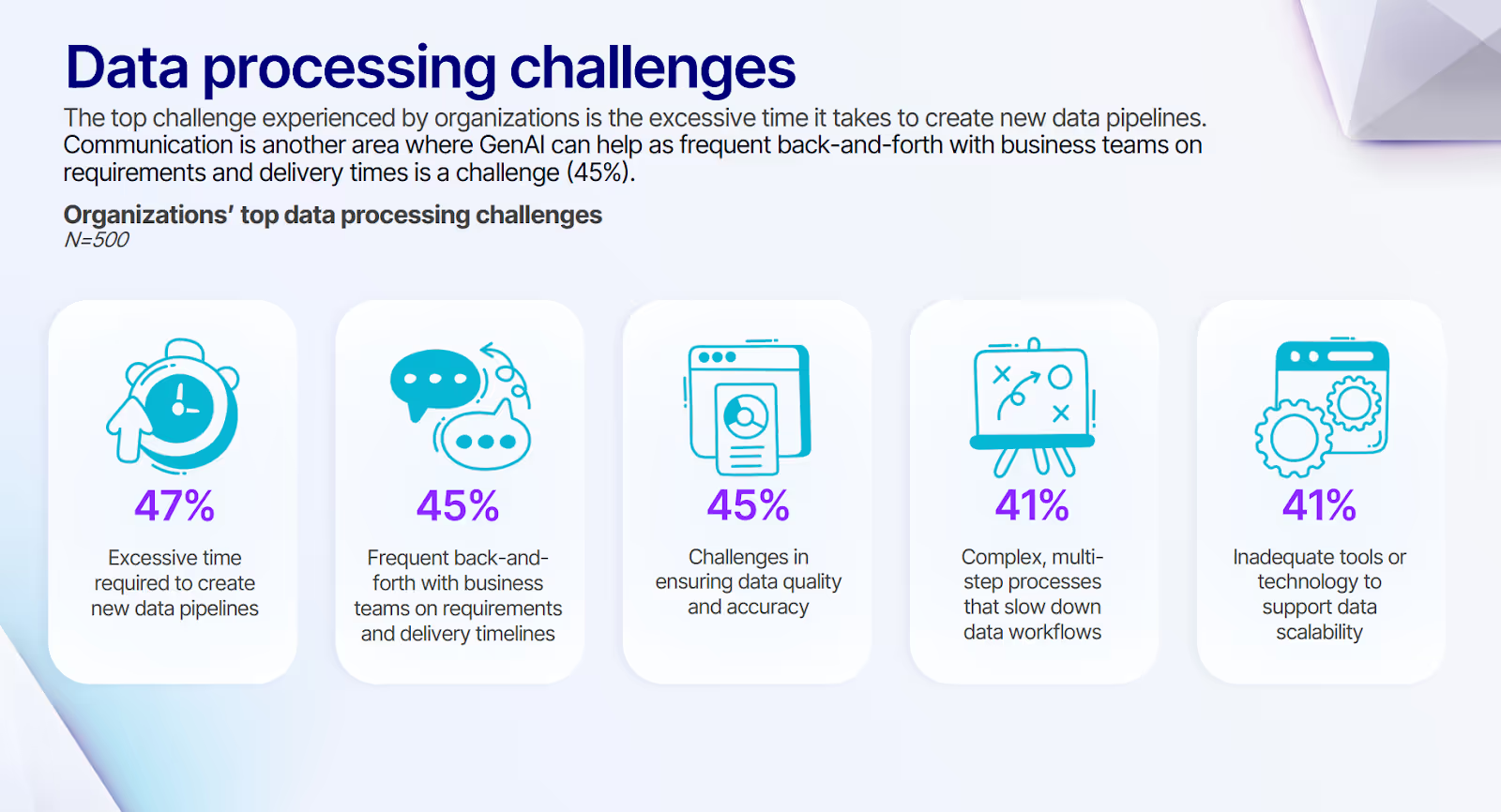

This challenge is widespread and growing—according to our recent survey, 45% of organizations report significant challenges in ensuring data quality and accuracy, making it increasingly difficult to extract reliable insights from their expanding data assets.

Without systematic approaches to data quality, this problem only compounds as data volumes increase. Unlike software testing, which focuses on functionality, data quality testing examines the actual content flowing through your systems.

Poor quality data directly impacts business outcomes in tangible ways. When marketing and sales teams operate from different customer datasets, campaign targeting becomes ineffective. When product inventory counts are inaccurate, stock-outs or overstocking occur. When financial data contains errors, forecasting becomes unreliable.

Data quality testing has evolved from a purely technical IT function to a shared responsibility between technical teams and business users. Data engineers establish the testing frameworks and automated checks, while business users define quality standards based on operational needs.

This collaboration is especially crucial in today's self-service analytics environments, where more users access and transform data without technical oversight. Organizations must balance democratizing data access with maintaining governance, ensuring that when business users prepare data, they do so within established quality frameworks that maintain data integrity across the enterprise.

Where and when should you perform data quality testing?

Data quality testing needs to happen throughout your data's journey to catch problems early. Here are the key checkpoints:

- At ingestion: Perform data quality checks on incoming data against expected structures. A robust data ingestion strategy stops bad data at the front door.

- During transformation: Apply business rules and perform data quality checks on relationships. Effective data transformation processes keep information logical and aligned with business needs.

- Pre-analytics verification: Ensure your processed data represents what it should before anyone makes decisions with it.

- Post-load reconciliation: Confirm data moved correctly from source to destination.

With more people preparing their own data, automated data quality checks embedded in workflows help maintain trust while giving users freedom.

Key components of data quality testing

Data quality testing covers several essential elements:

- Completeness: Making sure all needed data exists. Every customer record needs a valid email address for you to reach them.

- Accuracy: Checking data is correct against known sources. Product prices in your system should match the official pricing.

- Consistency: Ensuring data aligns across systems. A customer's information should match between your CRM and billing system. They're essential for maintaining trust in your analytics and can be facilitated by unifying data lakes and warehouses.

- Timeliness: Confirming data is current enough to use. Sales data that's a week old might be useless for daily decisions.

- Validity: Verifying data follows proper formats. Dates need to be actual dates, not random text.

- Uniqueness: Preventing duplicates that skew your analysis or cause operational problems.

As your data grows exponentially, maintaining these components gets harder but becomes even more critical for preserving trust.

Data quality testing vs. data quality checks

Data quality testing is the full mechanic's inspection, more thorough and complex. It might involve checking order data across multiple systems, verifying relationships between fields, and confirming calculations are correct.

What are data quality checks?

Data quality checks are individual validations you can automate and build into workflows. They let non-technical users maintain quality without needing to write code. Data quality checks are like your car's dashboard indicators—quick signs of a problem. They might verify that customer IDs in your orders actually exist in your customer database.

Checks give quick insights while comprehensive testing ensures your data meets all criteria throughout its life. You need both: checks for immediate feedback during processes, and testing for deeper assurance of overall data health.

A smart mix of quick data quality checks and thorough testing creates a strong quality framework, giving you both speed and confidence in your data.

7 essential data quality checks for businesses

Strong data quality checks let organizations work with data at scale without sacrificing accuracy. As you grow, your approach to these checks should become more sophisticated. Good news: many checks can be implemented in visual pipeline tools without coding expertise, making them accessible to both engineers and analysts.

1. Completeness checks

Completeness checks confirm that required fields contain data. Think of it as making sure no critical information is missing. You might set a rule that customer records must always include email addresses.

Implementing effective completeness checks requires understanding business context and impact. For example, an incomplete shipping address might block order fulfillment entirely, while a missing preference field merely reduces personalization opportunities.

Advanced implementations track completeness trends over time, alerting teams when completion rates decline below historical patterns—often an early indicator of upstream system issues. Consider implementing progressive completeness requirements that evolve as data flows through your pipeline: raw ingestion might accept partial records, while consumption-ready datasets enforce stricter standards.

In modern data platforms, you'll often set thresholds for missing data. Critical fields like customer IDs might require 100% completeness, while others can tolerate some gaps.

Remember that "complete" doesn't always mean perfect—it means having enough data to make good decisions. A marketing dataset might be fine with 95% of email addresses present, but financial data might need every transaction amount.

2. Consistency checks

Consistency checks ensure your data tells the same story across different datasets. They're essential for maintaining trust in your analytics. You might implement cross-field validation rules to verify that customer data matches between sales and marketing systems—same IDs, names, and contact details.

When data doesn't match up, the consequences can be serious. Different sales figures in different reports create confusion and erode confidence. As more people access data through self-service tools, keeping everything consistent becomes both harder and more important. Strategies like overcoming data silos are crucial for ensuring data consistency.

Modern consistency checks operate at multiple levels, from field-level validations to complex cross-system reconciliations. For instance, customer lifetime value should align with historical purchase totals; inventory counts should reconcile between warehouse and finance systems.

Implementing hash-based comparison techniques can efficiently detect inconsistencies in large datasets without requiring exhaustive record-by-record review. Organizations with mature data governance establish "golden records" or single sources of truth for key entities, against which all other representations are validated.

This approach prevents the proliferation of conflicting versions that undermine analytical confidence and decision quality.

3. Validity checks

Validity checks make sure data follows the required formats and business rules. They verify that email addresses look like email addresses, dates fall within realistic ranges, and numbers make business sense.

You'll often use pattern matching for format validation and constraint rules for business logic. For example, customer ages must be between 0 and 120, and order amounts can't be negative.

Invalid data creates real business problems. Wrong contact information means failed communications and unhappy customers. Document your validity rules clearly so everyone applies them consistently.

Beyond simple format validation, sophisticated validity checks incorporate contextual business knowledge. For example, while any value between 0-100 might be technically valid for a percentage field, certain metrics like conversion rates typically fall within narrower industry-specific ranges.

Temporal validity also matters—promotional codes should only activate during campaign periods; seasonal product availability should align with actual seasons. Leading organizations maintain centralized business rule repositories that define validity standards, ensuring these rules evolve alongside changing business requirements.

These repositories often include metadata explaining the business justification behind each rule, improving understanding and compliance across technical and business teams.

4. Freshness checks

Freshness checks verify that your data is current enough to be useful. They're critical in fast-moving businesses where decisions depend on up-to-date information.

You might use timestamps, modification flags, or time-based policies. Different data types need different freshness standards—dashboards might need data no older than 5 minutes, while quarterly reports might be fine with daily updates.

Stale data can be worse than no data at all. Imagine promising products to customers based on yesterday's inventory counts. By enhancing data literacy, teams can better understand the importance of data freshness and implement checks appropriately.

Effective freshness checks require nuanced approaches tailored to business velocity. Organizations should define and document service-level agreements (SLAs) for data freshness based on business impact and operational requirements. For instance, e-commerce inventory might require 5-minute refresh cycles, while customer demographic information could update weekly.

Implementing differential freshness monitoring—comparing current lag times against historical patterns—can reveal processing bottlenecks before they impact decision-making. Advanced implementations track both data freshness (when data was last updated) and processing freshness (when pipelines last ran successfully), providing comprehensive visibility into data timeliness across complex ecosystems.

When freshness requirements conflict with processing constraints, consider implementing confidence degradation metrics that indicate when data ages beyond optimal freshness.

5. Anomaly detection

Anomaly detection spots data that doesn't follow normal patterns. It's your early warning system for both data issues and business changes.

Methods range from simple statistical rules to advanced machine learning, often implemented within AI-powered ETL tools. For business users, this means identifying things like sudden traffic spikes, unusual sales patterns, or outlier transactions.

This detection works like a smoke alarm for both data problems and business opportunities. An e-commerce company might use it to catch potential fraud (suspiciously large orders) and emerging trends (unexpected product category growth).

However, implementing robust anomaly detection requires balancing sensitivity with specificity. Too sensitive, and you'll overwhelm teams with false positives; too permissive, and genuine issues go undetected.

Effective implementations often employ multi-layered approaches—simple statistical methods for common patterns, machine learning for complex seasonal data, and deep learning for high-dimensional datasets with subtle interrelationships. Context-aware anomaly detection also considers business calendars (accounting for holidays, promotions, or fiscal periods) to reduce false alarms during expected fluctuations.

Leading organizations implement collaborative feedback loops where business users confirm or reject detected anomalies, continuously training systems to better distinguish between genuine problems and normal business variability, creating increasingly intelligent detection over time.

6. Relationship and referential integrity checks

These checks verify that connections between datasets remain intact. Every order should link to a valid customer, and every product in an order should exist in your catalog.

In structured environments, you'll use foreign key constraints. For semi-structured data, you'll need custom logic. Maintaining these relationships prevents serious problems like orders that can't be fulfilled or analytics showing wrong totals.

As data becomes more distributed and self-service analytics become more common, keeping these connections intact gets harder. Strong data quality checks in your data pipeline prevent many downstream headaches.

Beyond simple foreign key relationships, comprehensive referential integrity extends to complex hierarchical and many-to-many relationships spanning diverse data storage technologies. For example, maintaining consistency between product hierarchies across e-commerce, inventory, and financial systems requires sophisticated rulsets that account for different hierarchical representations.

Multi-dimensional integrity checks validate that aggregation relationships hold true—ensuring department totals equal the sum of team totals, or that geographic hierarchies maintain consistency from global to regional to local levels.

Organizations with mature data governance implement bi-directional integrity monitoring that detects both orphaned child records and parent records missing expected children, providing complete relationship validation across the data ecosystem.

7. Distribution and statistical checks

Distribution checks confirm your data follows expected statistical patterns. You might verify that customer age distributions remain consistent over time, or that order values stay within historical ranges.

Simple statistical methods can be automated in your pipeline. Visualizations make these checks accessible to business users, helping them quickly spot changes in patterns.

Big shifts in distributions might signal data problems or important business changes. Implementing a medallion architecture can aid in managing data quality and governance during such checks. A sudden change in new customer ages could be a data issue, or it might show your latest marketing campaign is attracting a different demographic.

Sophisticated distribution checks implement multivariate analysis rather than examining single variables in isolation. While a change in average order value alone might not raise concerns, simultaneous shifts in geographic distribution, product mix, and payment methods likely indicate either a significant data issue or a substantial business change requiring investigation.

Leading organizations employ distribution fingerprinting—creating statistical signatures for datasets that enable quick comparison between historical and current patterns. This approach efficiently detects subtle distribution changes across dozens or hundreds of attributes simultaneously.

Temporal distribution checks that account for seasonality, day-of-week patterns, and known business cycles reduce false positives while maintaining sensitivity to genuine anomalies. For maximum effectiveness, calibrate distribution thresholds based on business impact—tighter tolerances for financial metrics directly affecting reporting accuracy, broader ranges for exploratory analytics.

Tools and frameworks for implementing data quality checks

As data becomes the backbone of business decisions, robust data quality checks are non-negotiable. Let's see how these tools help maintain data integrity in modern environments.

dbt data quality checks

dbt has become the Swiss Army knife for many data teams, with built-in testing that fits seamlessly into transformation workflows. Its generic tests include ready-to-use data quality checks like not_null, unique, accepted_values, and relationships, which can be configured in YAML files for quick implementation of common quality validations.

For complex business rules, dbt supports custom SQL tests, providing flexibility to embed nuanced logic directly in your transformation layer. The dbt-expectations package further expands testing capabilities with pre-built tests for distributions, value ranges, and row comparisons.

Within the dbt framework, teams can assign severity levels to tests, controlling how failures are handled. This granular control means critical issues can block deployments while minor ones might just log warnings, creating a practical approach to quality management that aligns with business priorities.

Prophecy's platform further enhances these capabilities by natively integrating with dbt, allowing teams to incorporate both standard and custom dbt tests directly into their Prophecy projects.

This integration is particularly powerful for organizations looking to democratize data quality management—data engineers can define and implement sophisticated quality checks using dbt, which business users can then leverage through Prophecy's visual interface without needing to write code.

This approach bridges the traditional gap between technical quality frameworks and business-friendly tools, enabling organizations to scale their quality practices across all data users.

By making DBT's robust testing capabilities accessible through a low-code interface, Prophecy empowers more teams to participate in data quality management while maintaining centralized governance and technical rigor.

The Great Expectations framework

Great Expectations serves as a Python-based validation framework that helps teams maintain quality throughout the data lifecycle. Its expectation suites function as collections of data rules that serve dual purposes—both as automated tests and as clear documentation.

The framework's data profiling capabilities can automatically generate expectations from data samples, significantly reducing the effort required for initial adoption.

Integration flexibility represents one of Great Expectations' key strengths. The tool works with various orchestration platforms and data warehouses, and can even be incorporated within dbt pipelines for comprehensive coverage.

Great Expectations particularly excels at creating human-readable documentation alongside technical validation, effectively connecting data engineers with business stakeholders through shared understanding of quality standards.

Open-source solutions

Beyond the big names, several open-source tools contribute meaningfully to the data quality ecosystem. Apache Griffin provides a unified platform for measuring and analyzing data quality across diverse environments.

Amazon's Deequ library, built on Apache Spark, defines "unit tests for data" that efficiently measure quality in large datasets without sacrificing performance. OpenRefine, while primarily designed for data cleaning, offers powerful features for exploring and transforming datasets that complement formal quality processes.

Modern data platforms

Modern platforms like Databricks deliver integrated solutions for quality management throughout the data lifecycle. Delta Lake, an open-source storage layer, provides transaction support, scalable metadata handling, and unified processing, all supporting reliable data quality checks in complex environments.

These platforms seamlessly work with specialized tools like Great Expectations and dbt, allowing teams to implement data quality checks directly within their processing pipelines.

Built-in profiling capabilities in modern platforms offer automated data analysis, helping teams quickly identify potential issues before they propagate through systems. These integrated approaches help implement data quality checks that scale effectively with growing data volumes and complexity, maintaining performance while enhancing reliability.

Effective data quality requires selecting the right combination of tools, frameworks, and practices for your specific environment. Whether you choose dbt for transformation-centric testing, Great Expectations for comprehensive validation, or modern platform capabilities, the key is integrating data quality checks throughout the data lifecycle.

However, Akram Chetibi, Director of Product Management at Databricks, emphasizes how "successful data quality programs require both technical frameworks and organizational alignment. When teams share the same quality standards across the data lifecycle, they create a foundation for trusted analytics that scales with business growth."

This ensures quality isn't treated as an afterthought but remains core to data management, ultimately leading to more reliable analytics and better business decisions.

Balancing access and trust with governed self-service data quality checks

As organizations still struggle with the quality-access balance, self-service analytics now needs built-in guardrails to prevent bad data from spreading. Central data teams can't manually review everything, so the answer lies in automated data quality checks that give users freedom while protecting data integrity.

Automated data quality checks throughout the data lifecycle help organizations scale analytics without sacrificing trust. By building validation rules, anomaly detection, and profiling directly into pipelines and self-service tools, companies catch issues early and stop flawed data from contaminating systems.

Here’s how Prophecy helps tackle these challenges:

- Visual data quality rules: Define and manage data quality checks without coding.

- Automated profiling: AI-powered analysis to spot anomalies and suggest data quality rules.

- Centralized governance: One platform to manage quality policies across your organization.

- Real-time monitoring: Continuous data quality checks with alerts for violations.

- Self-service enablement: Business users can validate their datasets within safe boundaries.

To overcome the growing backlog of data quality issues that prevent organizations from fully trusting their data, explore How to Assess and Improve Your Data Integration Maturity to empower both technical and business teams with self-service quality monitoring.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The AI Data Prep & Analysis Opportunity

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar