From Data Mess to Business Success Through Strategic Data Curation

Learn how data curation turns your data from a cost center to a growth driver and enhances business success.

Your organization generates more data every month than most companies handled in entire decades just years ago. Yet despite unprecedented access to information, business leaders consistently report that they can't get the insights they need when they need them.

The problem isn't data scarcity—it's the growing gap between raw information and strategic intelligence. While competitors turn data into competitive advantage, many organizations remain trapped in cycles of collection without curation, accumulating vast digital assets that deliver minimal business value.

Let’s explore how strategic data curation breaks this cycle by transforming chaotic information streams into reliable business intelligence that drives growth and competitive differentiation.

What is data curation?

Data curation is the systematic process of organizing, cleaning, and contextualizing raw data to make it reliable, discoverable, and valuable for business decision-making. It goes beyond simple data management by applying domain expertise and business context to ensure information meets specific quality standards and serves defined purposes.

The curation process transforms chaotic data streams into structured assets that business users can trust and understand. This involves validating accuracy, standardizing formats, enriching with metadata, and organizing information according to business logic rather than technical convenience.

Modern data curation combines automated processes with human expertise, leveraging technology to handle routine tasks while applying business knowledge to make contextual decisions about data meaning and quality. This approach scales curation efforts without sacrificing the domain expertise that makes data truly valuable.

Data curation vs data management vs data governance

While these terms are often used interchangeably, they serve distinct purposes in your data strategy:

Data management provides the technical foundation for storing and moving information between systems. Data governance establishes the rules and policies that ensure compliance and security. Data curation sits between these functions, applying business knowledge to make raw data useful for specific purposes.

The most effective organizations integrate these functions rather than treating them as separate activities. Governance policies inform curation standards, while management systems provide the infrastructure that supports both functions.

This integration creates a virtuous cycle where better curation improves data quality, which strengthens governance and reduces management complexity.

Why data curation matters more than collection

Organizations often assume that collecting more data automatically leads to better insights, but volume without context creates noise rather than intelligence. Strategic curation delivers measurable business benefits that raw data collection cannot achieve:

- Accelerated decision-making: Curated data eliminates the weeks typically spent validating and preparing information for analysis, enabling faster responses to market changes and opportunities.

- Improved decision quality: Clean, contextualized data, supported by effective data quality monitoring, reduces the risk of conclusions based on errors, inconsistencies, or misunderstood information that plague uncurated datasets.

- Enhanced collaboration: Standardized, well-documented data enables teams across departments to work with consistent information, eliminating conflicts between different analyses of the same business questions.

- Reduced operational costs: Automated curation processes eliminate repetitive manual work while preventing the downstream costs of decisions based on poor-quality data.

- Increased innovation capacity: Teams spend more time generating insights and less time hunting for reliable data, freeing creative energy for strategic analysis and experimentation.

The return on curation investment typically exceeds the cost of raw data collection by an order of magnitude. While storage and processing costs decrease over time, the value of context and quality increases as business complexity grows.

Steps to effective data curation

Implementing strategic data curation requires a systematic approach that balances automation with human expertise. Each component addresses specific challenges while building toward a comprehensive curation capability that serves your entire organization:

- Data discovery and profiling: Effective curation begins with understanding what data you have and its current condition through cataloging sources and revealing quality issues. Modern discovery tools automatically scan your data landscape, identifying new sources and changes while profiling algorithms analyze distributions and suggest improvements.

- Quality assessment and cleansing: This step involves improving data quality by identifying and correcting errors, standardizing formats, and resolving inconsistencies that would undermine analytical results. Quality assessment should focus on metrics and dimensions that matter for business decisions: accuracy, completeness, consistency, and timeliness.

- Enrichment and contextualization: Raw data rarely contains all the context needed for business analysis, so enrichment adds valuable information from external sources or derived calculations. Contextualization provides business meaning that makes data interpretable by non-technical users through clear definitions and explanations.

- Organization and accessibility: The final component involves organizing curated data for easy discovery and use by business teams through logical structures and appropriate access controls. Organization should reflect business logic rather than technical convenience, demolishing data silos and reducing confusion.

How medallion architecture overcomes data curation challenges

The medallion architecture provides a structured framework for systematic data curation and data integration, addressing enterprise-scale challenges. This approach, pioneered by Databricks' cloud data platform, creates sustainable solutions for organizations struggling with traditional data management approaches.

Bronze layer: Raw data ingestion with quality gates

The bronze layer serves as your organization's foundation for all downstream curation activities, capturing raw data from source systems while implementing essential quality controls that prevent problems from propagating through your data ecosystem.

This layer focuses on faithful replication of source data with minimal transformation, preserving original context while adding metadata that supports downstream curation activities. Quality gates at this stage focus on structural validation rather than business logic, ensuring that subsequent layers can process data effectively.

Modern bronze implementations include automated schema detection and evolution capabilities that adapt to changes in source systems without requiring manual intervention. This automation prevents the brittleness that often affects traditional data pipelines when source systems change.

The bronze layer also establishes the lineage tracking that becomes crucial for understanding data relationships and debugging quality issues in downstream layers. This foundational metadata investment pays dividends throughout the curation lifecycle by enabling rapid troubleshooting and impact analysis.

Silver layer: Cleaned and standardized business data

The silver layer transforms raw bronze data into cleaned, standardized formats that serve as the foundation for business analysis. This is where most traditional curation activities occur, including data cleansing, standardization, and basic enrichment that makes information suitable for business use.

Silver layer processing applies business rules and domain knowledge to resolve quality issues identified in the bronze layer. This includes standardizing formats, resolving inconsistencies, and enriching data with additional context that business users need for analysis.

The silver layer enables reusable curation logic that can be applied consistently across different business use cases. Rather than recreating similar cleansing processes for each new analysis, teams can leverage standardized silver layer assets that maintain quality while accelerating development.

This layer also provides the ideal location for implementing data governance controls that ensure consistency without impeding business agility. Access controls, quality monitoring, and compliance checks can be embedded in silver layer processing without affecting end-user experience.

Gold layer: Analytics-ready data products

The gold layer creates purpose-built data products optimized for specific business use cases, representing the culmination of your curation efforts in formats that directly serve analytical and operational needs.

Gold layer assets are designed with specific consumers in mind, incorporating the business logic and context that makes information immediately useful for decision-making. This might include pre-calculated metrics, industry-specific enrichments, or customized formats that match existing business processes.

The gold layer enables self-service analytics by providing data in formats that business users can consume directly without requiring additional technical expertise. This democratization reduces bottlenecks while ensuring users work with properly curated, consistent information.

Gold layer data products also serve as the integration point with business applications, providing clean APIs and interfaces that support operational use cases beyond traditional analytics. This operational integration maximizes the value of curation investments by supporting both analytical and transactional business needs.

How democratized data curation frees organizations from engineering bottlenecks

Modern organizations need to balance the speed and flexibility of self-service curation with the consistency and quality that enterprise governance requires. This balance is achievable through platforms that embed controls directly into user workflows rather than imposing them as separate approval processes.

Reduce analyst dependency on engineering teams

Traditional curation approaches create artificial dependencies where business analysts must rely on engineering teams for even basic data preparation tasks. This dependency creates delays that frustrate business users while consuming engineering resources that could be better used for strategic projects.

The fundamental issue is that most curation platforms require technical skills that business analysts don't possess. SQL programming, data pipeline development, and infrastructure management are specialized capabilities that shouldn't be prerequisites for business data analysis.

Modern self-service analytics platforms provide visual interfaces that enable business users to perform sophisticated curation tasks with low-code. These interfaces generate the technical implementation automatically while maintaining professional standards for performance, security, and maintainability.

The key is providing abstraction layers that hide technical complexity while preserving full functionality. Business users can focus on applying their domain expertise to data curation while platforms handle the underlying technical implementation automatically.

Scale curation expertise across business domains

Traditional curation approaches assume that technical specialists can understand and apply business context across all organizational domains. This assumption breaks down as companies grow and data needs become more specialized across different business functions.

Each business domain—whether marketing, finance, operations, or sales—has unique data requirements, quality standards, and analytical needs that generic technical approaches cannot address effectively. Domain experts understand the nuances of their data that technical teams simply cannot acquire without years of specialized experience.

Modern self-service platforms enable domain experts to apply their specialized knowledge directly to curation processes, creating higher-quality results than technical teams working in isolation. This approach aligns with data mesh principles, scaling curation expertise by distributing it to where business knowledge resides rather than centralizing it in technical teams.

The key is providing platforms that capture and codify domain expertise in reusable workflows, enabling knowledge sharing across similar business functions while maintaining the specialized understanding that makes curation truly valuable.

Prevent ungoverned data sprawl

Self-service capabilities without appropriate governance quickly lead to data sprawl, where different teams create incompatible versions of similar analyses. This sprawl undermines the consistency and collaboration that effective curation should enable.

The traditional response to this risk is restricting self-service capabilities, but this approach simply recreates the bottlenecks that make self-service necessary. A better approach involves implementing self-service governance, embedding controls directly into self-service platforms so that compliance happens automatically.

Modern platforms now enforce data quality standards, security policies, and consistency requirements without requiring users to understand or manually implement these controls. Users work within guardrails that ensure compliance while providing the flexibility they need for effective analysis.

This embedded governance approach also provides audit trails and lineage tracking that support compliance requirements without imposing additional work on business users. Governance becomes an invisible enabler rather than a visible obstacle.

Build organizational curation capabilities

Effective curation requires ongoing capability development that goes beyond training on specific tools or techniques. Organizations need systematic approaches to building and maintaining curation expertise across both technical and business teams.

Self-service platforms accelerate this capability development by enabling learning through experimentation rather than formal training programs. Business users can develop curation skills gradually while working on real business problems, building confidence and expertise through practical application.

The most effective platforms include built-in guidance and best practices that help users improve their curation techniques over time. This embedded learning approach builds organizational capability more effectively than one-time training sessions that quickly become outdated.

Capability building also involves creating communities of practice where users can share techniques, troubleshoot challenges, and develop organizational standards collaboratively. These communities create sustainable knowledge sharing that continues beyond any individual project or initiative.

The future of data curation: AI-powered and business-driven

Artificial intelligence is fundamentally transforming how organizations approach data curation, shifting from manual, technical processes to automated, business-driven workflows. This transformation addresses persistent challenges while opening new possibilities for strategic data use.

The most significant development is AI's ability to understand business context alongside technical data structures. Traditional curation focused primarily on technical quality issues like format consistency and completeness.

AI-powered approaches, especially in AI in ETL, can now identify business logic errors, suggest enrichments based on industry knowledge, and optimize data organization for specific analytical use cases.

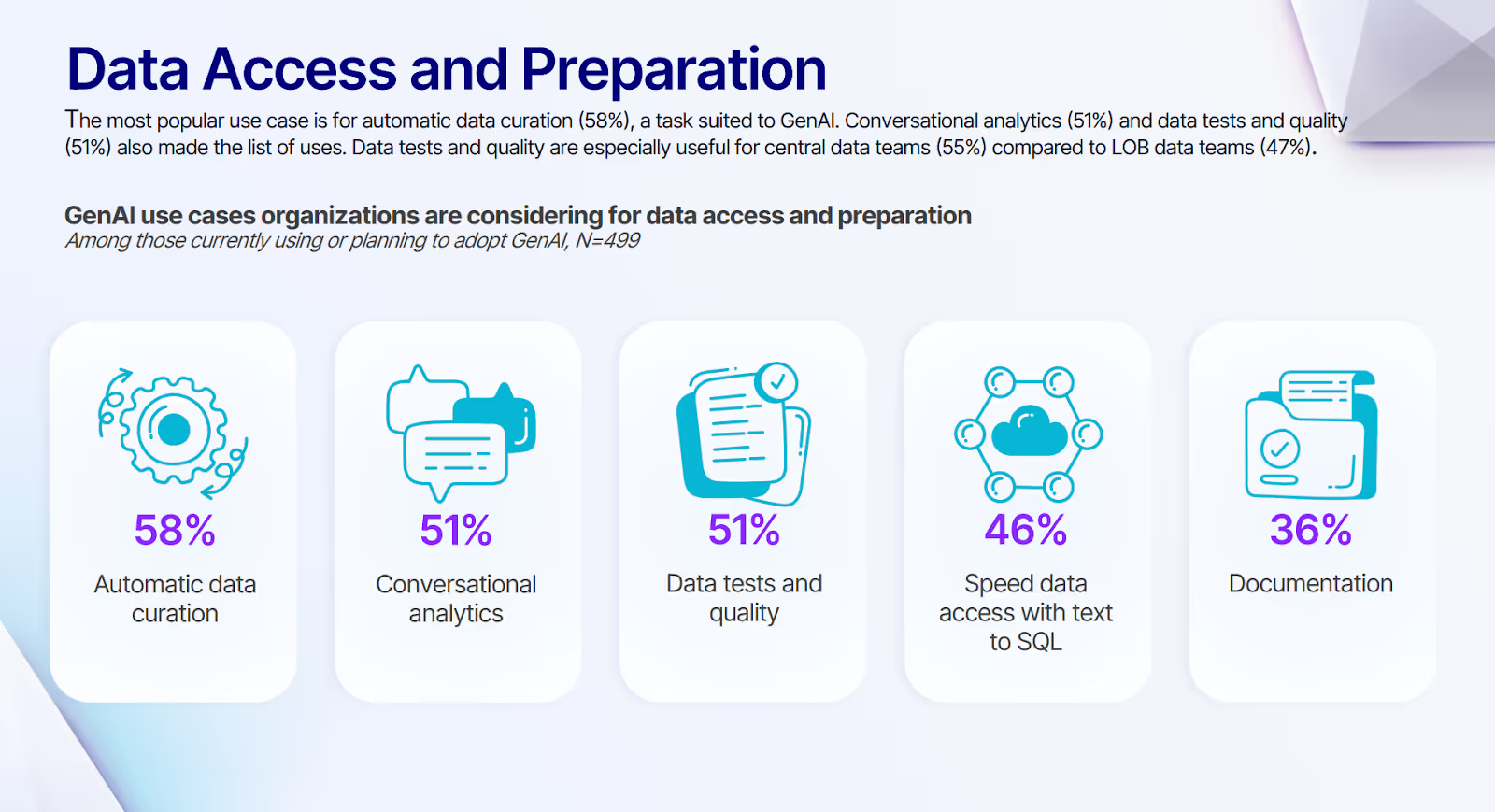

As a result, automatic data curation has now emerged as the most popular GenAI use case, as evidenced by our survey, with 58% of organizations either using or planning to adopt AI for this purpose.

This adoption reflects AI's particular suitability for curation tasks that require pattern recognition, consistency checking, and rule application at scale. Organizations report significant time savings and quality improvements when AI handles routine curation tasks while human experts focus on strategic decisions.

The integration of business intelligence into curation workflows represents a paradigm shift from technical data processing to strategic asset creation. AI systems can now understand business terminology, recognize industry-specific patterns, and suggest optimizations based on how data will actually be used for decision-making.

This business-aware curation produces data assets that serve strategic purposes rather than just meeting technical requirements.

However, the most transformative aspect isn't the technology itself but how it democratizes curation capabilities. Business domain experts can now guide curation processes directly, applying their specialized knowledge without requiring technical intermediaries.

This democratization eliminates bottlenecks while ensuring that curated data reflects actual business needs rather than technical assumptions about what might be useful.

Evolve from data collection to strategic intelligence creation

The challenge most organizations face isn't identifying what data to curate, but creating sustainable processes that scale with business growth while remaining responsive to changing analytical needs. Traditional approaches that rely on central teams and manual processes simply cannot keep pace with modern data volumes and business velocity.

Here’s how Prophecy helps through a comprehensive data integration platform:

- Visual curation workflows that enable business experts to apply domain knowledge directly while automatically generating production-quality code and maintaining enterprise governance standards

- Medallion architecture support that implements bronze, silver, and gold layers seamlessly, providing the structured approach to curation that enterprises need while maintaining flexibility for diverse business requirements

- AI-powered assistance that accelerates routine curation tasks while learning from business decisions to improve recommendations and automate increasingly sophisticated data preparation workflows

- Collaborative environments where business analysts and data engineers work together on the same assets, eliminating translation errors and accelerating development cycles through shared understanding

- Embedded governance controls that ensure compliance and quality without creating friction, automatically enforcing policies and maintaining audit trails without requiring additional effort from business users

- Enterprise integration capabilities that connect seamlessly with existing data infrastructure while providing the scalability and performance required for strategic business applications

To prevent costly architectural missteps that delay data initiatives and overwhelm engineering teams, explore 4 data engineering pitfalls and how to avoid them to develop a scalable data strategy that bridges the gap between business needs and technical implementation.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.