9 Steps to Build Reusable Data Pipelines That Eliminate Duplicate Development

Build reusable data pipelines that multiple teams can use by following these 9 steps.

While organizations invest millions in sophisticated data platforms, teams continue rebuilding the same customer segmentation logic, revenue calculations, and data quality checks across dozens of independent projects.

Our survey data shows that the top data processing challenge organizations report is the excessive time it takes to build new data pipelines. This is a scaling crisis that prevents organizations from capitalizing on their data investments.

The challenge intensifies in large organizations where teams often don't know each other's work exists. Marketing builds customer attribution pipelines while sales creates similar customer journey analysis, and finance develops yet another variation for revenue tracking.

These nine proven steps transform data engineering from isolated craft work into collaborative, scalable systems that accelerate innovation while reducing development costs.

Step #1: Establish pipeline discovery and cataloging systems

The foundation of pipeline reusability lies in solving the discovery problem that plagues large organizations. Teams can't reuse what they can't find, and most enterprises lack comprehensive visibility into existing data assets and processing logic across different departments.

Modern data catalogs provide the infrastructure needed to make pipeline components discoverable and understandable. Rather than relying on tribal knowledge or hoping teams will stumble across relevant work, implement centralized systems that document available pipelines, their business logic, data sources, and usage patterns.

Start by inventorying your existing pipeline landscape to understand what already exists across different teams and business units. Customer data processing, financial calculations, and operational metrics often appear in multiple variations throughout the organization. This audit reveals both reuse opportunities and standardization priorities.

Create searchable metadata that enables teams to find relevant pipeline components based on business function rather than technical implementation. When marketing teams search for "customer segmentation," they should discover relevant pipelines regardless of which technology stack originally implemented them.
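Here is a minimal sketch of function-based search. The Python below models catalog entries tagged with business functions and looks them up without regard to the technology stack that implemented them. The `CatalogEntry` class, the `search_by_function` helper, and the sample entries are all hypothetical; a real deployment would query your catalog's own search interface rather than an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """One pipeline component as registered in a hypothetical central catalog."""
    name: str
    owner_team: str
    tech_stack: str
    business_functions: set[str] = field(default_factory=set)
    description: str = ""


def search_by_function(catalog: list[CatalogEntry], query: str) -> list[str]:
    """Return names of entries tagged with the business function, regardless of tech stack."""
    wanted = query.strip().lower()
    return sorted(
        entry.name
        for entry in catalog
        if wanted in {tag.lower() for tag in entry.business_functions}
    )


catalog = [
    CatalogEntry("rfm_segments", "marketing", "spark_sql", {"customer segmentation"}),
    CatalogEntry("journey_clusters", "sales", "pyspark", {"customer segmentation", "customer journey"}),
    CatalogEntry("revenue_rollup", "finance", "dbt", {"revenue tracking"}),
]

print(search_by_function(catalog, "Customer Segmentation"))
# ['journey_clusters', 'rfm_segments']
```

Both segmentation pipelines surface even though marketing and sales built them on different stacks, because the lookup keys on the business tag rather than the implementation.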

Most importantly, establish governance processes that require teams to document their pipeline logic in the central catalog before deployment. This proactive approach prevents the accumulation of undocumented assets that become impossible to discover or reuse over time, aligning with key data mesh principles.

Step #2: Create standardized reusable components and libraries

Pipeline reusability depends on modular architectures that break complex processing logic into discrete, composable components. Monolithic pipelines serve specific immediate needs, but complementing them with libraries of reusable functions lets teams accelerate future development by combining proven components to meet their own requirements. This modularity is the foundation of effective data products.

Focus your component library on business logic that frequently appears across various use cases. Customer data cleansing, address standardization, revenue calculations, and data quality checks represent prime candidates for reusable components that deliver value across multiple teams and projects.

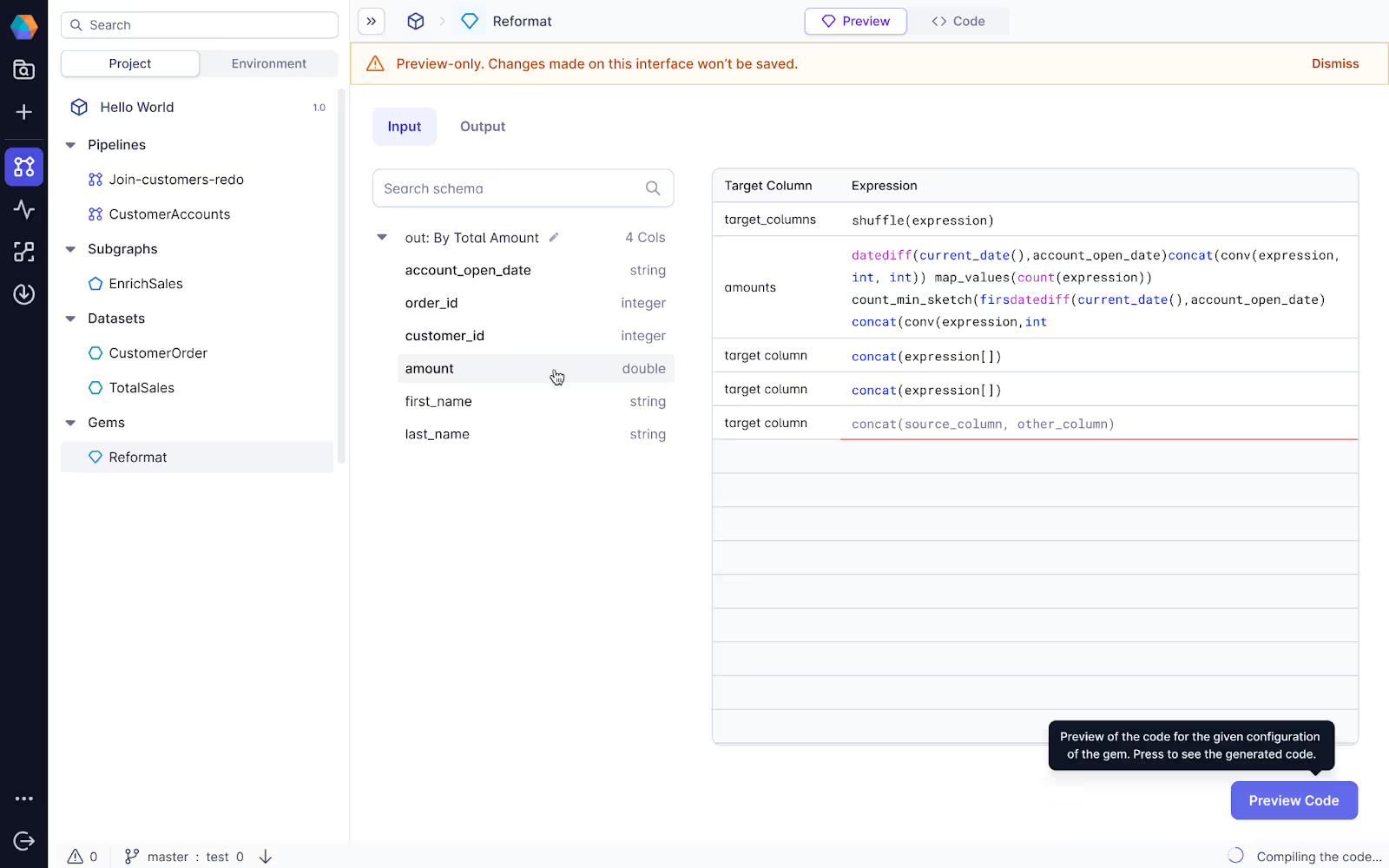

Prophecy's Package Builder enables organizations to create these standardized components through visual interfaces that generate high-quality code behind the scenes. Teams can build reusable transformation logic without extensive coding expertise by leveraging AI-enhanced ETL, while the platform ensures consistent implementation patterns and documentation standards.

When designing reusable components, prioritize flexibility over specific optimization. A customer segmentation component that accepts configurable parameters serves more teams than one optimized for a single department's exact requirements. This design philosophy maximizes adoption while maintaining performance for most use cases.
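To illustrate the configurable-parameter approach, here is a hypothetical `segment_customers` function in Python. The field names, thresholds, and labels are illustrative defaults, not a prescribed segmentation scheme; the point is that each team reuses the same logic by passing its own parameters instead of forking the code.

```python
import bisect


def segment_customers(
    customers: list[dict],
    value_field: str = "annual_revenue",
    thresholds: tuple[float, ...] = (1_000.0, 10_000.0),
    labels: tuple[str, ...] = ("low", "mid", "high"),
) -> dict[str, str]:
    """Assign each customer a segment label by comparing one numeric field to ascending thresholds."""
    if len(labels) != len(thresholds) + 1:
        raise ValueError("labels must have exactly one more entry than thresholds")
    if list(thresholds) != sorted(thresholds):
        raise ValueError("thresholds must be in ascending order")
    result = {}
    for customer in customers:
        # bisect_right counts how many thresholds the value meets or exceeds
        index = bisect.bisect_right(thresholds, customer[value_field])
        result[customer["id"]] = labels[index]
    return result


customers = [
    {"id": "c1", "annual_revenue": 500.0, "orders_per_year": 2},
    {"id": "c2", "annual_revenue": 12_000.0, "orders_per_year": 40},
]

# Marketing uses the defaults
print(segment_customers(customers))
# {'c1': 'low', 'c2': 'high'}

# Another team reuses the same component with its own field and cut-offs
print(segment_customers(customers, value_field="orders_per_year",
                        thresholds=(12,), labels=("occasional", "frequent")))
# {'c1': 'occasional', 'c2': 'frequent'}
```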

Version control becomes critical when multiple teams depend on shared components. Implement semantic versioning that clearly communicates the impact of changes, and provide migration paths when breaking changes become necessary. Teams need confidence that updating shared components won't break their existing pipelines unexpectedly.
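One way to give consuming teams that confidence is an automated upgrade check. The sketch below uses hypothetical `parse_version` and `is_safe_upgrade` helpers and treats an upgrade as safe only when it stays on the same major version. It assumes plain `MAJOR.MINOR.PATCH` strings without pre-release tags, and its handling of pre-1.0 versions is a conservative simplification.

```python
def parse_version(text: str) -> tuple[int, int, int]:
    """Parse a plain MAJOR.MINOR.PATCH string (pre-release and build tags are not supported)."""
    major, minor, patch = (int(part) for part in text.split("."))
    return major, minor, patch


def is_safe_upgrade(current: str, candidate: str) -> bool:
    """True if moving from `current` to `candidate` should not break consumers under semver."""
    cur, cand = parse_version(current), parse_version(candidate)
    if cand < cur:
        return False  # a downgrade is never treated as a safe "upgrade"
    if cur[0] == 0:
        # Before 1.0.0, semver allows anything to change; this sketch treats a minor bump
        # as breaking, similar to how npm and Cargo caret ranges handle 0.y versions
        return cand[:2] == cur[:2]
    return cand[0] == cur[0]


for current, candidate in [("2.3.1", "2.4.0"), ("2.3.1", "3.0.0"), ("0.4.2", "0.5.0")]:
    print(current, "->", candidate, is_safe_upgrade(current, candidate))
```

A check like this can run in each consumer's CI so that minor and patch updates flow in automatically while major versions wait for an explicit migration.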

Step #3: Build cross-team collaboration workflows and channels

Technical infrastructure alone cannot solve the collaboration challenges that prevent effective pipeline reuse. Organizations need communication workflows that connect component creators with potential consumers across departmental boundaries and geographic locations.

The key is enabling self-service access to reusable components while maintaining appropriate governance controls. Teams need the freedom to discover and utilize existing pipeline logic without waiting for central approval, but they also need guardrails that ensure quality and compliance standards.

This governed self-service approach prevents the common trap where democratization leads to ungoverned proliferation of duplicate solutions across the organization.

Create communities of practice around specific data domains where teams can share knowledge, discuss requirements, and coordinate component development. Customer analytics practitioners from marketing, sales, and product teams benefit from regular forums where they can align on common needs and avoid duplicate development.

Rather than forcing teams to submit formal requests for every component enhancement, establish contribution workflows that enable distributed development while maintaining quality standards. Teams should be able to propose improvements, submit code changes, and participate in component evolution without bureaucratic overhead.

Documentation becomes essential for enabling cross-team adoption of reusable components. Teams need clear examples, parameter descriptions, and usage guidelines that help them evaluate whether existing components meet their needs. Poor documentation often drives teams to rebuild components rather than invest time in understanding existing solutions.

Implement feedback mechanisms that capture usage patterns and requests for improvement from component consumers. This information guides component evolution and helps identify opportunities for new reusable assets that would benefit multiple teams across the organization, aligning with DataOps practices.

Step #4: Deploy automated testing and validation across dependencies

Shared pipeline components create complex dependency networks that require sophisticated testing strategies to maintain reliability. When changes to a customer data cleansing component could impact dozens of downstream pipelines, manual validation becomes impossible at enterprise scale.

Comprehensive test suites should validate component behavior under various input conditions and edge cases that different teams might encounter. Customer address data from international markets creates different validation challenges than domestic addresses, requiring test coverage that reflects the full range of organizational usage patterns.
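As a sketch of edge-case coverage collected from several teams, the Python below tests a hypothetical `normalize_postal_code` component against domestic and international inputs. The country rules are deliberately simplified and are not complete postal validation. Plain assertions keep the example dependency-free; in a real suite, `pytest.mark.parametrize` is a common way to express the same case table.

```python
import re


def normalize_postal_code(country: str, raw: str) -> str:
    """Hypothetical shared component: validate and format a postal code for a few countries."""
    code = re.sub(r"[\s-]", "", raw).upper()  # drop spaces and hyphens before validating
    if country == "US":
        if not re.fullmatch(r"\d{5}(\d{4})?", code):
            raise ValueError(f"invalid US ZIP code: {raw!r}")
        return code if len(code) == 5 else f"{code[:5]}-{code[5:]}"
    if country == "CA":
        if not re.fullmatch(r"[A-Z]\d[A-Z]\d[A-Z]\d", code):
            raise ValueError(f"invalid Canadian postal code: {raw!r}")
        return f"{code[:3]} {code[3:]}"
    if country == "GB":
        # Simplified pattern; real UK postcode rules have more exceptions
        if not re.fullmatch(r"[A-Z]{1,2}\d[A-Z\d]?\d[A-Z]{2}", code):
            raise ValueError(f"invalid UK postcode: {raw!r}")
        return f"{code[:-3]} {code[-3:]}"  # inward code is always the last three characters
    raise ValueError(f"unsupported country: {country!r}")


# Edge cases contributed by different consuming teams
VALID_CASES = [
    ("US", "02139", "02139"),              # leading zero must survive
    ("US", " 02139-1234 ", "02139-1234"),  # ZIP+4 with stray whitespace
    ("CA", "k1a0b1", "K1A 0B1"),           # lowercase input
    ("GB", "sw1a1aa", "SW1A 1AA"),
    ("GB", "M1 1AE", "M1 1AE"),            # short outward code
]
INVALID_CASES = [("US", "2139"), ("CA", "123456"), ("FR", "75001")]


def run_edge_case_tests() -> int:
    """Run every case and return the count; raises AssertionError on the first failure."""
    for country, raw, expected in VALID_CASES:
        actual = normalize_postal_code(country, raw)
        assert actual == expected, f"{country} {raw!r}: got {actual!r}, want {expected!r}"
    for country, raw in INVALID_CASES:
        try:
            normalize_postal_code(country, raw)
        except ValueError:
            continue
        raise AssertionError(f"expected ValueError for {country} {raw!r}")
    return len(VALID_CASES) + len(INVALID_CASES)


print(f"{run_edge_case_tests()} edge cases passed")
```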

Integration testing becomes particularly important when components interact with external systems or depend on other shared components. Changes to upstream data sources or dependencies can break component functionality even when the component code itself remains unchanged.
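A lightweight way to catch upstream breakage is a schema contract check that runs before the component executes. In this hypothetical sketch, `EXPECTED_INPUT_SCHEMA` records the columns the component was built against, and `check_contract` compares it with what upstream now delivers. With PySpark, for example, `dict(df.dtypes)` produces a column-to-type mapping in this shape; the sample schemas here are invented for illustration.

```python
# Columns and types this component was built against (hypothetical contract)
EXPECTED_INPUT_SCHEMA = {
    "customer_id": "string",
    "email": "string",
    "signup_date": "date",
}


def check_contract(expected: dict[str, str], actual: dict[str, str]) -> list[str]:
    """List breaking differences between the expected input schema and what upstream now sends.

    Extra upstream columns are ignored, so purely additive changes do not fail the check.
    """
    problems = []
    for column, dtype in expected.items():
        if column not in actual:
            problems.append(f"missing column: {column}")
        elif actual[column] != dtype:
            problems.append(f"type changed for {column}: expected {dtype}, found {actual[column]}")
    return problems


# Upstream renamed `email` and started sending dates as strings
upstream_schema = {
    "customer_id": "string",
    "email_address": "string",
    "signup_date": "string",
    "region": "string",
}
for problem in check_contract(EXPECTED_INPUT_SCHEMA, upstream_schema):
    print(problem)
# missing column: email
# type changed for signup_date: expected date, found string
```

The component code never changed, yet the check flags both breaks before any downstream team sees bad output.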

Create staging environments that mirror production dependencies where teams can validate component changes before deployment. These environments should include representative data from different business units to ensure changes work correctly across various usage scenarios.

Monitor component performance and usage patterns to identify optimization opportunities and potential issues before they impact consuming teams. When a shared component begins consuming excessive resources or producing quality issues, component owners need immediate visibility to address problems proactively.

Step #5: Set up version control for distributed teams

Managing component evolution across distributed teams requires sophisticated version control strategies that balance innovation with stability. Teams need access to the latest improvements while maintaining the option to delay updates that might disrupt critical processes.

Implement branching strategies that enable parallel development of component enhancements while maintaining stable release channels for production usage. Feature branches allow teams to collaborate on improvements without affecting teams that require stable component behavior.

Semantic versioning provides clear communication about the impact of component changes. Patch releases should fix bugs without changing behavior, minor releases can add new features while maintaining backward compatibility, and major releases signal breaking changes that require consumer updates.
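Part of this classification can be automated for data components by comparing output schemas. The hypothetical sketch below treats a removed or retyped output column as a major change, a newly added column as minor, and anything else as a patch, then computes the next version. A schema diff only catches structural breaks; behavioral changes with an unchanged schema still need human judgment.

```python
from enum import Enum


class ChangeType(Enum):
    PATCH = 1  # bug fix, no change to inputs or outputs
    MINOR = 2  # backward-compatible addition, e.g. a new output column
    MAJOR = 3  # breaking change that requires consumer updates


def classify_output_change(old: dict[str, str], new: dict[str, str]) -> ChangeType:
    """Classify a change by comparing old and new output schemas (column name -> type)."""
    removed_or_retyped = any(col not in new or new[col] != dtype for col, dtype in old.items())
    if removed_or_retyped:
        return ChangeType.MAJOR
    if set(new) - set(old):
        return ChangeType.MINOR
    return ChangeType.PATCH


def bump(version: str, change: ChangeType) -> str:
    """Return the next semantic version for a plain MAJOR.MINOR.PATCH string."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change is ChangeType.MAJOR:
        return f"{major + 1}.0.0"
    if change is ChangeType.MINOR:
        return f"{major}.{minor + 1}.0"
    return f"{major}.{minor}.{patch + 1}"


old_schema = {"customer_id": "string", "segment": "string"}
new_schema = {"customer_id": "string", "segment": "string", "segment_score": "double"}
print(bump("2.3.1", classify_output_change(old_schema, new_schema)))  # 2.4.0
```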

Create automated release pipelines that validate component changes, update documentation, and notify consuming teams about available updates. Teams should receive clear information about new features, bug fixes, and any actions required to adopt new versions.

Most importantly, establish rollback procedures that enable quick recovery when component updates cause unexpected issues. Teams need confidence that adopting shared components won't create irreversible dependencies that could strand their pipelines.
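A minimal sketch of per-team rollback follows, assuming every published component version stays available and immutable so that it can be redeployed. The `ReleaseHistory` class is hypothetical; in practice this record usually lives in your deployment tooling or package registry.

```python
class ReleaseHistory:
    """Hypothetical per-team record of which component version is deployed, supporting rollback."""

    def __init__(self) -> None:
        self._deployed: dict[str, list[str]] = {}

    def promote(self, team: str, version: str) -> None:
        """Record that a team has moved to a new version."""
        self._deployed.setdefault(team, []).append(version)

    def current(self, team: str) -> str:
        return self._deployed[team][-1]

    def rollback(self, team: str) -> str:
        """Return the team to its previous version without touching other teams."""
        history = self._deployed.get(team, [])
        if len(history) < 2:
            raise RuntimeError(f"no earlier version recorded for {team!r}")
        history.pop()
        return history[-1]


releases = ReleaseHistory()
releases.promote("marketing", "1.2.0")
releases.promote("finance", "1.2.0")
releases.promote("marketing", "1.3.0")
print(releases.rollback("marketing"))  # 1.2.0
print(releases.current("finance"))     # 1.2.0
```

Tracking versions per consuming team means one team can back out of a bad update immediately without forcing everyone else to roll back.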

Step #6: Create comprehensive documentation systems

Documentation quality often determines whether teams adopt existing components or build new ones from scratch. Incomplete or outdated documentation creates friction that drives teams toward independent development rather than reuse.

Component documentation should include clear business context that helps teams evaluate relevance. Technical specifications matter, but teams first need to understand what business problems the component solves and how it fits into broader analytical workflows. Documenting this context also strengthens data literacy across the organization.

Provide working examples that demonstrate component usage in realistic scenarios. Teams can evaluate component fit more effectively when they see actual implementations rather than abstract parameter descriptions. These examples also accelerate adoption by providing starting points for integration.

Maintain API documentation that stays current with component evolution. When parameter names, input formats, or output schemas change, documentation updates should deploy automatically alongside code changes to prevent confusion and integration errors.
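One way to keep reference documentation in lockstep with code is to generate it from the code itself during each release build. The sketch below uses Python's standard `inspect` module to render a reference entry from a function's live signature and docstring. `dedupe_customers` is a hypothetical component with its body omitted. Dedicated tools such as Sphinx autodoc do this more completely.

```python
import inspect
from typing import Callable


def dedupe_customers(rows: list[dict], key: str = "email", keep: str = "latest") -> list[dict]:
    """Hypothetical shared component: drop customer rows that share the same key value.

    `keep` chooses whether the "latest" or "earliest" row per key survives.
    """
    raise NotImplementedError  # body omitted; only the signature and docstring matter here


def render_reference(func: Callable) -> str:
    """Render a plain-text reference entry from a function's live signature and docstring."""
    sig = inspect.signature(func)
    lines = [f"{func.__name__}{sig}", "", inspect.getdoc(func) or "(no docstring)", "", "Parameters:"]
    for name, param in sig.parameters.items():
        if param.default is inspect.Parameter.empty:
            lines.append(f"- {name} (required)")
        else:
            lines.append(f"- {name} (default: {param.default!r})")
    return "\n".join(lines)


print(render_reference(dedupe_customers))
```

Because the entry is derived from the signature, renaming a parameter or changing a default updates the documentation in the same commit.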

Create contribution guidelines that help teams understand how to propose improvements, report issues, and participate in component development. Clear processes encourage community involvement while maintaining code quality and consistency standards.

Step #7: Establish performance benchmarks and SLA frameworks for shared components

Shared components create new accountability challenges that don't exist with isolated pipeline development. When dozens of teams depend on a single customer data processing component, performance degradation affects the entire organization rather than just one project.

Define clear service level agreements that specify expected performance, availability, and response time requirements for different component categories. Customer-facing analytics components might require sub-second response times, while batch processing components can tolerate longer execution windows in exchange for cost efficiency.

Create automated performance monitoring that tracks component execution times, resource consumption, and error rates across all consuming teams. This visibility enables proactive optimization before performance issues impact business operations, while also providing data for capacity planning and resource allocation decisions.
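The sketch below combines per-category SLA targets with per-team monitoring. The one-second target echoes the sub-second expectation for customer-facing components mentioned above; every other number, and the `Sla` and `ComponentMonitor` names, are hypothetical. The 95th percentile uses the simple nearest-rank method, and a production setup would typically export these metrics to an existing observability stack instead of holding them in memory.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Sla:
    p95_seconds: float     # 95th-percentile execution time target
    max_error_rate: float  # fraction of runs allowed to fail


# Hypothetical targets per component category
SLAS = {
    "customer_facing": Sla(p95_seconds=1.0, max_error_rate=0.001),
    "batch": Sla(p95_seconds=3600.0, max_error_rate=0.01),
}


class ComponentMonitor:
    """Collects per-team run metrics for one shared component and reports SLA breaches."""

    def __init__(self, category: str) -> None:
        self.sla = SLAS[category]
        self.durations: dict[str, list[float]] = defaultdict(list)
        self.failures: dict[str, int] = defaultdict(int)

    def record(self, team: str, seconds: float, ok: bool) -> None:
        self.durations[team].append(seconds)
        if not ok:
            self.failures[team] += 1

    def breaches(self) -> dict[str, list[str]]:
        """Map each team whose runs violate the SLA to a list of human-readable problems."""
        report = {}
        for team, samples in self.durations.items():
            ordered = sorted(samples)
            p95 = ordered[math.ceil(0.95 * len(ordered)) - 1]  # nearest-rank percentile
            error_rate = self.failures[team] / len(ordered)
            problems = []
            if p95 > self.sla.p95_seconds:
                problems.append(f"p95 {p95:.2f}s exceeds {self.sla.p95_seconds:.2f}s")
            if error_rate > self.sla.max_error_rate:
                problems.append(f"error rate {error_rate:.1%} exceeds {self.sla.max_error_rate:.1%}")
            if problems:
                report[team] = problems
        return report


monitor = ComponentMonitor("customer_facing")
for _ in range(20):
    monitor.record("marketing", seconds=0.3, ok=True)
    monitor.record("sales", seconds=2.0, ok=True)
monitor.record("sales", seconds=2.0, ok=False)
for team, problems in monitor.breaches().items():
    print(team, problems)
```

Breaking metrics out by consuming team shows whether a slowdown is component-wide or specific to one team's data volumes or usage pattern.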

Establish escalation procedures for performance issues that ensure rapid resolution when shared components affect multiple teams. Component owners need clear responsibilities for maintaining performance standards, while consuming teams need defined channels for reporting issues and requesting optimizations.

Most importantly, implement resource isolation strategies that prevent one team's heavy usage from degrading performance for other consumers. Proper resource management ensures that shared components provide consistent performance regardless of varying demand patterns across different business units.
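As an in-process illustration of per-team isolation, the hypothetical `TeamQuota` class below caps how many runs each consuming team can have in flight and rejects extra runs instead of letting them crowd out other teams. The limits are invented. Across a cluster you would rely on the execution platform's own mechanisms, such as Spark fair-scheduler pools or separate compute per team, rather than a Python lock.

```python
import threading
from contextlib import contextmanager
from typing import Iterator


class TeamQuota:
    """Caps how many runs of a shared component each consuming team can have in flight at once."""

    def __init__(self, limits: dict[str, int], default_limit: int = 2) -> None:
        self._limits = limits
        self._default_limit = default_limit
        self._semaphores: dict[str, threading.BoundedSemaphore] = {}
        self._lock = threading.Lock()  # guards lazy creation of per-team semaphores

    def _semaphore_for(self, team: str) -> threading.BoundedSemaphore:
        with self._lock:
            if team not in self._semaphores:
                limit = self._limits.get(team, self._default_limit)
                self._semaphores[team] = threading.BoundedSemaphore(limit)
            return self._semaphores[team]

    @contextmanager
    def slot(self, team: str) -> Iterator[None]:
        """Hold one of the team's slots for the duration of a run; reject instead of queueing."""
        semaphore = self._semaphore_for(team)
        if not semaphore.acquire(blocking=False):
            raise RuntimeError(f"{team!r} is at its concurrency limit")
        try:
            yield
        finally:
            semaphore.release()


quota = TeamQuota({"marketing": 1})
with quota.slot("marketing"):
    with quota.slot("finance"):  # finance has its own slots, unaffected by marketing
        print("finance run proceeds while marketing is busy")
```

Rejecting rather than queueing is a design choice: it surfaces capacity problems to the heavy consumer immediately, at the cost of requiring retry logic on their side.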

Step #8: Scale governance across distributed teams and geographies

As reusable pipeline architectures mature, organizations must scale governance frameworks that maintain quality and consistency across distributed teams without creating bureaucratic bottlenecks that slow innovation.

Federated governance models assign component ownership to domain experts while establishing organization-wide standards for quality, security, and compatibility. This balance between central standards and domain ownership is one of the core questions in choosing between data fabric and data mesh architectures. The customer analytics team might own customer data components, while financial calculations belong to the finance technology group.

Create automated compliance checking that validates components against organizational standards, eliminating the need for manual review with every change. Security scans, performance benchmarks, and code quality metrics can gate component releases while enabling teams to iterate quickly on approved changes.
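A release gate can be expressed as a list of independent checks that must all pass before a component version is published. In the hypothetical sketch below, the `ReleaseCandidate` fields stand in for outputs of your real test runner, security scanner, and benchmark job, and the 60-second benchmark limit is arbitrary.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class ReleaseCandidate:
    component: str
    version: str
    has_docs: bool
    failed_tests: int
    high_severity_findings: int  # from whatever security scanner you run
    p95_seconds: float           # from a benchmark run on representative data


# Each check returns an error message, or None if the candidate passes
Check = Callable[[ReleaseCandidate], Optional[str]]


def require_docs(rc: ReleaseCandidate) -> Optional[str]:
    return None if rc.has_docs else "documentation missing"


def require_green_tests(rc: ReleaseCandidate) -> Optional[str]:
    return None if rc.failed_tests == 0 else f"{rc.failed_tests} failing test(s)"


def require_clean_scan(rc: ReleaseCandidate) -> Optional[str]:
    return None if rc.high_severity_findings == 0 else "high-severity security findings"


def benchmark_under(limit_seconds: float) -> Check:
    """Build a check that fails when the benchmark p95 exceeds the limit."""
    def check(rc: ReleaseCandidate) -> Optional[str]:
        if rc.p95_seconds <= limit_seconds:
            return None
        return f"p95 {rc.p95_seconds}s over {limit_seconds}s benchmark"
    return check


STANDARD_CHECKS: list[Check] = [require_docs, require_green_tests, require_clean_scan, benchmark_under(60.0)]


def gate(rc: ReleaseCandidate, checks: list[Check]) -> list[str]:
    """Run every check; an empty result means the release may proceed without manual review."""
    return [message for check in checks if (message := check(rc)) is not None]


candidate = ReleaseCandidate("address_cleanse", "3.1.0", has_docs=False, failed_tests=0,
                             high_severity_findings=1, p95_seconds=42.0)
print(gate(candidate, STANDARD_CHECKS))
# ['documentation missing', 'high-severity security findings']
```

Keeping each standard as a small function lets domain owners add their own checks while the organization-wide list stays the same for everyone.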

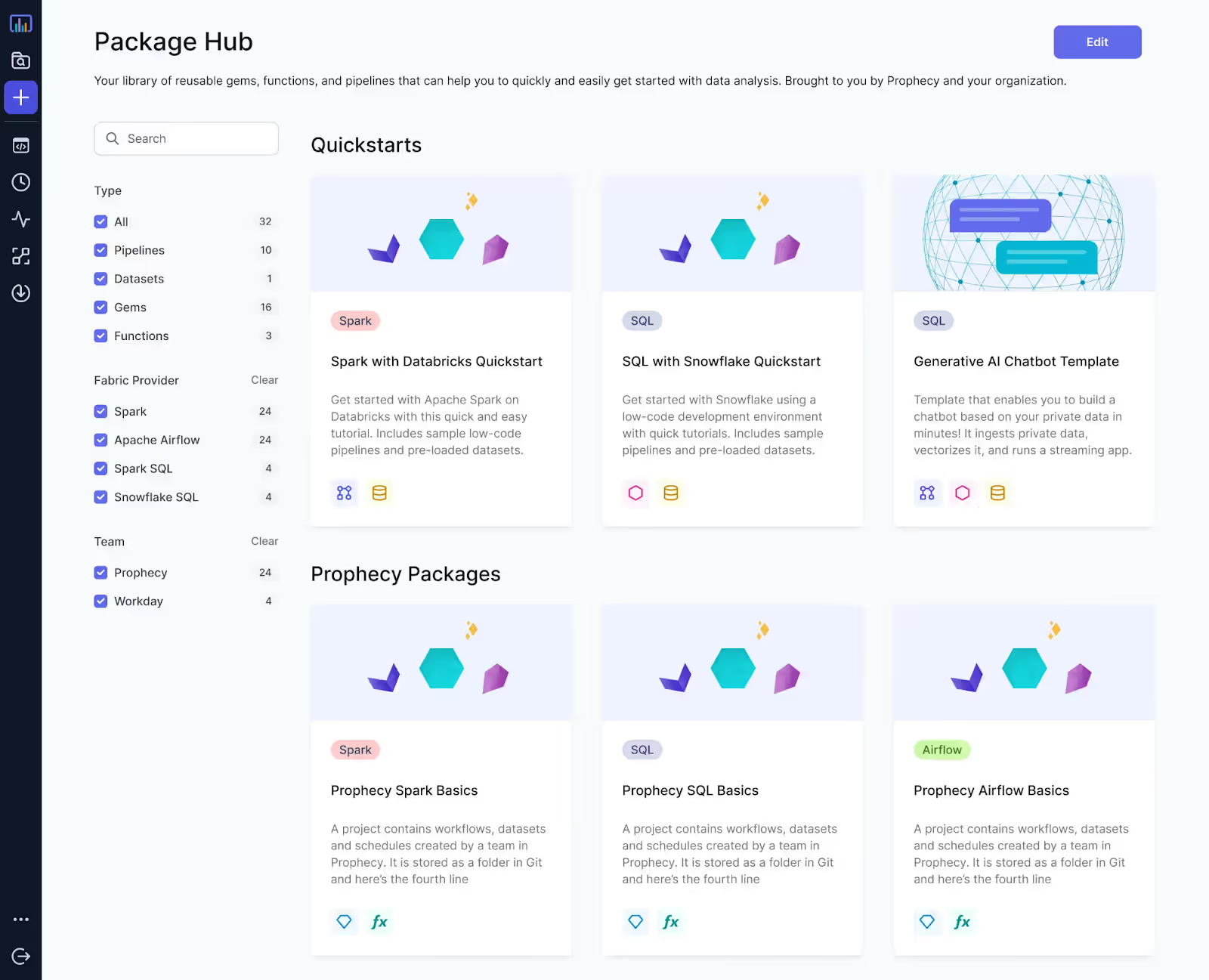

Prophecy's Package Hub offers a centralized marketplace where teams can discover, evaluate, and utilize reusable components throughout the organization. This approach combines the discoverability of centralized catalogs with the distributed ownership models that enable scaling.

Regional considerations become important when organizations operate across different regulatory environments. Components that handle personal data must adapt to varying privacy requirements, while financial calculations might need different implementations for different jurisdictions.

Training and enablement programs help teams across the organization understand how to effectively discover, evaluate, and contribute to the reusable component ecosystem. As adoption scales, organizations need systematic approaches to building component literacy rather than relying on informal knowledge transfer.

Step #9: Monitor usage patterns and optimize for adoption

Component reuse succeeds only when teams actually adopt shared assets instead of building independent solutions. Monitoring usage patterns reveals which components deliver value and which barriers prevent broader adoption across the organization.

Track component adoption rates across different teams and business units to identify successful patterns and potential obstacles. High adoption rates might indicate well-designed components that meet common needs, while low adoption could signal documentation problems, functionality gaps, or discovery issues.
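As a sketch of how adoption could be computed from usage logs, the hypothetical functions below count, for each registered component, the fraction of teams that used it at least once, then flag components under a threshold for review. The team names, events, and 25% threshold are invented for illustration.

```python
from collections import defaultdict


def adoption_rates(
    registered: list[str],
    usage_events: list[tuple[str, str]],
    all_teams: set[str],
) -> dict[str, float]:
    """Fraction of teams that used each registered component at least once in the period."""
    users: dict[str, set[str]] = defaultdict(set)
    for team, component in usage_events:
        users[component].add(team)
    return {c: len(users[c] & all_teams) / len(all_teams) for c in registered}


def low_adoption(rates: dict[str, float], threshold: float = 0.25) -> list[str]:
    """Components below the threshold: candidates for a docs, discovery, or feature review."""
    return sorted(c for c, rate in rates.items() if rate < threshold)


teams = {"marketing", "sales", "finance", "product"}
events = [
    ("marketing", "address_cleanse"),
    ("sales", "address_cleanse"),
    ("finance", "address_cleanse"),
    ("marketing", "address_cleanse"),  # repeat use by the same team counts once
    ("finance", "revenue_calc"),
]
rates = adoption_rates(["address_cleanse", "revenue_calc", "churn_score"], events, teams)
print(rates)                # {'address_cleanse': 0.75, 'revenue_calc': 0.25, 'churn_score': 0.0}
print(low_adoption(rates))  # ['churn_score']
```

Passing the list of registered components matters: a component nobody has ever called would otherwise never appear in usage logs at all.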

Measure development time savings achieved through component reuse to quantify the business value of shared assets. When teams can demonstrate that reusable components reduce project timelines by 40-60%, stakeholders better understand the ROI of investment in reusable architectures.

Analyze component modification patterns to understand how teams adapt shared assets for their specific requirements. Frequent customizations might indicate opportunities to enhance component flexibility or create specialized variants that serve different use cases.

Regular usage reviews help identify components that no longer provide value and can be deprecated, preventing the accumulation of unused assets that create maintenance overhead without delivering benefits.
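A usage review can start from a simple idle-time report. The hypothetical function below lists components that were never run or have not been used within a configurable window; the 180-day default and the sample dates are assumptions. Its output is a list for owners to review, not a signal to delete automatically.

```python
from datetime import date, timedelta
from typing import Optional


def deprecation_candidates(
    last_used: dict[str, Optional[date]],
    today: date,
    idle_days: int = 180,
) -> list[str]:
    """Components never used, or not used within `idle_days`, sorted by name."""
    cutoff = today - timedelta(days=idle_days)
    return sorted(
        name for name, used in last_used.items()
        if used is None or used < cutoff
    )


last_used = {
    "address_cleanse": date(2024, 6, 1),
    "legacy_revenue_v1": date(2023, 9, 15),
    "churn_score": None,  # registered but never run
}
print(deprecation_candidates(last_used, today=date(2024, 6, 30)))
# ['churn_score', 'legacy_revenue_v1']
```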

End pipeline duplication once and for all

Building reusable data pipelines requires more than just good intentions and component libraries. Organizations need platforms that make reusability natural and rewarding rather than an additional burden that teams try to avoid.

Here's how Prophecy accelerates your journey toward reusable pipeline architectures:

- Visual component libraries that enable teams to build and share reusable pipeline logic without extensive coding expertise, dramatically reducing the technical barriers to creating discoverable assets

- Automated governance frameworks that enforce quality standards, security policies, and documentation requirements without creating bureaucratic overhead that discourages component sharing

- Centralized Package Hub that provides discovery, versioning, and dependency management for reusable components across distributed teams and geographies

- Cross-team collaboration tools that enable distributed development and knowledge sharing while maintaining component quality and consistency standards

- Built-in testing and validation that ensures shared components work reliably across different usage scenarios and team requirements

To eliminate redundant pipeline development that wastes engineering resources and slows delivery, explore "How to Build Data Pipelines on Databricks" to learn how reusable, scalable pipeline architectures accelerate development.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

