How Enterprise Analytics Leaders Can Stop Data Quality Problems Before They Multiply

Discover how to stop data quality problems before they scale. Learn six strategies that transform quality crises into competitive advantages.

The proliferation of data sources, accelerated digital transformation, and pressure to democratize analytics have created a perfect storm where data quality problems compound faster than teams can resolve them.

Data leaders now spend their time on quality firefighting rather than strategic initiatives, while business teams increasingly rely on gut instinct when data contradicts their expectations.

This crisis demands more than technical fixes—it requires comprehensive organizational frameworks that address quality challenges at their source while empowering teams to act decisively when problems arise.

In this article, we'll explore how analytics leaders can transform data quality crises into competitive advantages.

What is data quality?

Data quality is the measure of how well data serves its intended purpose for business decision-making, consistently meeting business requirements, and enabling reliable decision-making across organizational systems and processes. The stakes for data quality have never been higher.

As organizations integrate AI and machine learning into their core business processes, poor-quality data can corrupt automated decisions that impact thousands of customers simultaneously.

A single quality failure in training data can propagate through production models for months before detection, amplifying business impact far beyond traditional analytics scenarios.

Yet most organizations approach data quality with the same methodologies designed for simpler, more centralized data environments. The emergence of real-time analytics, streaming data sources, and self-service platforms demands fundamentally different quality frameworks that can scale with organizational complexity.

Data quality vs. data governance vs. data integrity

While these concepts interconnect, they serve distinct functions in enterprise data management:

Dimensions of data quality

Effective quality management requires understanding the specific dimensions that impact business outcomes:

- Accuracy: Data correctly represents real-world entities and events without errors or distortions that would mislead business decisions.

- Completeness: All required data elements are present and populated, eliminating gaps that could compromise analytical conclusions.

- Consistency: Data maintains uniform formats, definitions, and values across different systems and time periods within the organization.

- Timeliness: Information arrives when needed for decisions, balancing freshness requirements with processing time constraints.

- Validity: Data conforms to defined business rules, formats, and acceptable value ranges established for each data element.

- Uniqueness: Records represent distinct real-world entities without problematic duplicates that skew analysis or create operational confusion.

Six strategies analytics leaders can use to solve data quality hurdles

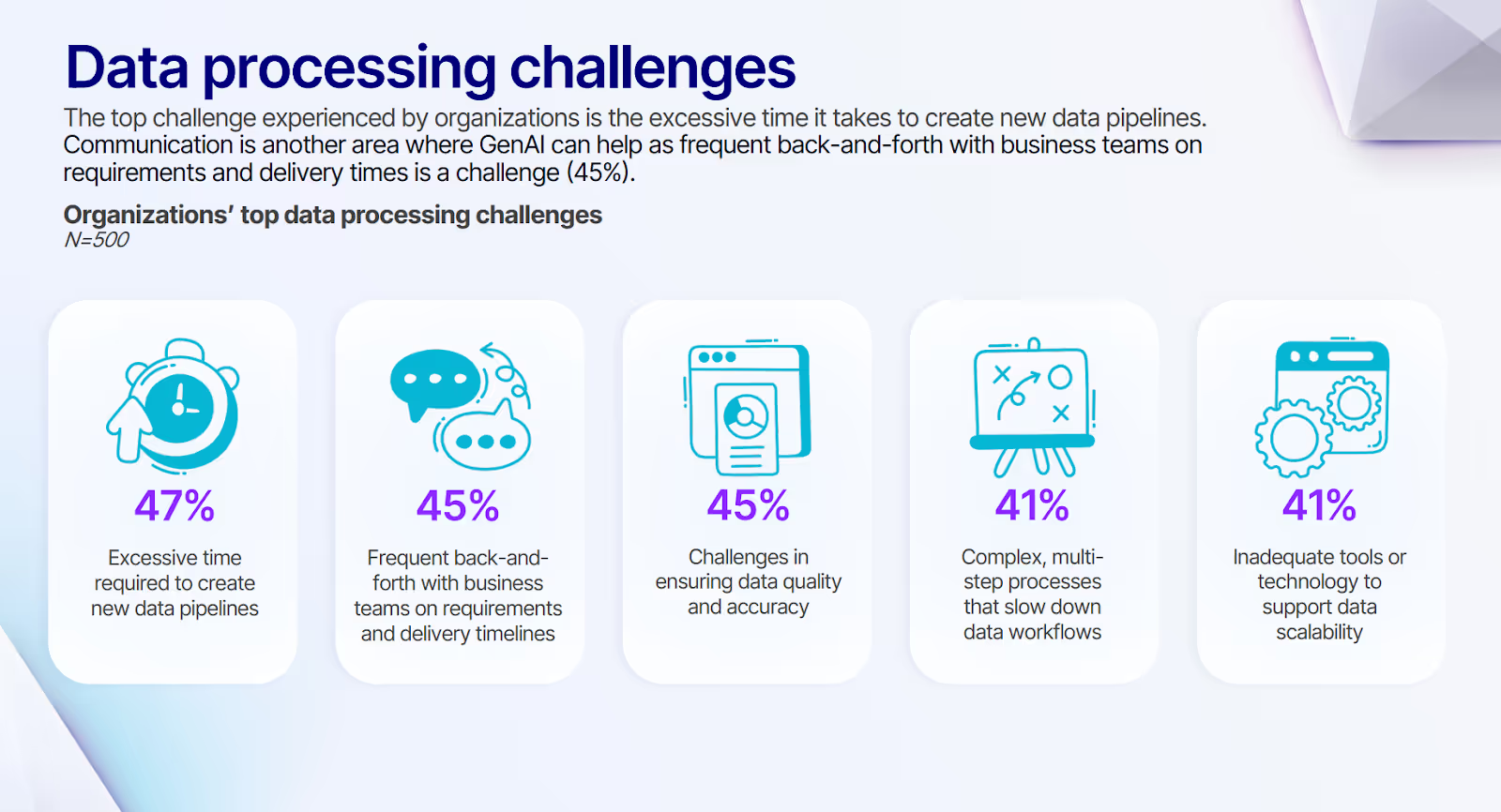

Based on our survey, organizations face a stark reality: 45% report ongoing challenges in ensuring data quality and accuracy. This translates to executives making critical decisions with unreliable information, data teams spending more time fixing problems than creating value, and business units losing confidence in analytics altogether.

The path forward requires strategic leadership approaches that address both quality crises and long-term prevention through systematic organizational change.

Break the quality bottleneck with clear ownership models

Quality problems persist because nobody owns them. When data quality issues arise, teams often spend more time debating responsibility than fixing the underlying problems.

Who's actually accountable when your customer dashboard shows contradictory revenue numbers? In most organizations, the answer is "everyone and no one"—a recipe for quality chaos that scales with your data complexity.

One solution lies in domain-specific ownership that mirrors your business structure. Consider assigning data domain owners who understand both the technical characteristics and the business context of their data assets. These owners become your quality champions, accountable for standards, incident response, and continuous improvement within their domains.

But ownership without coordination creates new silos. Quality councils that span organizational boundaries bring together domain owners, technical teams, and business stakeholders. They provide escalation forums for cross-domain issues while establishing organization-wide standards that prevent different business units from working at cross purposes.

Document decision-making authority at each escalation level. Quality incidents shouldn't languish in bureaucratic processes while business impact accumulates.

There is not one single option that always works best for every organization, but the most successful ownership models always include built-in feedback mechanisms that surface when accountability structures aren't working. Track resolution times by domain and ownership handoffs between teams—patterns reveal where your org chart conflicts with actual data flows.

When the same quality issues repeatedly bounce between owners, that's a signal your domain boundaries need redrawing, not more escalation procedures.

Unify fragmented quality tools before they multiply problems

Most organizations accidentally create quality chaos through tool proliferation. Each business unit deploys its preferred monitoring solution, creating isolated pockets that miss cross-system issues and duplicate remediation efforts.

The result? Teams discover the same quality problem multiple times through different tools, then spend more energy coordinating fixes than implementing them.

Start by mapping your current quality landscape. Document every tool, integration point, and gap where quality issues currently slip through. This assessment reveals the true cost of fragmentation, typically far beyond licensing fees when you factor in coordination overhead and missed incidents.

Unified quality platforms that integrate with existing infrastructure enable gradual consolidation without operational disruption. Rather than forcing wholesale replacement, these platforms should provide consistent monitoring capabilities while working alongside your current investments.

During consolidation, resist the temptation to migrate everything simultaneously. Leading organizations follow a "strangler fig" pattern—gradually routing quality checks through the unified platform while legacy tools handle existing workflows.

This approach lets you validate the new platform's effectiveness on non-critical data before migrating mission-critical monitoring, reducing the risk of creating quality blind spots during transition.

Common data quality metrics and definitions become crucial as you consolidate. Without standardization, comparing improvements across domains becomes impossible, making it difficult to prioritize investments where they'll deliver maximum business impact.

Transform quality monitoring from reactive to predictive intelligence

Discovering quality problems after they've already damaged business decisions represents the expensive failure mode most organizations accept as inevitable. But this reactive approach creates constant firefighting that overwhelms teams and erodes confidence in data-driven decision making.

What if your systems could predict quality issues before they occur? Automated monitoring that catches problems before downstream propagation changes the entire dynamic from crisis response to prevention.

Deploy monitoring that evaluates data against both technical constraints and business rules. These systems should flag anomalies indicating emerging problems, not just current failures. Quality scoring systems that translate technical metrics into business impact assessments help executives understand whether issues affect critical customer processes or minor internal reporting.

Real-time visibility through quality dashboards enables proactive intervention. Track trends in quality metrics rather than just current states—small problems become major crises when left unaddressed.

Early warning systems should alert stakeholders when metrics trend toward problematic thresholds, including context about potential business impact and recommended remediation actions.

However, prediction accuracy becomes your new quality metric. Monitor how often your early warning systems correctly identify actual quality degradation versus false alarms. High false-positive rates erode team confidence faster than no monitoring at all.

Calibrate alert thresholds based on historical patterns and business tolerance for different types of quality issues—customer-facing data demands higher sensitivity than internal reporting metrics.

Not all of this will (or can) happen overnight, but a commitment to transforming quality monitoring sets your organization on a path of continual improvement and greater resilience as new data priorities inevitably arise.

Eliminate the anarchy trap in quality standards

The democratization of data access creates a dangerous paradox. Business users gain self-service analytics capabilities but lack governance frameworks to ensure consistent quality standards. The result? Fragmented definitions and contradictory insights undermine the entire organization's data credibility.

This "enabled with anarchy" scenario surfaces when different teams reach contradictory conclusions from the same underlying data. Marketing reports record-breaking lead generation while sales insist pipeline quality has declined, and both teams are working from data they believe is accurate.

Quality controls must be embedded directly into self-service platforms rather than bolted on as separate gatekeeping functions. Users should automatically inherit appropriate standards based on their roles and data sensitivity without manual configuration.

The real test of governed self-service comes during crisis situations. When business teams discover urgent quality issues in their self-service workflows, do they have clear escalation paths that don't require bypassing governance controls?

Design emergency procedures that maintain auditability while enabling rapid response—quality incidents often reveal gaps between theoretical governance and practical business needs. Standardized quality requirements across user personas prevent confusion when business analysts and data scientists apply different validation approaches to similar data.

Quality templates and reusable components enable proper validation without requiring deep technical expertise, addressing common scenarios like duplicate detection, completeness validation, and outlier identification.

Design resilient systems that adapt to inevitable schema changes

Schema changes represent the most disruptive quality challenge in modern data environments, yet most organizations treat them as exceptional events requiring manual intervention. This creates brittleness that scales poorly with organizational growth and data complexity.

Schema evolution strategies should anticipate and accommodate changes without breaking downstream dependencies. Versioning approaches that maintain backward compatibility while enabling necessary improvements prevent the all-or-nothing upgrades that typically cause quality disasters.

Flexible data pipelines gracefully handle schema variations rather than failing catastrophically when structures change. Intelligent mapping capabilities that adapt to common modifications automatically reduce the manual overhead that makes schema changes so disruptive.

Coordinate modifications across affected systems through change management processes that include impact assessment, testing protocols, and rollback procedures. Monitor for schema drift before it creates quality problems—systems should compare actual structures against expected schemas and alert teams when variations exceed acceptable thresholds.

Communication protocols become as critical as technical capabilities during schema evolution. Downstream consumers need advance notice and clear migration timelines, but they also need confidence that changes won't break their existing workflows unexpectedly.

Establish "schema contracts" that define what types of changes require coordination versus what can happen transparently.

Harness AI advances to accelerate quality improvement initiatives

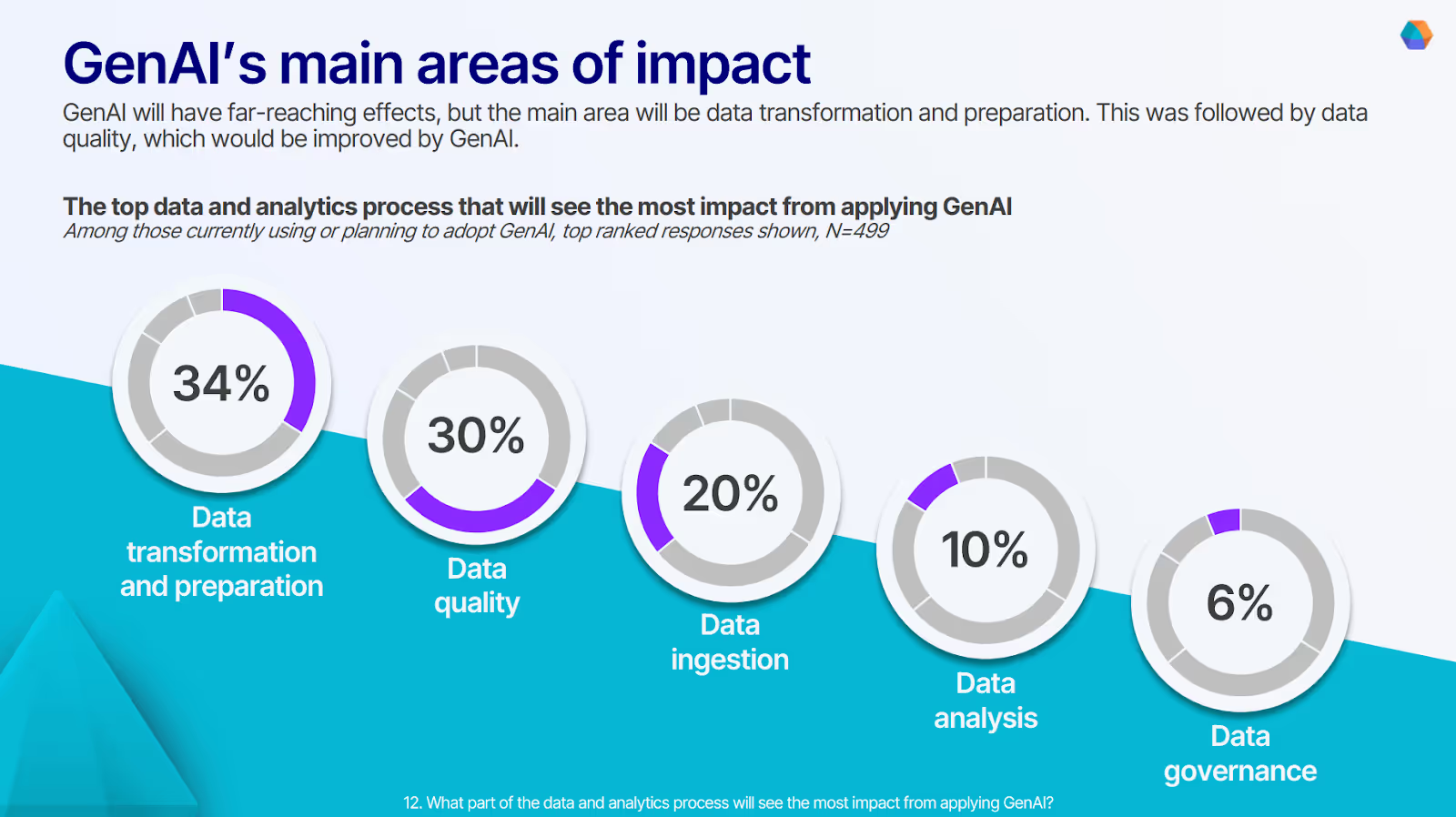

While 30% of the surveyed organizations recognize GenAI's potential impact on data quality processes, translating this potential into practical improvements remains challenging. AI technologies offer unprecedented automation opportunities at scales that manual processes cannot achieve.

How can you move beyond recognition to implementation? AI-powered anomaly detection learns normal patterns and identifies deviations indicating quality issues. These systems process volumes and complexities that overwhelm traditional rule-based approaches, catching subtle problems that manual review might miss.

Intelligent data profiling automatically identifies quality patterns and suggests validation rules, reducing the time required for monitoring new data sources. Machine learning models predict quality issues based on historical metrics and environmental factors, enabling proactive remediation rather than reactive detection.

AI-assisted remediation data workflows also suggest appropriate fixes for common issues while maintaining human oversight for complex scenarios, reducing manual effort without compromising decision quality.

Break free from quality bottlenecks that stifle innovation

Data quality represents more than a technical challenge—it's a strategic capability that enables faster decision-making, reduces operational risks, and unlocks new business opportunities. Organizations that treat quality improvement as a business transformation initiative rather than an IT project consistently achieve superior outcomes and sustained competitive advantages.

Here’s how Prophecy addresses enterprise data quality challenges through a unified platform:

- Embedded quality validation that automatically applies appropriate quality checks based on data sensitivity and user roles, preventing quality issues rather than just detecting them after they occur

- Visual pipeline development with built-in quality profiling that enables business users to implement proper quality controls without requiring deep technical expertise

- Unified governance framework that eliminates quality gaps between disconnected systems while maintaining consistent standards across the entire data ecosystem

- AI-powered quality assistance that accelerates quality detection and suggests appropriate remediation actions, enabling teams to resolve issues faster while learning best practices

- Enterprise-scale reliability that maintains quality standards even as data volumes and user numbers grow, ensuring quality improvements sustain long-term business growth

To transform persistent quality challenges into competitive advantages through systematic organizational change, explore our webinar Self-Service Data Preparation Without the Risk.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar

Analytics as a Team Sport: Why Data Is Everyone’s Job Now