How to Build a Data Quality Framework Without Creating Bottlenecks

Stop drowning in unreliable data. Learn how to build a data quality framework that balances governance with accessibility.

Organizations now have more data than ever—but why is it harder than ever to trust it? Data volumes grow exponentially. Quality practices struggle to keep pace. The gap between availability and trustworthiness continues to widen.

This widening gap creates a painful paradox for enterprises today: drowning in data while starving for insights. Decision-makers stare at dashboards they can't fully trust, wondering if the numbers reflect reality or fantasy.

The result? Critical business decisions either stall completely while teams debate data accuracy, or worse, leaders revert to "gut feeling".

Without reliable data, even the most sophisticated AI and BI tools become expensive window dressing rather than competitive advantages.

A structured data quality framework solves this problem by establishing systematic approaches to ensuring data reliability, consistency, and value. By implementing proven quality controls, organizations transform unreliable data into strategic assets that drive competitive advantage.

What is a data quality framework?

A data quality framework is a structured system of processes, policies, metrics, and tools designed to consistently assess, improve, and maintain the quality of data across an organization.

Unlike ad hoc approaches to quality management, data quality frameworks provide comprehensive coverage across data sources, establish clear standards, and align quality efforts with business priorities.

Effective frameworks bridge the gap between technical data management and business outcomes, ensuring that quality efforts focus on the elements most critical to organizational success. They establish not just what constitutes "good" data but also how to measure, monitor, and improve quality in a systematic way.

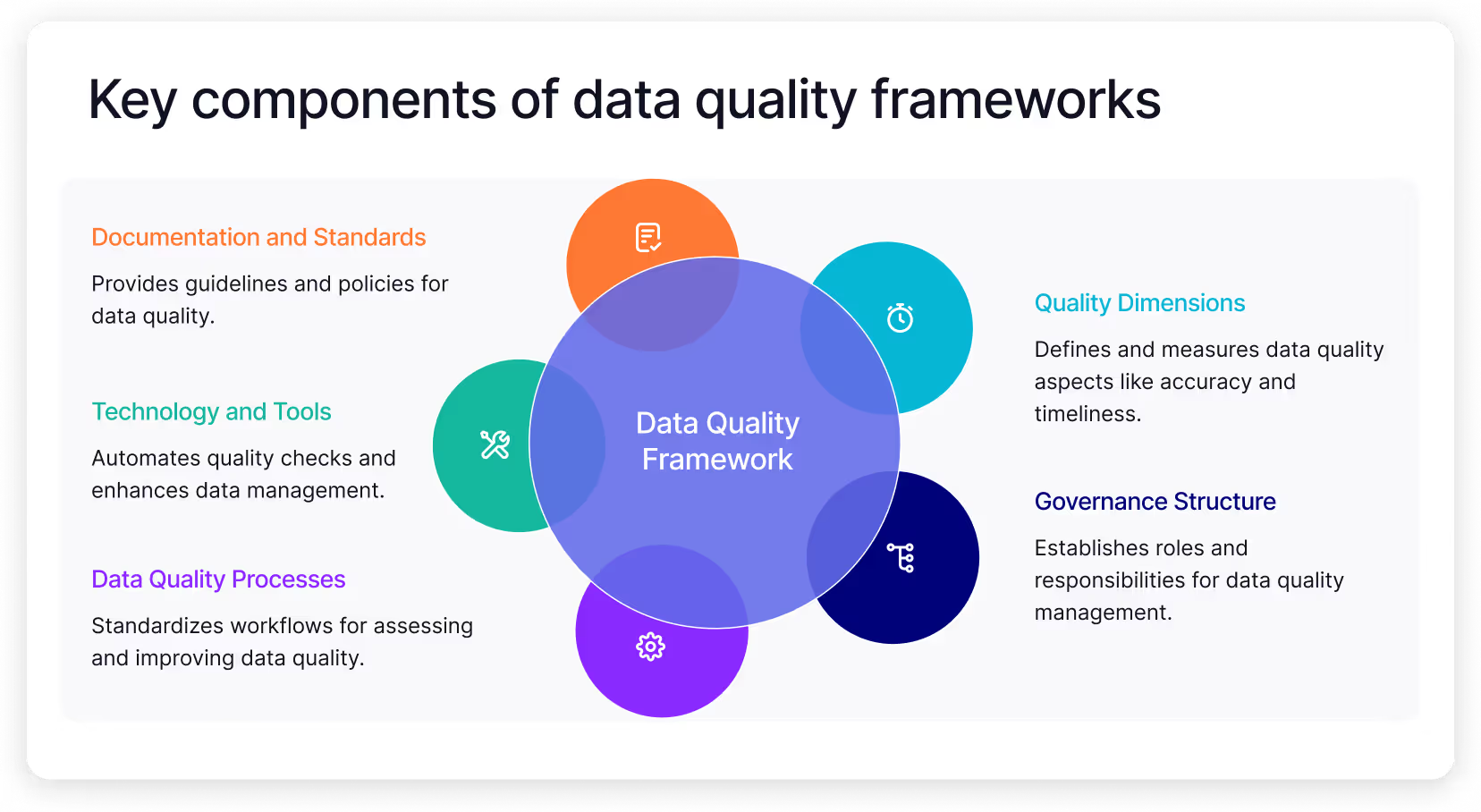

Key components of data quality frameworks

Here are the components that make up a data quality framework:

- Quality dimensions and metrics: Clear definitions of what constitutes quality data, including accuracy, completeness, consistency, timeliness, and relevance. These dimensions are paired with specific, measurable indicators and data quality metrics, that allow objective quality assessment across datasets.

- Governance structure and ownership: Defined roles and responsibilities for data quality management, following effective data governance models, including executive sponsorship, data stewards, and operational teams. This structure ensures accountability for quality at all levels of the organization.

- Data quality processes: Standardized workflows for assessing, improving, and monitoring data quality. These include data profiling, cleansing procedures, data quality checks, data transformation techniques, validation rules, and data quality monitoring practices that catch issues before they impact business operations.

- Technology and tools: Solutions that automate quality checks, data profiling, cleansing, and monitoring, including AI-enhanced ETL processes and data catalogs that enhance data management and accessibility. This technology layer enables consistent application of quality rules at scale without creating bottlenecks in data flows.

- Documentation and standards: Clear policies, guidelines, and quality requirements that define acceptable thresholds for different data types and use cases. These standards ensure consistent application of quality practices across the organization.

Benefits of data quality frameworks

Organizations derive several benefits from implementing a data quality framework:

- Enhanced decision making: Provide leaders with reliable information they can trust, eliminating doubt about data accuracy that often delays critical decisions. When executives can confidently rely on data, they make faster, more effective strategic choices.

- Operational efficiency: Reduce the time teams spend finding, fixing, and debating data issues, redirecting those resources to value-creating activities. Organizations with mature quality frameworks spend less time on data preparation and validation, enabling governed self-service analytics and reducing IT bottlenecks.

- Regulatory compliance: Meet increasingly stringent regulatory requirements around data accuracy and documentation, avoiding costly penalties and reputational damage. Quality frameworks provide the audit trails and evidence needed to demonstrate compliance.

- Improved customer experience: Deliver more personalized, timely customer interactions based on accurate customer data. When customer information is reliable, every touchpoint becomes more relevant and effective.

- Innovation enablement: Create a foundation for advanced analytics, AI, and machine learning initiatives that depend on high-quality training data. Poor quality data is the leading cause of AI project failures, making quality frameworks essential for innovation.

Common examples of data quality frameworks

Several established data quality frameworks offer proven approaches to building quality management systems. Each framework emphasizes different aspects of quality management, from statistical rigor to business alignment to technology implementation.

Data quality assessment framework (DQAF)

Developed by the International Monetary Fund (IMF), the DQAF provides a structured methodology for assessing data quality with particular emphasis on statistical standards and practices. Though originally designed for economic and financial data, its principles apply across domains.

The DQAF evaluates quality across five dimensions: integrity, methodological soundness, accuracy and reliability, serviceability, and accessibility. For each dimension, it provides specific criteria and indicators that organizations can use to benchmark their quality practices.

Organizations that implement DQAF benefit from its comprehensive approach to metadata management and documentation. The framework's emphasis on methodological transparency helps establish trust in data products while providing clear remediation paths when issues arise.

Total data quality management (TDQM)

Pioneered by MIT researchers, TDQM applies the principles of total quality management to information systems. This framework treats data as a product with consumers, producers, and manufacturing processes that can be continuously improved.

TDQM implements a cyclical approach with four phases: define (identify critical data attributes and requirements), measure (assess data quality against those requirements), analyze (identify quality gaps and root causes), and improve (implement fixes and preventive measures).

The framework's strength lies in its business orientation, focusing quality efforts on the aspects of data that matter most to its consumers. By treating data as a product, TDQM creates clear connections between quality initiatives and business value.

Data quality scorecard (DQS)

The data quality scorecard provides a measurement-focused approach that quantifies quality across multiple dimensions, typically using a 0-100 scale that makes quality assessments easily understandable to business users.

Unlike more technical frameworks, DQS emphasizes communication and stakeholder alignment by creating a common language for discussing data quality. The visual nature of scorecards helps organizations identify priority areas for improvement while tracking progress over time.

Organizations implementing DQS typically define custom scoring criteria based on their industry, data types, and business priorities. This flexibility makes the framework adaptable to various contexts while still providing consistent measurement approaches.

Six Sigma for data quality

Adapting the proven Six Sigma methodology to data management, this framework applies statistical process control techniques to data quality. The DMAIC process (Define, Measure, Analyze, Improve, Control) structures quality improvement efforts while emphasizing measurable results.

Six Sigma for Data Quality focuses heavily on reducing variation in data processes, using statistical methods to identify root causes of quality issues. Its data-driven approach appeals to organizations with mature measurement practices and quality management experience.

The framework's strength lies in its rigorous approach to process improvement and its emphasis on sustainable results. By applying statistical control principles, organizations can move beyond reactive fixes to establish stable, predictable data quality levels.

ISO 8000 data quality framework

The International Organization for Standardization's ISO 8000 offers a comprehensive set of standards specifically focused on data quality. This framework defines both the characteristics of high-quality data and the processes needed to create and maintain it.

ISO 8000 places particular emphasis on data exchange and interoperability, making it valuable for organizations that share data across boundaries. Its detailed specifications for master data are especially relevant for organizations managing product information, customer records, or other core reference data.

Organizations implementing ISO 8000 benefit from alignment with international standards and a common language for discussing quality with partners and suppliers. The framework's structured approach to metadata management creates a solid foundation for data governance initiatives.

Four challenges that make most data quality frameworks fail

Despite their potential benefits, many organizations struggle to successfully implement data quality frameworks. Let’s see why.

Disconnected silos that fragment quality efforts

Data quality initiatives often begin in isolated departments with different definitions, tools, and approaches. Without coordination, these silos create inconsistent standards where what qualifies as "good data" varies across the organization, leading to confusion and mistrust.

When quality rules differ between your systems, data moving across boundaries frequently triggers false positive errors or, worse, fails to catch real issues. These boundaries create visibility gaps where problems remain hidden until they impact downstream processes.

Organizations with fragmented approaches find themselves battling the same quality issues repeatedly as fixes applied in one system fail to address root causes in upstream data sources. This reactive pattern prevents the establishment of sustainable quality improvements and wastes resources on symptoms rather than causes.

Governance without implementation tools

Many organizations develop extensive documentation and policies but lack the practical means to implement them consistently. This creates a significant gap between theoretical quality standards and day-to-day data practices, resulting in frameworks that exist on paper but have little operational impact.

When quality policies require manual implementation, they inevitably become bottlenecks that slow down data workflows. Faced with delays, business teams often bypass quality checks to meet deadlines, creating shadow data processes that undermine governance efforts.

Even when teams intend to follow quality guidelines, the absence of automated tools means implementation varies based on individual interpretation and technical abilities. This inconsistency makes quality levels unpredictable and difficult to improve systematically.

Business-IT disconnect that undermines ownership

Quality initiatives frequently suffer from unclear ownership, with business teams assuming IT will handle quality while technical teams expect business stakeholders to define requirements. This confusion leads to a "nobody's job" situation where quality becomes an afterthought rather than a primary responsibility.

When business users don't understand how quality impacts their outcomes, they view governance as bureaucracy rather than value creation. Without clear connections between quality metrics and business results, frameworks lack the executive support needed for sustainable implementation.

Similarly, when technical teams implement quality controls without business context, they often focus on easy-to-measure technical metrics rather than the aspects of quality that truly drive business value. This misalignment creates sophisticated quality systems that fail to address the most critical business risks.

Excessive technical complexity that limits adoption

Quality frameworks frequently become overly complex, with hundreds of rules, elaborate scoring methodologies, and intricate documentation requirements. This complexity overwhelms users, particularly business stakeholders who need to incorporate quality practices into their daily workflows.

When frameworks require specialized expertise to implement or maintain, they create dependencies on scarce technical resources. This bottleneck not only slows quality improvements but also makes the framework vulnerable when key personnel leave.

Technical complexity often leads to rigid implementation approaches that fail to adapt to different business contexts or data types, resulting in a seven different systems syndrome where each new tool adds another layer of complexity.

This one-size-fits-all application of quality standards creates unnecessary friction, especially in rapidly evolving areas like analytics and AI development.

Quality framework abandonment after initial launch

Many quality initiatives start with enthusiasm but lose momentum after the initial implementation phase. When initial quality improvements fail to show immediate business impact, stakeholders redirect resources to other priorities, leaving frameworks partially implemented.

Organizations often struggle to maintain quality practices through leadership changes, reorganizations, and shifting priorities. Without institutional embedding, quality frameworks become dependent on individual champions whose departure can derail entire programs.

Quality initiatives also face the challenge of keeping pace with evolving data ecosystems. Frameworks designed for traditional structured data often struggle to adapt to new data types, cloud migrations, or the introduction of AI capabilities, making them increasingly irrelevant over time.

Five steps to build an effective data quality framework

Creating an effective data quality framework across your organization requires balancing technical controls with business relevance. Here are five steps to build a framework that drives real business impact while maintaining appropriate governance.

1. Establish ownership and accountability

Start by defining clear roles and responsibilities for data quality management across your organization. Identify executive sponsors who can provide strategic direction and remove obstacles. These sponsors connect quality initiatives to business objectives and ensure consistent support through organizational changes.

Appoint data stewards within business units who understand both the technical aspects of data and its business context. These stewards become the bridge between IT and business teams, translating quality requirements across this traditional divide and ensuring that technical implementations address business needs.

Create a governance committee that brings together stakeholders from across the organization to set priorities, resolve conflicts, and monitor progress. This cross-functional group ensures that quality initiatives remain aligned with changing business priorities while providing consistent direction across departments.

Document these roles in formal RACI matrices (Responsible, Accountable, Consulted, Informed) that clarify who makes decisions, who performs tasks, and who needs to be kept in the loop. This clarity prevents the "nobody's job" problem that derails many quality initiatives.

2. Define metrics that matter to the business

Identify the data quality dimensions most relevant to your organization based on business impact rather than technical convenience. Focus on the aspects of quality that directly affect critical business processes, customer experiences, or strategic decisions.

For each dimension, develop specific, measurable indicators that objectively assess quality levels. Leading organizations are using self-service analytics to democratize these metrics without sacrificing technical precision.

These metrics should be easy to understand for business users while being precise enough to guide technical implementation. For example, rather than a generic "completeness" metric, define completeness requirements for specific fields based on their business usage.

Create tiered quality thresholds based on data criticality and use cases. Not all data requires the same quality level – regulatory reporting may demand 100% accuracy, while marketing segmentation might accept 95%. These differentiated standards allow you to allocate resources to the areas of highest business impact.

Build visual quality dashboards that visualize current quality levels, trends, and improvement progress. These dashboards should connect quality metrics to business outcomes, helping stakeholders understand the impact of quality issues while tracking improvements over time.

3. Implement quality controls at the source

Address quality issues as close to the data source as possible, preventing bad data from entering your systems rather than cleaning it later. Implement validation rules at data entry points, whether human interfaces or automated feeds, to catch and correct issues immediately.

Deploy profiling tools that automatically analyze new data sources to identify potential quality issues before they're integrated into your data ecosystem. This proactive approach reveals structural problems, anomalies, and pattern violations that might otherwise remain hidden until they cause downstream failures.

Implement automated quality gates within your data pipelines that validate data against defined rules before allowing it to proceed to the next stage. These gates should generate clear error messages that help data producers understand and fix issues, creating a feedback loop that improves source quality over time.

Design your quality controls to balance thoroughness with performance, avoiding bottlenecks that might encourage workarounds. Controls should scale with data volumes and adapt to changing data structures without requiring constant reconfiguration.

4. Create feedback loops for continuous improvement

Establish mechanisms for tracking quality issues from detection through resolution, capturing not just the immediate fix but also root causes and preventive measures. This systematic approach transforms individual incidents into organizational learning opportunities.

Implement regular quality reviews that bring together data producers, custodians, and consumers to discuss current quality levels, challenges, and improvement priorities. These cross-functional conversations ensure that quality efforts remain focused on business impact rather than technical metrics.

Create automated alerts that notify stakeholders when quality levels fall below defined thresholds, enabling rapid response before issues impact business operations. These alerts should be tailored to different roles, providing technical details to engineers while giving business users actionable summaries.

Measure and report on the business impact of quality improvements, connecting quality metrics to operational KPIs, customer satisfaction scores, or financial outcomes. This connection demonstrates the value of quality initiatives and builds support for continued investment.

5. Scale with the right technology and tools

Select data quality tools that integrate with your existing data management platforms, avoiding isolated solutions that create additional silos. Native integration ensures that quality controls become part of your data workflows rather than separate processes that might be bypassed.

This approach helps in achieving self-service analytics compliance, balancing accessibility with governance.

Implement metadata management capabilities that maintain comprehensive information about data definitions, lineage, quality rules, and business context. This metadata creates the foundation for consistent quality assessment across diverse data types and systems.

Deploy automated monitoring that continuously assesses data quality against defined rules, detecting anomalies and degradation patterns before they impact business operations. This monitoring should cover both technical metrics, like format compliance, and business-oriented measures like value distribution changes.

Enable self-service quality assessment for business users, allowing them to understand data reliability without depending on technical teams. These capabilities should present quality information in business terms, helping non-technical users make informed decisions about when and how to use available data.

This balancing act between governance and democratization is emerging as a critical success factor for modern data quality initiatives.

Build an integrated data ecosystem with Prophecy

Creating a data quality framework that balances governance with accessibility remains challenging for most organizations. Traditional approaches force a choice between rigid controls that slow access and loose governance that compromises quality and security.

Prophecy's data integration platform resolves this dilemma by embedding quality into every step of your data lifecycle:

- Visual quality rule development that enables business users to define quality requirements without coding, while automatically generating high-quality implementation code

- Automated data profiling that identifies potential quality issues during pipeline development, catching problems before they reach production systems

- Built-in validation components that implement quality checks within data pipelines without creating performance bottlenecks

- Version control and collaboration that track quality rule changes and enable cross-team development while maintaining governance standards

- Native integration with cloud data platforms that leverages the performance and scale of modern data environments while applying consistent quality controls

- Comprehensive lineage tracking that connects quality metrics to data sources, transformations, and business usage, creating full visibility into quality impacts

- AI-assisted data quality that recommends validation rules, detects anomalies, and accelerates quality implementation through intelligent assistance

To overcome the challenge of balancing rigid governance controls with rapid data access needs, explore How to Assess and Improve Your Data Integration Maturity to implement a data quality framework that reduces engineering bottlenecks while maintaining compliance.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The AI Data Prep & Analysis Opportunity

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar