Why Neither Data Consistency nor Integrity Alone Is Enough

Learn why data consistency vs integrity isn't an either-or choice. Discover how both work together.

For modern businesses, the quality of your data directly impacts the quality of your decisions. Yet many organizations struggle with fundamental data reliability issues that undermine trust and lead to costly mistakes.

Two critical concepts—data consistency and data integrity—form the foundation of reliable information systems, but they're often confused or treated as interchangeable.

Organizations that fail to distinguish between consistency and integrity often implement the wrong solutions for their data problems—investing in expensive replication systems when simple validation rules would suffice, or adding strict data constraints while ignoring synchronization issues.

In this article, we explore the critical distinctions between data consistency and integrity, providing practical implementation strategies that help you build reliable data systems in increasingly complex environments.

Comparing data consistency vs data integrity

Data consistency ensures information remains synchronized across systems, while data integrity guarantees the accuracy and validity of that information—you need both because even perfectly synchronized data is useless if it's fundamentally incorrect:

While this comparison provides a high-level overview, let's explore the specific differences that matter most for your data architecture.

Different domains of control

Data consistency operates primarily in distributed systems and databases, focusing on how information behaves across multiple instances and over time. When designing for consistency, you're addressing questions of data synchronization, transaction boundaries, and conflict resolution between systems, often implemented using modern database types.

In contrast, data integrity operates within the domain of data structures and relationships, often implemented through structured data layers like the medallion architecture.

It focuses on whether individual values and connections between records meet defined rules and constraints, regardless of where the data resides. Integrity concerns remain relevant even in single-system environments where consistency issues don't apply.

This domain difference explains why different teams often take responsibility for each aspect—system architects and database administrators typically own consistency concerns, while data stewards and application developers focus on integrity rules.

Different time perspectives

Consistency fundamentally addresses temporal questions: Does the data look the same to all users at a given moment? If I make a change, when will other systems reflect that change? How do we handle situations where changes happen simultaneously in different locations?

Integrity takes a more structural and atemporal view: Does this data follow our defined rules? Are relationships between records preserved correctly? Do the values make logical sense within our business context? These questions remain valid regardless of when you examine the data.

This time perspective difference explains why you can have perfectly accurate data (high integrity) that differs between systems (poor consistency), or synchronized data (high consistency) that violates business rules (poor integrity).

Different implementation approaches

Consistency requires mechanisms that control how and when changes propagate through systems—transaction management protocols, replication technologies, cache invalidation strategies, and conflict resolution policies. These mechanisms primarily operate at the infrastructure and database levels, often invisible to application users.

Integrity relies on validation rules, constraints, checksums, and business logic that enforce data correctness. These controls typically appear at data creation and modification points, implemented through database constraints, application logic, and data pipeline validation steps.

The practical implication is that addressing consistency often requires infrastructure changes, while improving integrity typically involves enhancing application logic and validation rules. Organizations with strong DevOps practices may find consistency easier to address, while those with robust development teams might excel at integrity controls.

Different failure modes and recovery strategies

When consistency fails, you face conflicting versions of the same information across different systems or instances. Users accessing different parts of your infrastructure get different answers to the same questions. Recovery typically involves reconciliation processes that identify and resolve conflicts, often requiring business decisions about which version to treat as authoritative.

Integrity failures produce data that violates defined rules or logical constraints—impossible values, broken relationships between records, or information that doesn't match business reality. Recovery requires identifying corrupt or invalid data, applying correction rules, or rolling back to the last known valid state.

What is data consistency?

Data consistency ensures that information remains the same across all instances, representations, and systems where it appears. In simple systems with a single database, consistency happens almost automatically.

But modern distributed architectures—with replicated databases, caching layers, microservices, and cloud data engineering practices—make consistency significantly more challenging.

When data changes, consistency mechanisms control how those changes propagate throughout your systems. These mechanisms balance the competing demands of performance, availability, and accuracy, often requiring tradeoffs based on your specific business requirements.

A payment processing system provides a clear example: when a customer makes a purchase, their account balance must decrease while the merchant's account increases. These changes must happen together (as a consistent transaction) or not at all.

If one part completes while the other fails, the system enters an inconsistent state where money essentially disappears or is created out of nowhere.

Data inconsistency can emerge from various sources within your architecture:

- Distributed transactions failing to complete across multiple systems or databases

- Replication lag between primary and secondary database instances

- Cache invalidation failures that leave outdated information in memory

- Race conditions where concurrent updates conflict without proper resolution

- Network partitions isolating parts of your system and preventing synchronization

- Schema mismatches between systems interpreting the same data differently

- Batch processing delays that create temporary but significant inconsistencies

- Integration failures between systems that should share the same information

- Manual data entry in multiple systems without synchronization mechanisms

- Improper exception handling that leaves transactions partially completed

Three types of data consistency

Different applications have different consistency requirements based on business needs.

- Transactional consistency: Enforces ACID properties within database operations, ensuring related changes occur together or not at all. This prevents partial updates that would leave your data in an invalid state.

- Eventual consistency: Guarantees that if no new updates occur, all replicas will eventually return the same value. This model accepts temporary inconsistencies to improve performance and availability, making it suitable for applications where absolute real-time consistency isn't critical.

- Strong (or strict) consistency: Ensures all users see the most recent version of the data, regardless of which replica they access. This model creates the illusion of a single copy of the data, even when it's distributed across multiple systems or locations.

What is data integrity?

Data integrity ensures your information remains accurate, valid, and uncorrupted throughout its lifecycle. While consistency focuses on data being the same across systems, integrity addresses whether that data is correct and reliable according to defined rules and constraints.

Integrity creates the foundation of trust in your data. Without it, even perfectly consistent information becomes worthless—or worse, actively harmful—as decisions based on corrupt or invalid data lead to poor outcomes. For example, a customer address with a valid format but an incorrect postal code may be consistent across all systems but still lacks integrity.

The need for integrity spans your entire data architecture, from initial collection through transformation, storage, and eventual use. At each stage, different integrity mechanisms protect against corruption, ensure validity against business rules, and maintain relationships between related data elements.

As data volumes grow and architectures become more complex, maintaining integrity becomes increasingly challenging. Manual processes that worked at smaller scales break down, requiring automated validation, monitoring, and correction systems to ensure data remains trustworthy.

Five types of data integrity

Different aspects of integrity address specific types of data quality concerns, forming a comprehensive framework for ensuring reliable information:

- Entity integrity: Ensures each record has a unique identifier that distinguishes it from all other records of the same type. This fundamental integrity type prevents duplicate records and ensures each entity can be uniquely identified and referenced.

- Referential integrity: Maintains proper relationships between related data across tables or collections. It ensures that when one record references another, the referenced record actually exists, preventing orphaned relationships and maintaining the logical structure of your data.

- Domain integrity: Ensures data values fall within defined acceptable ranges or sets of values. This type of integrity confirms that individual fields contain only logical and appropriate values, regardless of their relationships to other data.

- Semantic integrity: Ensures data follows business rules and conventions beyond simple validation rules. This type of integrity confirms that information makes logical sense within your specific business context, even when it passes basic validation checks.

- Physical integrity: Protects data against corruption during storage, transmission, or processing. This fundamental integrity type ensures the bits and bytes of your data remain exactly as intended, without unauthorized changes or degradation.

How to ensure both data consistency and integrity

Maintaining high-quality data requires a comprehensive approach that addresses both consistency and integrity through every stage of your data lifecycle. These practical strategies help you establish robust quality controls without sacrificing performance or agility.

Implement data quality at the source

Start your quality journey at data entry points, where most problems originate. Design user interfaces with built-in validation that prevents invalid inputs before they enter your systems. Add contextual help that explains format requirements and business rules, making it easier for users to provide correct information the first time.

For automated data sources like APIs or IoT devices, implement preprocessing validation that checks incoming data before accepting it. Create notification systems that alert you to validation failures so you can address the root causes before they contaminate your downstream systems.

Make data producers responsible for the quality of what they create by establishing clear quality standards and feedback loops. When producers understand how their data will be used and receive feedback on quality issues, they become partners in maintaining both consistency and integrity from the beginning of the data lifecycle.

Design for appropriate consistency levels

Not all data requires the same level of consistency. Analyze your business requirements to determine where strong consistency is essential and where eventual consistency might be acceptable. Financial transactions typically require strong consistency for accuracy, while content delivery can often tolerate eventual consistency to improve performance.

Choose database technologies and architecture patterns that support your required consistency models. For strong consistency needs, consider traditional relational databases or distributed systems with synchronous replication. For eventual consistency, explore NoSQL databases or caching layers that prioritize availability and performance.

Document your consistency requirements clearly for development teams so they understand the trade-offs involved. Create testing scenarios that verify your implemented consistency levels match business expectations, ensuring your architecture decisions translate into appropriate runtime behavior.

Build layered validation throughout your pipelines

Create a defense-in-depth approach to data quality by implementing validation at multiple points in your data pipelines. Start with basic type checking and format validation, then add increasingly sophisticated business rule validation and data quality checks as data moves through your systems.

Automate validation wherever possible to catch issues early without slowing down your processes. Use data quality tools that integrate with your pipelines to verify integrity against defined rules and expectations. Configure these tools to log violations while taking appropriate actions based on severity, blocking critical errors while just flagging minor issues for later resolution.

Document your validation rules in a central repository that all teams can access. This documentation provides a shared understanding of quality standards and helps prevent the creation of conflicting or redundant validation checks across different systems.

Establish clear ownership for data assets

Assign specific ownership for each significant data asset in your organization. These data owners become responsible for defining quality requirements, approving changes, and addressing issues when they arise. Without clear ownership, quality problems often go unresolved, leading to data team dysfunctions as different teams assume someone else will fix them.

Create cross-functional data governance teams that bring together technical and business perspectives. These teams develop and maintain quality standards, review significant changes, and help resolve conflicts between competing requirements. By combining diverse viewpoints, they ensure your quality efforts align with both technical capabilities and business needs.

Implement regular data quality reviews that bring together data owners, technical teams, and business stakeholders. These reviews examine quality metrics, discuss emerging issues, and plan improvement initiatives. Making quality a regular topic of discussion keeps it top of mind throughout your organization.

Monitor quality metrics and respond to issues

Develop a comprehensive data quality monitoring strategy that tracks both consistency and integrity metrics across your data ecosystem. Monitor key indicators like replication lag, validation failures, constraint violations, and user-reported issues to identify potential problems before they impact business operations.

Establish clear thresholds and alerts for quality metrics based on business requirements. Configure your monitoring systems to notify appropriate teams when metrics approach or exceed these thresholds. Differentiate between informational alerts and critical issues requiring immediate action to prevent alert fatigue.

Create incident response procedures specifically for data quality issues. Define escalation paths, investigation steps, and remediation processes for different types of problems. Train teams on these procedures so they can respond quickly and effectively when quality incidents occur, minimizing their impact on business operations.

Transform your data pipeline with Prophecy's unified approach

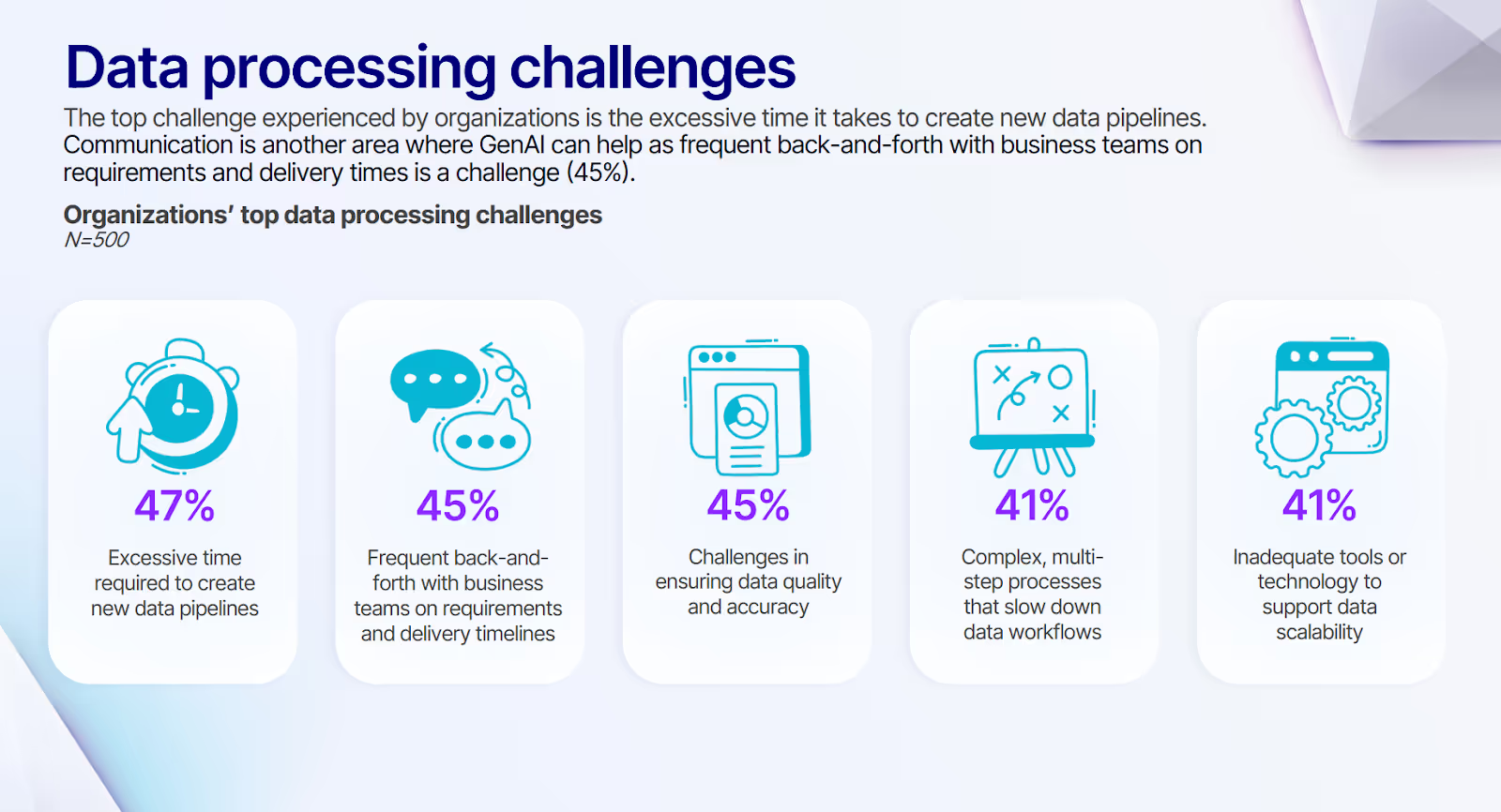

Building pipelines that maintain both consistency and integrity traditionally required extensive hand-coding and constant vigilance. This approach has created data quality challenges, as identified in our research, where 45% of organizations cite quality and accuracy as their top data processing concern.

Here’s how Prophecy changes this equation with a unified platform:

- Visual pipeline development: Create sophisticated data transformations through an intuitive interface that generates high-quality code automatically, eliminating the disconnect between business requirements and technical implementation that leads to quality issues.

- Built-in validation framework: Embed data quality checks directly into your pipelines with pre-built components that verify both consistency and integrity at every stage, catching issues early before they propagate through your systems.

- Metadata-driven governance: Maintain comprehensive information about data lineage, quality metrics, and business context through an integrated catalog that makes quality standards visible and actionable throughout your organization.

- Unified development environment: Bridge the gap between business and technical teams with a collaborative platform where both can contribute to pipeline development, ensuring quality requirements are correctly implemented from the start.

- Automated deployment workflows: Move quality-verified pipelines from development to production through standardized processes that maintain consistency and integrity controls at every step, eliminating the quality degradation that often occurs during manual deployments.

To overcome the data quality challenges that plague organizations due to manual coding and overloaded engineering teams, explore 4 data engineering pitfalls and how to avoid them to accelerate innovation through a unified pipeline approach that ensures both consistency and integrity.

Ready to give Prophecy a try?

You can create a free account and get full access to all features for 21 days. No credit card needed. Want more of a guided experience? Request a demo and we’ll walk you through how Prophecy can empower your entire data team with low-code ETL today.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empowers everyone to speed data transformation

Get started with the Low-code Data Transformation Platform

Meet with us at Gartner Data & Analytics Summit in Orlando March 11-13th. Schedule a live 1:1 demo at booth #600 with our team of low-code experts. Request a demo here.

Related content

A generative AI platform for private enterprise data

Introducing Prophecy Generative AI Platform and Data Copilot

Ready to start a free trial?

Lastest posts

The Future of Data Is Agentic: Key Insights from Our CDO Magazine Webinar

Analytics as a Team Sport: Why Data Is Everyone’s Job Now